Measurement System Identification: Not Measurement Sensitive

NASA TECHNICAL STANDARD

NASA-STD-8739.8B

National Aeronautics and Space Administration

Approved: 2022-09-08

Superseding NASA-STD-8739.8A

Software Assurance and Software Safety

This official draft has not been approved and is subject to modification.

DO NOT USE PRIOR TO APPROVAL.

NASA-STD-8739.8B

2 of 70

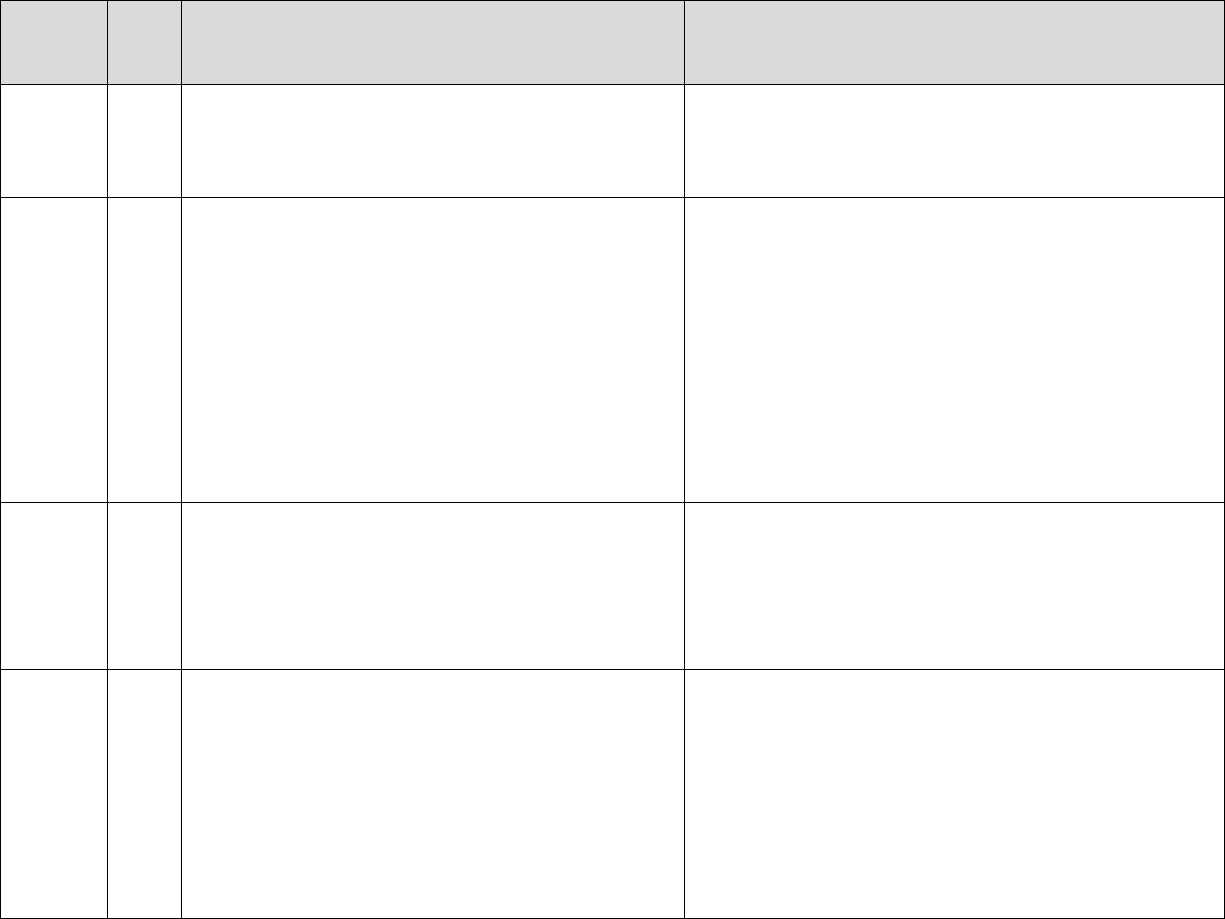

DOCUMENT HISTORY LOG

| Status | Document Revision | Approval Date | Description |
|---|---|---|---|
| Baseline | Initial | 2004-07-28 | Initial Release |
| | 1 | 2005-05-05 | Administrative changes to the Preface; Paragraphs 1.1, 1.4, 1.5, 2.1.1, 2.2.2, 3, 5.1.2.3, 5.4.1.1, 5.6.2, 5.8.1.2, 6.7.1.a, 7.3.2, 7.3.3, 7.5, 7.5.1; Table 1; Appendix A; and Appendix C to reflect NASA Transformation changes, to reflect the release of NASA Procedural Requirements (NPR) 7150.2, NASA Software Engineering Requirements, and to make minor editorial changes. Note: Some paragraphs have changed pages as a result of these changes. Change indications identify only pages where content has changed. |
| | A | 2020-06-10 | The revised document makes the following significant changes: combines the NASA Software Assurance Standard (NASA-STD-8739.8) with the NASA Software Safety Standard (NASA-STD-8719.13), reduces the requirements, aligns with updates to NPR 7150.2, adds a section on the requirements for performing IV&V, and moves guidance text to an Electronic Handbook. This change combines the updates to NASA-STD-8739.8 and the content of NASA-STD-8719.13. The update includes the NASA software safety requirements and cancels the NASA-STD-8719.13 standard. |
| | B | 2022-09-08 | Brings the standard into alignment with the update to NPR 7150.2D. Updates the Appendix A table containing the additional areas to consider when identifying software causes in Hazard Analysis. |


FOREWORD

This NASA Technical Standard is published by the National Aeronautics and Space

Administration (NASA) to provide uniform engineering and technical requirements for

processes, procedures, practices, and methods that have been endorsed as standard for NASA

facilities, programs, and projects, including requirements for selection, application, and design

criteria of an item.

This standard was developed by the NASA Office of Safety and Mission Assurance (OSMA).

Requests for information, corrections, or additions to this standard should be submitted to the OSMA

by email to Agency-SMA-Policy-Feedback@mail.nasa.gov or via the “Email Feedback” link at

https://standards.nasa.gov.

Russ Deloach

Approval Date

NASA Chief, Safety and Mission Assurance


TABLE OF CONTENTS

DOCUMENT HISTORY LOG ................................................................................................... 2

FOREWORD................................................................................................................................. 3

TABLE OF CONTENTS ............................................................................................................. 4

LIST OF APPENDICES .............................................................................................................. 4

LIST OF TABLES ........................................................................................................................ 4

1. SCOPE ............................................................................................................................ 5

1.1 Document Purpose ........................................................................................................... 5

1.2 Applicability .................................................................................................................... 6

1.3 Documentation and Deliverables ..................................................................................... 6

1.4 Request for Relief ............................................................................................................ 6

2. APPLICABLE AND REFERENCE DOCUMENTS ................................................. 7

2.1 Applicable Documents ..................................................................................................... 7

2.2 Reference Documents ...................................................................................................... 7

2.3 Order of Precedence ......................................................................................................... 9

3. ACRONYMS AND DEFINITIONS ........................................................................... 11

3.1 Acronyms and Abbreviations ........................................................................................ 11

3.2 Definitions...................................................................................................................... 12

4. SOFTWARE ASSURANCE AND SOFTWARE SAFETY REQUIREMENTS ... 19

4.1 Software Assurance Description .................................................................................... 19

4.2 Safety-Critical Software Determination ........................................................................ 20

4.3 Software Assurance and Software Safety Requirements ............................................... 20

4.4 Independent Verification &Validation .......................................................................... 52

4.5 Principles Related to Tailoring the Standard Requirements .......................................... 60

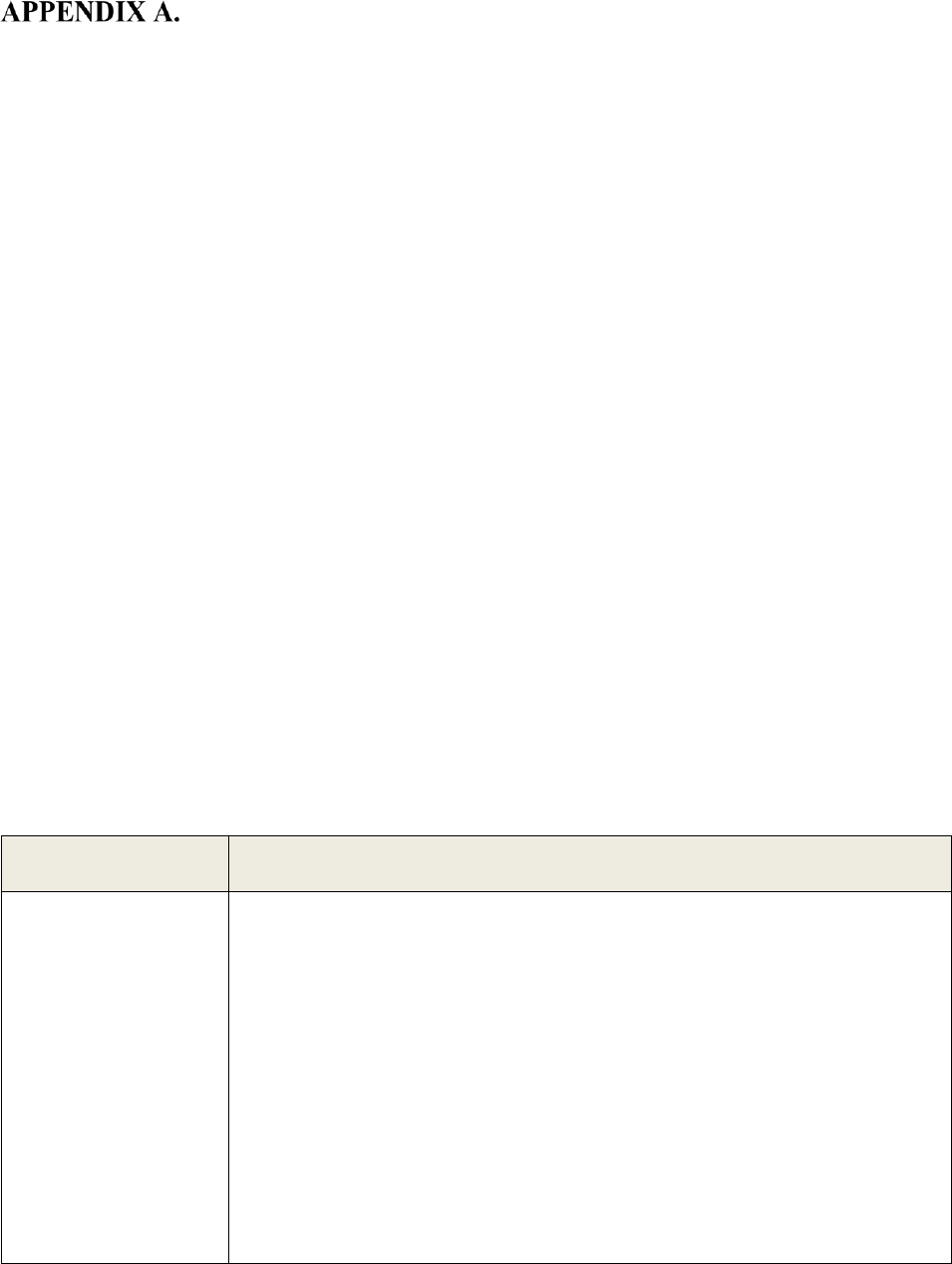

LIST OF APPENDICES

Guidelines for the Hazard Development involving software .............................. 62

LIST OF TABLES

Table 1. Software Assurance and Software Safety Requirements Mapping Matrix .................... 21

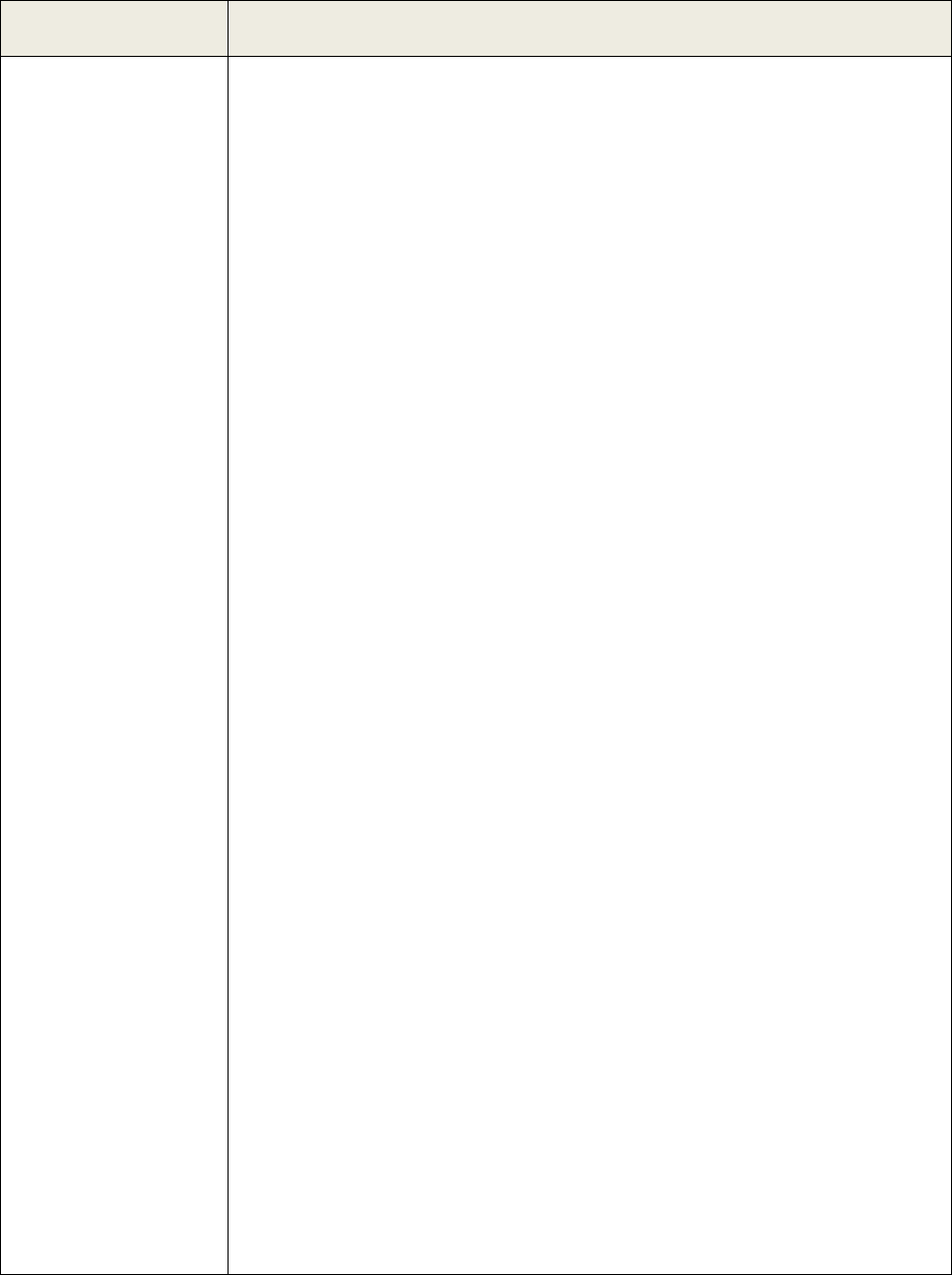

Table 2. Additional considerations to consider when identifying software causes in hazard

analysis .......................................................................................................................................... 62


SOFTWARE ASSURANCE AND SOFTWARE SAFETY STANDARD

1. SCOPE

1.1 Document Purpose

The purpose of the Software Assurance and Software Safety Standard is to define the

requirements to implement a systematic approach to software assurance, software safety, and

Independent Verification and Validation (IV&V) for software created, acquired, provided, used,

or maintained by or for NASA. Various personnel in the program, project, engineering, facility,

or Safety and Mission Assurance (SMA) organizations can perform the activities required to

satisfy these requirements. The Software Assurance and Software Safety Standard provides a

basis for personnel to perform software assurance, software safety, and IV&V activities

consistently throughout the life of the software.

The Software Assurance and Software Safety Standard, in accordance with NPR

7150.2, NASA Software Engineering Requirements, supports the implementation of the software

assurance, software safety, and IV&V sub-disciplines. The application and approach to meeting

the Software Assurance and Software Safety Standard vary based on the system and software

products and processes to which they are applied. The Software Assurance and Software Safety

Standard stresses coordination between the software assurance sub-disciplines and system safety,

system reliability, hardware quality, system security, and software engineering to maintain the

system perspective and minimize duplication of effort.

The objectives of the Software Assurance and Software Safety Standard include the

following:

a. Ensuring that the processes, procedures, and products used to produce and sustain the

software conform to all specified requirements and standards that govern those processes,

procedures, and products.

(1) A set of activities that assess adherence to, and the adequacy of, the software processes

used to develop and modify software products.

(2) A set of activities that define and assess the adequacy of software processes to provide

evidence that establishes confidence that the software processes are appropriate for and

produce software products of suitable quality for their intended purposes.

b. Determining the degree of software quality obtained by the software products.

c. Ensuring that the software systems are safe and that the software safety-critical requirements

are followed.

d. Ensuring that the software systems are secure.


e. Employing rigorous analysis and testing methodologies to identify objective evidence and

conclusions to provide an independent assessment of critical products and processes

throughout the life cycle.

The Software Assurance and Software Safety Standard is compatible with all software

life cycle models. The Software Assurance and Software Safety Standard does not impose a

particular life cycle model on a software project.

In this standard, all mandatory actions (i.e., requirements) are denoted by statements

containing the term “shall.” The term “may” denotes a discretionary privilege or permission;

“can” denotes statements of possibility or capability; “should” denotes a good practice and is

recommended but not required; “will” denotes an expected outcome; and “are/is” denotes

descriptive material.
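As an illustration only, the keyword conventions above could be applied mechanically when screening a document for mandatory statements. The following is a minimal sketch, not part of this standard: the function name `classify_statement`, the lookup table, and the precedence order (checking "shall" first) are assumptions made for the example.

```python
import re

# Keyword meanings taken from the paragraph above.
# The checking order (dict order) is an assumption of this sketch.
TERM_MEANINGS = {
    "shall": "mandatory requirement",
    "may": "discretionary privilege or permission",
    "can": "possibility or capability",
    "should": "recommended good practice (not required)",
    "will": "expected outcome",
}


def classify_statement(text: str) -> str:
    """Return the meaning of the first matching keyword.

    Statements containing none of the keywords are treated as
    descriptive material.
    """
    lowered = text.lower()
    for term, meaning in TERM_MEANINGS.items():
        # Whole-word match so that, e.g., "scan" does not match "can".
        if re.search(rf"\b{term}\b", lowered):
            return meaning
    return "descriptive material"
```

A simple keyword match such as this cannot resolve statements that contain more than one term, so any result would still need human review.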

1.2 Applicability

This standard is approved for use by NASA Headquarters and NASA Centers,

including Component Facilities and Technical and Service Support Centers. This NASA

Technical Standard applies to the assurance of software created by or for NASA projects,

programs, facilities, and activities and defines the requirements for those activities. This directive

is applicable to the Jet Propulsion Laboratory, a Federally Funded Research and Development

Center, only to the extent specified in the NASA/Caltech Prime Contract. This standard may also

apply to other contractors, grant recipients, or parties to agreements to the extent specified or

referenced in their contracts, grants, or agreements.

1.3 Documentation and Deliverables

The Software Assurance and Software Safety Standard is not intended to designate the

format of program/project/facility documentation and deliverables. The software assurance and

software safety data, information, and plans may be considered to be quality records with a

retention period as specified in NRRS 1441.1. The format of the documentation is a

program/project/facility decision. The software assurance and software safety organizations

should keep records, reports, metrics, analyses, and trending results and should keep copies of

their project plans for future reference and improvements. The software assurance and software

safety plans (e.g., the Software Assurance Plan) can be standalone documents or incorporated

within other documents (e.g., part of a Software Management Plan, a Software Development

Plan or part of a Program or Project Safety and Mission Assurance (SMA) plan).

1.4 Request for Relief

Tailoring of this standard for application to a specific program or project is documented

as part of program or project requirements and approved by the responsible Center Technical

Authority (TA) in accordance with NPR 8715.3, NASA General Safety Program Requirements.

Section 4.5 of this standard contains the principles related to tailoring this standard’s

requirements.


2. APPLICABLE AND REFERENCE DOCUMENTS

2.1 Applicable Documents

The applicable documents are accessible via the NASA Technical Standards System at

https://standards.nasa.gov, or the NASA Online Directives Information System

at https://nodis3.gsfc.nasa.gov/main_lib.cfm, or may be obtained directly from the Standards

Developing Organizations.

NPR 1400.1 NASA Directives and Charters Procedural Requirements

NPR 7120.5 NASA Space Flight Program and Project Management

Requirements

NPR 7120.10 Technical Standards for NASA Programs and Projects

NPR 7150.2 NASA Software Engineering Requirements

NPR 8000.4 Agency Risk Management Procedural Requirements

NPR 8715.3 NASA General Safety Program Requirements

NASA-HDBK-2203 NASA Software Engineering Handbook

NASA-HDBK-4008 Programmable Logic Devices Handbook

NRRS 1441.1 NASA Records Retention Schedules

2.2 Reference Documents

The reference documents listed in this section are not incorporated by reference within this

standard but may provide further clarification and guidance.

Government Documents

NPD 2810.1 NASA Information Security Policy

NPD 8720.1 NASA Reliability and Maintainability Program Policy

NPR 1441.1 NASA Records Management Program Requirements

NPR 2210.1 Release of NASA Software

NPR 2810.1 Security of Information and Information Systems

NPR 2830.1 NASA Enterprise Architecture Procedures

NPR 2841.1 Identity, Credential, and Access Management


NPR 7120.7 NASA Information Technology Program and Project

Management Requirements

NPR 7120.8 NASA Research and Technology Program and Project

Management Requirements

NPR 7120.10 Technical Standards for NASA Programs and Projects

NPR 7120.11 Health and Medical Technical Authority Implementation

NPR 7123.1 NASA Systems Engineering Processes and Requirements

NPR 8000.4 Agency Risk Management Procedural Requirements

NASA-STD-1006 Space System Protection Standard

NASA-STD-2601 Minimum Cybersecurity Requirements for Computing

Systems

NASA-STD-7009 Standard for Models and Simulations

NASA-STD-8729.1 NASA Reliability And Maintainability Standard For

Spaceflight And Support Systems

NASA-HDBK-7009 NASA Handbook for Models and Simulations: An

Implementation Guide for NASA-STD-7009

NASA-HDBK-8709.22 Safety and Mission Assurance Acronyms, Abbreviations,

and Definitions

NASA-HDBK-8739.23 NASA Complex Electronics Handbook for Assurance

Professionals

NIST SP 800-37 Risk Management Framework

NIST SP 800-40 Guide to Enterprise Patch Management Planning:

Preventive Maintenance for Technology

NIST SP 800-53 Security and Privacy Controls for Information Systems and

Organizations

NIST SP 800-70 National Checklist Program for Information Technology

products: Guidelines for Checklist Users and Developers

NIST SP 800-115 Technical Guide to Information Security Testing and

Assessment


NFS 1813.301-79 Supporting Federal Policies, Regulations, and NASA

Procedural Requirements

NFS 1852.237-72 Access to Sensitive Information

NFS 1852.237-73 Release of Sensitive Information

Non-Government Documents

CMMI-DEV, V2.0 CMMI® for Development, Version 2.0

IEEE 730 Institute of Electrical and Electronics Engineers (IEEE)

Standard for Software Quality Assurance Processes

IEEE 828 IEEE Standard for Configuration Management in Systems

and Software Engineering

IEEE 982.1 IEEE Standard Measures of the Software Aspects of

Dependability

IEEE 1012 IEEE Standard for System, Software, and Hardware

Verification and Validation

IEEE 1028 IEEE Standard for Software Reviews and Audits

IEEE 1633 IEEE Recommended Practice on Software Reliability

IEEE 15026-1 Systems and software engineering--Systems and software

assurance--Part 1: Concepts and vocabulary

IEEE 29119-4 Software and systems engineering -- Software testing --

Part 4: Test techniques

ISO 26514 Systems and software engineering–requirements for

designers and developers of user documentation

ISO 24765 System and Software Engineering – Vocabulary

2.3 Order of Precedence

This standard establishes requirements to implement a systematic approach to Software

Assurance, Software Safety, and IV&V for software created, acquired, provided, or maintained

by or for NASA but does not supersede or waive established Agency requirements found in

other documentation.

Conflicts between the Software Assurance and Software Safety Standard and other

requirements documents are resolved by the responsible SMA and engineering TA(s), per NPR


1400.1, NASA Directives and Charters Procedural Requirements, and NPR 7120.10, Technical

Standards for NASA Programs and Projects.


3. ACRONYMS AND DEFINITIONS

3.1 Acronyms and Abbreviations

CMMI Capability Maturity Model Integration

COTS Commercial-Off-The-Shelf

GOTS Government-Off-The-Shelf

HDBK Handbook

IEEE Institute of Electrical and Electronics Engineers

IPEP IV&V Project Execution Plan

IV&V Independent Verification and Validation

MC/DC Modified Condition/Decision Coverage

MOTS Modified-Off-The-Shelf

NASA National Aeronautics and Space Administration

NIST National Institute of Standards and Technology

NPD NASA Policy Directive

NPR NASA Procedural Requirements

NRRS NASA Records Retention Schedule

OSMA Office of Safety and Mission Assurance

OSS Open Source Software

PLC Programmable Logic Controller

PROM Programmable Read-Only Memory

RTOS Real-Time Operating System

SMA Safety and Mission Assurance

SP Special Publication

SWE Software Engineering

TA Technical Authority


3.2 Definitions

Accredit. The official acceptance of a software development tool, model, or simulation,

including associated data, to use for a specific purpose.

Acquirer. The entity or individual who specifies the requirements and accepts the resulting

software products. The Acquirer is usually NASA or an organization within the Agency but can

also refer to the prime contractor-subcontractor relationship.

Analyze. Review results in-depth, look at relationships of activities, examine methodologies in

detail, and follow methodologies such as Failure Mode and Effects Analysis, Fault Tree

Analysis, trending, and metrics analysis. Examine processes, plans, products, and task lists for

completeness, consistency, accuracy, reasonableness, and compliance with requirements. The

analysis may include identifying missing, incomplete, or inaccurate products, relationships,

deliverables, activities, required actions, etc.

Approve. When the responsible originating official, or designated decision authority, of a

document, report, condition, etc., has agreed, via their signature, to the content and indicates the

document is ready for release, baselining, distribution, etc. Usually, one “approver” and several

stakeholders need to “concur” for official acceptance of a document, report, etc. For example, the

project manager would approve the Software Development Plan, but SMA would concur on it.

Assess. Judge results against plans or work product requirements. Assess includes judging for

practicality, timeliness, correctness, completeness, compliance, evaluation of rationale, etc.,

reviewing activities performed, and independently tracking corrective actions to closure.

Assure. When software assurance personnel make certain that others have performed the

specified software assurance, management, and engineering activities.

Audit. Formal review to assess compliance with hardware or software requirements,

specifications, baselines, safety standards, procedures, instructions, codes, and contractual and

licensing requirements. (Source NPR 8715.3)

Bi-directional Traceability. Association among two or more logical entities that are discernible

in either direction (to and from an entity). (Source IEEE Definition)
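For illustration, bi-directional traceability can be pictured as two lookup tables built from the same set of links, one for each direction. The sketch below is an assumption-laden example, not a prescribed method: the identifiers (`REQ-1`, `TEST-1`) and the function names `build_trace` and `untraced` are hypothetical.

```python
from collections import defaultdict


def build_trace(links):
    """Build forward and backward lookup tables from (source, target) pairs."""
    forward = defaultdict(set)   # e.g., requirement -> test cases
    backward = defaultdict(set)  # e.g., test case -> requirements
    for source, target in links:
        forward[source].add(target)
        backward[target].add(source)
    return forward, backward


def untraced(items, table):
    """Return the items that have no association in the given direction."""
    return sorted(item for item in items if not table.get(item))


# Hypothetical links between requirements and test cases.
links = [("REQ-1", "TEST-1"), ("REQ-1", "TEST-2"), ("REQ-2", "TEST-2")]
forward, backward = build_trace(links)
missing_tests = untraced(["REQ-1", "REQ-2", "REQ-3"], forward)
```

Keeping both tables makes it possible to ask either question directly: which tests cover a requirement, and which requirements justify a test.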

Concur. A documented agreement that a proposed course of action is acceptable.

Condition. (1) measurable qualitative or quantitative attribute that is stipulated for a

requirement and that indicates a circumstance or event under which a requirement applies; (2)

description of a contingency to be considered in the representation of a problem, or a reference to

other procedures to be considered as part of the condition; (3) true or false logical predicate; (4)

logical predicate involving one or more behavior model elements; (5) Boolean expression

containing no Boolean operators.

Configuration Item. (1) item or aggregation of hardware, software, or both that is designated

for configuration management and treated as a single entity in the configuration management

process; (2) component of an infrastructure or an item which is or will be under control of

configuration management; (3) aggregation of work products that is designated for configuration

management and treated as a single entity in the configuration management process; (4) any

system element or aggregation of system elements that satisfies an end use function and is

designated by the acquirer for separate configuration control; (5) item or aggregation of software

that is designed to be managed as a single entity and its underlying components, such as

documentation, data structures, scripts. (Source IEEE Definition)

Note: Configuration items can vary widely in complexity, size, and type, ranging from an entire

system including all hardware, software, and documentation, to a single module or a minor

hardware component. CIs have four common characteristics: defined functionality; replaceable

as an entity; unique specification; formal control of form, fit, and function. See Also: hardware

configuration item, computer software configuration item, configuration identification, and

critical item.

Confirm. Check to see that activities specified in the software engineering requirements are

adequately done and evidence of the activities exists as proof. Confirm includes ensuring

activities are done completely and correctly and have expected content according to approved

tailoring.

Critical. A condition that may cause severe injury or occupational illness, or major property

damage to facilities, systems, or flight hardware.

Deliverable. Product or item that has to be completed and delivered under the terms of an

agreement or contract. Products may also be deliverables, e.g., software requirements

specifications and detailed design documents.

Develop. To produce or create a product or document and mature or advance the product or

document content.

Ensure. When software assurance or software safety personnel perform the specified software

assurance and software safety activities themselves.

Event. (1) occurrence of a particular set of circumstances; (2) external or internal stimulus used

for synchronization purposes; (3) change detectable by the subject software; (4) fact that an action

has taken place; (5) singular moment in time at which some perceptible phenomenological

change (energy, matter, or information) occurs at the port of a unit.

Failure. Inability of a system, subsystem, component, or part to perform its required function

within specified limits. (Source NPR 8715.3)

Hazard. A state or a set of conditions, internal or external to a system that has the potential to

cause harm. (Source NPR 8715.3)

Hazard Analysis. Identifying and evaluating existing and potential hazards and the

recommended mitigation for the hazard sources found.

Hazard Control. Means of reducing the risk of exposure to a hazard. (Source NPR 8715.3)


Hazardous Operation/Work Activity. Any operation or other work activity that, without the

implementation of proper mitigations, has a high potential to result in loss of life, serious injury

to personnel or public, or damage to property due to the material or equipment involved or the

nature of the operation/activity itself.

Independent Verification and Validation. Verification and validation performed by an

organization that is technically, managerially, and financially independent of the development

organization. (Source IEEE Definition)

Inhibit. Design feature that prevents the operation of a function.

Insight. An element of Government surveillance that monitors contractor compliance using

Government-identified metrics and contracted milestones. Insight is a continuum that can range

from low intensity such as reviewing quarterly reports to high intensity such as performing

surveys and reviews. (Source NPR 7123.1)

Maintain. To continue to have; to keep in existence, to stay up-to-date and correct.

Mission Critical. (1) Item or function that must retain its operational capability to assure no

mission failure (i.e., for mission success). (2) An item or function, the failure of which may

result in the inability to retain operational capability for mission continuation if corrective action

is not successfully performed. (Source NASA-STD-8729.1)

Mission Success. Meeting all mission objectives and requirements for performance and safety.

(Source NPR 8715.3)

Monitor. (1) software tool or hardware device that operates concurrently with a system or

component and supervises, records, analyzes, or verifies the operation of the system or

component; (2) collect project performance data with respect to a plan, process, produce

performance measures, and report and disseminate performance information.

Participate. To be a part of the activity, audit, review, meeting, or assessment.

Perform. Software assurance does the action specified. Perform may include making

comparisons of independent results with similar activities performed by engineering; performing

audits; and reporting results to engineering.

Product. A result of a physical, analytical, or another process. The item delivered to the

customer (e.g., hardware, software, test reports, data) and the processes (e.g., system

engineering, design, test, logistics) that make the product possible. (Source NASA-HDBK-

8709.22)

Program. A strategic investment by a Mission Directorate or Mission Support Office that has a

defined architecture and technical approach, requirements, funding level, and management

structure that initiates and directs one or more projects. A program implements a strategic

direction that the Agency has identified as needed to accomplish Agency goals and objectives.

(Source NPR 7120.5)


Program Manager. A generic term for the person who is formally assigned to be in charge of

the program. A program manager could be designated as a program lead, program director, or

some other term, as defined in the program's governing document. A program manager is

responsible for the formulation and implementation of the program, per the governing document

with the sponsoring Mission Directorate Associate Administrator (MDAA).

Project. A specific investment having defined goals, objectives, requirements, life cycle cost, a

beginning, and an end. A project yields new or revised products or services that directly address

NASA’s strategic needs. Projects may be performed wholly in-house; by Government, industry,

academia partnerships; or through contracts with private industry. (Source NPR 7150.2)

Project Manager. The entity or individual who accepts the resulting software products. Project

managers are responsible and accountable for the safe conduct and successful outcome of their

program or project in conformance with governing programmatic requirements. The project

manager is usually NASA but can also refer to the prime contractor-subcontractor relationship.

Provider. A Provider is a NASA or contractor organization that is tasked by an accountable

organization (i.e., the Acquirer) to produce a product or service. (Source NASA-HDBK-8709.22)

Regression testing. (1) selective retesting of a system or component to verify that modifications

have not caused unintended effects and that the system or component still complies with its

specified requirements; (2) testing following modifications to a test item or its operational

environment, to identify whether regression failures occur. (Source IEEE Definition)

Risk. The combination of (1) the probability (qualitative or quantitative) of experiencing an

undesired event, (2) the consequences, impact, or severity that would occur if the undesired

event were to occur, and (3) the uncertainties associated with the probability and consequences.

(Source NPR 8715.3)

Note: A risk is an uncertain future event, or combination of events, that could threaten the

achievement of performance objectives or requirements. A "problem," on the other hand,

describes an issue that is certain or near certain to exist now, or an event that has been

determined with certainty or near certainty to have occurred and is threatening the achievement

of an objective or requirement. It is generally at the discretion of the decision authority to define

at what level of certainty (i.e., likelihood) an event may be classified and addressed as a

“problem” rather than as a “risk.” A risk may be conditional upon a problem, i.e., an existing

issue may or may not develop into performance-objective consequences, or the extent to which it

does so may be uncertain at present.
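To make the risk/problem distinction in the note concrete, the sketch below records the three elements of a risk and labels an item a "problem" once its likelihood reaches a certainty level chosen by the decision authority. This is an illustrative assumption only: the class name `RiskItem`, the function `classify`, and the default threshold of 0.95 are hypothetical and are not specified by this standard.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    description: str
    likelihood: float   # probability of the undesired event, 0.0 to 1.0
    consequence: str    # consequence, impact, or severity if the event occurs
    uncertainty: str    # notes on uncertainty in likelihood and consequence


def classify(item: RiskItem, problem_threshold: float = 0.95) -> str:
    """Label an item 'problem' when it is certain or near certain, else 'risk'.

    problem_threshold is a hypothetical value; the note above leaves the
    level of certainty to the discretion of the decision authority.
    """
    if not 0.0 <= item.likelihood <= 1.0:
        raise ValueError("likelihood must be between 0 and 1")
    return "problem" if item.likelihood >= problem_threshold else "risk"
```

Passing a different `problem_threshold` models a decision authority that draws the line between "risk" and "problem" elsewhere.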

Risk Posture. A characterization of risk based on conditions (e.g., criticality, complexity,

environments, performance, cost, schedule) and a set of identified risks, taken as a whole, which

allows an understanding of the overall risk or provides a target risk range or level, which can

then be used to support decisions being made.

Safe State. A system state in which hazards are inhibited, and all hazardous actuators are in a

non-hazardous state. The system can have more than one Safe State.
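The Safe State definition combines two conditions that must hold together. As a minimal sketch under stated assumptions, the check below represents a system state with two hypothetical dictionaries (hazard name to "inhibited?" and actuator name to "in a hazardous state?"); the names `SystemState` and `is_safe_state` are illustrative and do not come from this standard.

```python
from dataclasses import dataclass, field


@dataclass
class SystemState:
    # Hypothetical representation: hazard name -> True if the hazard is inhibited.
    hazards_inhibited: dict = field(default_factory=dict)
    # Hypothetical representation: actuator name -> True if in a hazardous state.
    actuators_hazardous: dict = field(default_factory=dict)


def is_safe_state(state: SystemState) -> bool:
    """Return True when every hazard is inhibited and no actuator is hazardous."""
    all_inhibited = all(state.hazards_inhibited.values())
    none_hazardous = not any(state.actuators_hazardous.values())
    return all_inhibited and none_hazardous
```

Because the predicate depends only on the two conditions, several distinct system configurations can satisfy it, which is consistent with a system having more than one Safe State. Note that in this sketch a state with no recorded hazards or actuators evaluates as safe, so a real check would first confirm that the hazard list is complete.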


Safety. Freedom from those conditions that can cause death, injury, occupational illness,

damage to or loss of equipment or property, or damage to the environment. In a risk-informed

context, safety is an overall mission and program condition that provides sufficient assurance

that accidents will not result from the mission execution or program implementation, or, if they

occur, their consequences will be mitigated. This assurance is established by means of the

satisfaction of a combination of deterministic criteria and risk criteria. (Source NPR 8715.3)

Safety Analysis. Generic term for a family of analyses, which includes but is not limited to,

preliminary hazard analysis, system (subsystem) hazard analysis, operating hazard analysis,

software hazard analysis, sneak circuit, and others. Software safety analysis consists of a number

of tools and techniques to identify safety risks and formulate effective controls. These techniques

are used to help identify the hazards during the Hazard Analysis process, which in turn identifies

the safety-critical software. The Safety Analysis techniques often used to support the Hazard

Analysis are the Software Fault Tree Analysis and the Software Failure Modes and Effects

Analysis. The Software Fault Tree Analysis and the Software Failure Modes and Effects

Analysis are used to help identify hazards, hazard causes, and potential failure modes.

Safety-Critical. A term describing any condition, event, operation, process, equipment, or

system that could cause or lead to severe injury, major damage, or mission failure if performed

or built improperly or allowed to remain uncorrected. (Source NPR 8715.3)

Safety-Critical Software. Software is classified as safety-critical if the software is determined

by and traceable to a hazard analysis. Software is classified as safety-critical if it meets at least

one of the following criteria:

a. Causes or contributes to a system hazardous condition/event,

b. Controls functions identified in a system hazard,

c. Provides mitigation for a system hazardous condition/event,

d. Mitigates damage if a hazardous condition/event occurs,

e. Detects, reports, and takes corrective action if the system reaches a potentially hazardous state.
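The "at least one of the following criteria" rule above can be sketched as a logical OR over criteria a through e. The example below is illustrative only: the class `SoftwareHazardRole`, its field names, and the function `is_safety_critical` are hypothetical, and the flag values are assumed to come from, and be traceable to, a hazard analysis.

```python
from dataclasses import astuple, dataclass


@dataclass
class SoftwareHazardRole:
    """Findings for one software item, assumed to come from a hazard analysis."""
    causes_or_contributes_to_hazard: bool = False  # criterion a
    controls_hazard_function: bool = False         # criterion b
    mitigates_hazard: bool = False                 # criterion c
    mitigates_damage: bool = False                 # criterion d
    detects_reports_corrects: bool = False         # criterion e


def is_safety_critical(role: SoftwareHazardRole) -> bool:
    """Return True if at least one of criteria a through e is met."""
    return any(astuple(role))
```

For example, a software item whose only role is to provide mitigation for a hazardous condition (criterion c) would still be classified as safety-critical under this rule.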

Software. (1) computer programs, procedures, and associated documentation and

data pertaining to the operation of a computer system; (2) all or a part of the programs,

procedures, rules, and associated documentation of an information processing system; (3) program or set

of programs used to run a computer; (4) all or part of the programs which process or support the

processing of digital information; (5) part of a product that is the computer program or the set of

computer programs. This definition applies to software developed by NASA, software developed

for NASA, software maintained by or for NASA, Commercial-Off-The-Shelf (COTS),

Government-Off-The-Shelf (GOTS), Modified-Off-The-Shelf (MOTS), Open Source Software

(OSS), reused software components, auto-generated code, embedded software, the software

executed on processors embedded in programmable logic devices (see NASA-HDBK-4008),

legacy, heritage, applications, freeware, shareware, trial or demonstration software, and OSS

components. (Source NPR 7150.2)

Software Assurance. (1) a set of activities that assess adherence to, and the adequacy of the

software processes used to develop and modify software products. Software assurance also

determines the degree to which the desired results from software quality control are being

obtained. (2) set of activities that define and assess the adequacy of software processes to provide

evidence that establishes confidence that the software processes are appropriate for and produce

software products of suitable quality for their intended purposes. (Source IEEE Definition)

Note: A key attribute of software assurance is the objectivity of the software assurance function

with respect to the project.

Software Developer. A person, organization, or system that develops software based on

program/project requirements.

Software Life Cycle. The period that begins when a software product is conceived and ends

when the software is no longer available for use. The software life cycle typically includes a

concept phase, requirements phase, design phase, implementation phase, test phase, installation

and checkout phase, operation and maintenance phase, and sometimes, retirement phase.

Software Peer Review. An examination of a software product to detect and identify software

anomalies, including errors and deviations from standards and specifications. (Source IEEE

Definition)

Software Safety. The aspects of software engineering, system safety, and software assurance,

that provide a systematic approach to identifying, analyzing, tracking, mitigating, and controlling

hazards and hazardous functions of a system where software may contribute either to the

hazard(s) or to its detection, mitigation or control, to ensure safe operation of the system.

Software Validation. (1) confirmation, through the provision of objective evidence, that the

requirements for a specific intended use or application have been fulfilled (2) process of

providing evidence that the system, software, or hardware and its associated products satisfy

requirements allocated to it at the end of each life cycle activity, solve the right problem (e.g.,

correctly model physical laws, implement business rules, and use the proper system

assumptions), and satisfy intended use and user needs (3) the assurance that a product, service, or

system meets the needs of the customer and other identified stakeholders (4) process of

evaluating a system or component during or at the end of the development process to determine

whether it satisfies specified requirements (5) confirmation in a timely manner, through

automated techniques where possible, through the provision of objective evidence, that the

requirements for a specific intended use or application have been fulfilled. (Source IEEE

Definition)

Note: Validation in a system life cycle context is the set of activities ensuring and gaining

confidence that a system is able to accomplish its intended use, goals, and objectives (meet

stakeholder requirements) in the intended operational environment. The right system has been

built or is operating to meet business objectives. Validation demonstrates that the system can be

used by the users for their specific tasks. "Validated" is used to designate the corresponding

status. Multiple validations can be carried out if there are different intended uses.

Software Verification. Confirmation that products properly reflect the requirements specified

for them. In other words, verification ensures that “you built it right.” (Source IEEE Definition)

Supplier. Any organization which provides a product or service to a customer. By this

definition, suppliers may include vendors, subcontractors, contractors, flight programs/projects,

and the NASA organization supplying science data to a principal investigator. The classical

definition of a supplier is a subcontractor, at any tier, performing contract services or producing

the contract articles for a contractor. (Source NASA-HDBK-8709.22)

System Safety. Application of engineering and management principles, criteria, and techniques

to optimize safety and reduce risks within the constraints of operational effectiveness, time, and

cost.

Tailoring. The process used to adjust a prescribed requirement to accommodate the needs of a

specific task or activity (e.g., program or project). Tailoring may result in changes, subtractions,

or additions to a typical implementation of the requirement. (Source NPR 7150.2)

Track. To follow and note the course or progress of the product.

4. SOFTWARE ASSURANCE AND SOFTWARE SAFETY REQUIREMENTS

4.1 Software Assurance Description

The Software Assurance activities provide a level of confidence that software is free

from vulnerabilities, either intentionally designed into the software or accidentally inserted at

any time during its life cycle, that the software functions in an intended manner, and that the

software does not function in an unintended manner. The objectives of the Software Assurance

and Software Safety Standard include the following:

a. Ensuring that the processes, procedures, and products used to produce and sustain the

software conform to all specified requirements and standards that govern those processes,

procedures, and products.

(a) A set of activities that assess adherence to, and the adequacy of the software

processes used to develop and modify software products.

(b) A set of activities that define and assess the adequacy of software processes to

provide evidence that establishes confidence that the software processes are appropriate

for and produce software products of suitable quality for their intended purposes.

b. Determining the degree of software quality obtained by the software products.

c. Ensuring that the software systems are safe and that the software safety-critical requirements

are followed.

d. Ensuring that the software systems are secure.

e. Employing rigorous analysis and testing methodologies to identify objective evidence and

conclusions to provide an independent assessment of critical products and processes throughout

the life cycle.

Project and SMA Management support of the software assurance function is essential

for software assurance, software safety, and IV&V processes to be effective. The software

assurance, software safety, and IV&V support include the following:

a. The Project and SMA Management are familiar with and understand the software assurance,

software safety, and IV&V function’s purposes, concepts, practices, and needs.

b. The Project and SMA Management provide the software assurance, software safety, and

IV&V activities with skilled resources (people, equipment, knowledge, methods, facilities,

and tools) to accomplish their project responsibilities.

c. The Project and SMA Management act upon information provided by the software assurance,

software safety, and IV&V function throughout a project.

The Software Assurance and Software Safety Standard’s requirements apply to

organizations in their roles as both Acquirers and Providers.

4.2 Safety-Critical Software Determination

Software is classified as safety-critical if the software is determined by and traceable to

a hazard analysis. Software is classified as safety-critical if it meets at least one of the following

criteria:

a. Causes or contributes to a system hazardous condition/event,

b. Controls functions identified in a system hazard,

c. Provides mitigation for a system hazardous condition/event,

d. Mitigates damage if a hazardous condition/event occurs,

e. Detects, reports, and takes corrective action if the system reaches a potentially hazardous state.

Note: See Appendix A for guidelines associated with addressing software in hazard definitions.

See Table 1, 3.7.1, SWE-205 for more details. Consideration for other independent means of

protection (software, hardware, barriers, or administrative) should be a part of the system

hazard definition process.
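
As an illustration only, the determination above can be sketched as a predicate over hazard analysis findings. The `HazardFindings` record and its field names are hypothetical and are not defined by this standard. The sketch also assumes that a component must both be traceable to a hazard analysis and meet at least one of criteria "a" through "e", which is one reading of the text.

```python
from dataclasses import dataclass


@dataclass
class HazardFindings:
    """Hypothetical summary of hazard analysis results for one software component."""
    traceable_to_hazard_analysis: bool = False
    causes_or_contributes: bool = False      # criterion a
    controls_hazard_functions: bool = False  # criterion b
    provides_mitigation: bool = False        # criterion c
    mitigates_damage: bool = False           # criterion d
    detects_reports_corrects: bool = False   # criterion e


def is_safety_critical(f: HazardFindings) -> bool:
    """Return True if the component meets at least one criterion and traces to a hazard analysis."""
    meets_criterion = any([
        f.causes_or_contributes,
        f.controls_hazard_functions,
        f.provides_mitigation,
        f.mitigates_damage,
        f.detects_reports_corrects,
    ])
    # Assumption of this sketch: traceability and a met criterion are both required.
    return f.traceable_to_hazard_analysis and meets_criterion
```

A component that only logs telemetry and matches no criterion would evaluate to not safety-critical under this sketch, while a component that controls a function identified in a system hazard would evaluate to safety-critical.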

4.3 Software Assurance and Software Safety Requirements

The responsible project manager shall ensure the performance of the software

assurance, software safety, and IV&V activities; the applicable requirements are defined in Table

1. In this document, the phrase “Software Assurance and Software Safety Tasks” means that the

roles and responsibilities for completing these requirements may be delegated within the project

consistent with the scope and scale of the project. The Center SMA Director designates SMA

TA(s) for programs, facilities, and projects, providing direction, functional oversight, and

assessment for all Agency software assurance, software safety, and IV&V activities.

Table 1. Software Assurance and Software Safety Requirements Mapping Matrix

NPR 7150.2 Section

SWE #

NPR 7150.2 Requirement

Software Assurance and Software Safety Tasks

3

Software Management Requirements

3.1

Software Life Cycle Planning

3.1.2

033

The project manager shall assess options for

software acquisition versus development.

a. Acquire an off-the-shelf software product that

satisfies the requirement.

b. Develop a software product or obtain the

software service internally.

c. Develop the software product or obtain the

software service through contract.

d. Enhance an existing software product or

service.

e. Reuse an existing software product or service.

f. Source code available external to NASA.

1. Confirm that the options for software acquisition

versus development have been evaluated.

2. Confirm the flow down of applicable software

engineering, software assurance, and software safety

requirements on all acquisition activities. (NPR

7150.2 and NASA-STD-8739.8).

3. Assess any risks with acquisition versus

development decision(s).

3.1.3

013

The project manager shall develop, maintain, and

execute software plans, including security plans,

that cover the entire software life cycle and, as a

minimum, address the requirements of this

directive with approved tailoring.

1. Confirm that all plans, including security plans, are

in place and have expected content for the life cycle

events, with proper tailoring for the classification of

the software.

2. Develop and maintain a Software Assurance Plan

following the content defined in NASA-HDBK-2203

for a software assurance plan, including software

safety.

3.1.4

024

The project manager shall track the actual results

and performance of software activities against the

software plans.

a. Corrective actions are taken, recorded, and

managed to closure.

b. Changes to commitments (e.g., software plans)

that have been agreed to by the affected groups

and individuals are taken, recorded, and

managed.

1. Assess plans for compliance with NPR 7150.2

requirements, NASA-STD-8739.8, including changes

to commitments.

2. Confirm closure of corrective actions

associated with the performance of software activities

against the software plans, including closure

rationale.

3. Confirm changes to commitments are recorded and

managed.

3.1.5

034

The project manager shall define and document

the acceptance criteria for the software.

1. Confirm software acceptance criteria are defined

and assess the criteria based on guidance in the

NASA Software Engineering Handbook, NASA-

HDBK-2203.

3.1.6

036

The project manager shall establish and maintain

the software processes, software documentation

plans, list of developed electronic products,

deliverables, and list of tasks for the software

development that are required for the project’s

software developers, as well as the action

required (e.g., approval, review) of the

Government upon receipt of each of the

deliverables.

1. Confirm the following are approved, implemented,

and updated per requirements:

a. Software processes, including software assurance,

software safety, and IV&V processes,

b. Software documentation plans,

c. List of developed electronic products, deliverables,

and

d. List of tasks required or needed for the project’s

software development.

2. Confirm that any required government actions are

established and performed upon receipt of

deliverables (e.g., approvals, reviews).

3.1.7

037

The project manager shall define and document

the milestones at which the software developer(s)

progress will be reviewed and audited.

1. Confirm that milestones for reviewing and auditing

software developer progress are defined and

documented.

2. Participate in project milestone reviews.

3.1.8

039

The project manager shall require the software

developer(s) to periodically report status and

provide insight into software development and

test activities; at a minimum, the software

developer(s) will be required to allow the project

manager and software assurance personnel to:

a. Monitor product integration.

b. Review the verification activities to ensure

adequacy.

c. Review trade studies and source data.

d. Audit the software development processes and

practices.

e. Participate in software reviews and technical

interchange meetings.

1. Confirm that software developer(s) periodically

report status and provide insight to the project

manager.

2. Monitor product integration.

3. Analyze the verification activities to ensure

adequacy.

4. Assess trade studies, source data, software reviews,

and technical interchange meetings.

5. Perform audits on software development processes

and practices at least once every two years.

6. Develop and provide status reports.

7. Develop and maintain a list of all software

assurance review discrepancies, risks, issues,

findings, and concerns.

8. Confirm that the project manager provides

responses to software assurance and software safety

submitted issues, findings, and risks and that the

project manager tracks software assurance and

software safety issues, findings, and risks to closure.

3.1.9

040

The project manager shall require the software

developer(s) to provide NASA with software

products, traceability, software change tracking

information and nonconformances, in electronic

format, including software development and

management metrics.

1. Confirm that software artifacts are available in

electronic format to NASA.

3.1.10

042

The project manager shall require the software

developer(s) to provide NASA with electronic

access to the source code developed for the

project in a modifiable format.

1. Confirm that software developers provide NASA

with electronic access to the source code generated

for the project in a modifiable form.

3.1.11

139

The project manager shall comply with the

requirements in this NPR that are marked with an

“X” in Appendix C consistent with their software

classification.

1. Assess that the project's software requirements,

products, procedures, and processes are compliant

with the NPR 7150.2 requirements per the software

classification and safety criticality for software.

3.1.12

121

Where approved, the project manager shall

document and reflect the tailored requirement in

the plans or procedures controlling the

development, acquisition, and deployment of the

affected software.

1. Confirm that any requirement tailoring in the

Requirements Mapping Matrix has the required

approvals.

2. Develop a tailoring matrix of software assurance

and software safety requirements.

3.1.13

125

Each project manager with software components

shall maintain a requirements mapping matrix or

multiple requirements mapping matrices against

requirements in this NPR, including those

delegated to other parties or accomplished by

contract vehicles or Space Act Agreements.

1. Confirm that the project maintains a requirements

mapping matrix (matrices) for all requirements in

NPR 7150.2.

2. Maintain the requirements mapping matrix

(matrices) for requirements in NASA-STD-8739.8.

3.1.14

027

The project manager shall satisfy the following

conditions when a COTS, GOTS, MOTS, OSS, or

reused software component is acquired or used:

a. The requirements to be met by the software

component are identified.

b. The software component includes

documentation to fulfill its intended purpose

(e.g., usage instructions).

c. Proprietary rights, usage rights, ownership,

warranty, licensing rights, transfer rights, and

conditions of use (e.g., required copyright,

author, and applicable license notices within the

software code, or a requirement to redistribute the

licensed software only under the same license

(e.g., GNU GPL, ver. 3, license)) have been

addressed and coordinated with Center

Intellectual Property Counsel.

d. Future support for the software product is

planned and adequate for project needs.

e. The software component is verified and

validated to the same level required to accept a

similar developed software component for its

intended use.

f. The project has a plan to perform periodic

assessments of vendor reported defects to ensure

the defects do not impact the selected software

components.

1. Confirm that the conditions listed in "a" through

"f" are complete for any COTS, GOTS, MOTS, OSS,

or reused software that is acquired or used.

3.2

Software Cost Estimation

3.2.1

015

To better estimate the cost of development, the

project manager shall establish, document, and

maintain:

a. Two cost estimate models and associated cost

parameters for all Class A and B software

projects that have an estimated project cost of $2

million or more.

b. One software cost estimate model and

associated cost parameter(s) for all Class A and

Class B software projects that have an estimated

project cost of less than $2 million.

c. One software cost estimate model and

associated cost parameter(s) for all Class C and

Class D software projects.

d. One software cost estimate model and

associated cost parameter(s) for all Class F

software projects.

1. Confirm that the required number of software cost

estimates are complete and include software

assurance cost estimate(s) for the project, including a

cost estimate associated with handling safety-critical

software and safety-critical data.
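
As a reading aid, the model counts in SWE-015 items "a" through "d" can be expressed as a small lookup. This is a sketch under stated assumptions: the function name is hypothetical, and raising an error for classes that the requirement does not list (for example, Class E) is a choice made here, not something the requirement states.

```python
def required_cost_models(software_class: str, estimated_cost_usd: float) -> int:
    """Return the number of cost estimate models SWE-015 calls for.

    Hypothetical helper; the thresholds follow items a-d of the requirement.
    """
    cls = software_class.strip().upper()
    if cls in ("A", "B"):
        # Item a: two models at $2 million or more; item b: one model below that.
        return 2 if estimated_cost_usd >= 2_000_000 else 1
    if cls in ("C", "D", "F"):
        # Items c and d: one model regardless of estimated cost.
        return 1
    # Assumption: classes not named in SWE-015 are treated as out of scope here.
    raise ValueError(f"SWE-015 does not list software class {software_class!r}")
```

For example, a Class B project estimated at exactly $2 million falls under item "a" in this sketch, because the requirement says "$2 million or more."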

3.2.2

151

The project manager’s software cost estimate(s)

shall satisfy the following conditions:

a. Covers the entire software life cycle.

b. Is based on selected project attributes (e.g.,

programmatic assumptions/constraints,

assessment of the size, functionality, complexity,

criticality, reuse code, modified code, and risk of

the software processes and products).

c. Is based on the cost implications of the

technology to be used and the required

maturation of that technology.

d. Incorporates risk and uncertainty, including

end state risk and threat assessments for

cybersecurity.

e. Includes the cost of the required software

assurance support.

f. Includes other direct costs.

1. Assess the project's software cost estimate(s) to

determine if the stated criteria listed in "a" through

"f" are satisfied.

3.2.3

174

The project manager shall submit software

planning parameters, including size and effort

estimates, milestones, and characteristics, to the

Center measurement repository at the conclusion

of major milestones.

1. Confirm that all the software planning parameters,

including size and effort estimates, milestones, and

characteristics, are submitted to a Center repository.

2. Confirm that all software assurance and software

safety software estimates and planning parameters

are submitted to an organizational repository.

3.3

Software Schedules

3.3.1

016

The project manager shall document and

maintain a software schedule that satisfies the

following conditions:

a. Coordinates with the overall project schedule.

b. Documents the interactions of milestones and

deliverables between software, hardware,

operations, and the rest of the system.

c. Reflects the critical dependencies for software

development activities.

d. Identifies and accounts for dependencies with

other projects and cross-program dependencies.

1. Assess that the software schedule satisfies the

conditions in the requirement.

2. Develop a software assurance schedule, including

software assurance products, audits, reporting, and

reviews.

3.3.2

018

The project manager shall regularly hold reviews

of software schedule activities, status,

performance metrics, and assessment/analysis

results with the project stakeholders and track

issues to resolution.

1. Confirm the generation and distribution of periodic

reports on software schedule activities, metrics, and

status, including reports of software assurance and

software safety schedule activities, metrics, and

status.

2. Confirm closure of any project software schedule

issues.

3.3.3

046

The project manager shall require the software

developer(s) to provide a software schedule for

the project's review, and schedule updates as

requested.

1. Confirm the project's schedules, including the

software assurance’s/software safety’s schedules, are

updated.

3.4

Software Training

3.4.1

017

The project manager shall plan, track, and ensure

project specific software training for project

personnel.

1. Confirm that any project-specific software training

has been planned, tracked, and completed for project

personnel, including software assurance and software

safety personnel.

2. Confirm that software assurance and software

safety personnel have completed the appropriate

software assurance and/or software safety training to

satisfactorily conduct assurance and safety activities.

3.5

Software Classification Assessments

3.5.1

020

The project manager shall classify each system

and subsystem containing software in accordance

with the highest applicable software classification

definitions for Classes A, B, C, D, E, and F

software in Appendix D.

1. Perform a software classification or concur with

the engineering software classification of software

per the descriptions in NPR 7150.2.

3.5.2

176

The project manager shall maintain records of

each software classification determination, each

software Requirements Mapping Matrix, and the

results of each software independent

classification assessment for the life of the

project.

1. Confirm that records of the software Requirements

Mapping Matrix and each software classification are

maintained and updated for the life of the project.

3.6

Software Assurance and Software Independent

Verification & Validation

3.6.1

022

The project manager shall plan and implement

software assurance, software safety and IV&V (if

required) per NASA-STD-8739.8, Software

Assurance and Software Safety Standard.

1. Perform software assurance, software safety, and

IV&V (if required) according to the software

assurance and software safety standard requirements

in NASA-STD-8739.8, Software Assurance and

Software Safety Standard, and the Project’s software

assurance plan.

3.6.2

141

For projects reaching Key Decision Point A, the

program manager shall ensure that software

IV&V is performed on the following categories

of projects:

a. Category 1 projects as defined in NPR 7120.5.

b. Category 2 projects as defined in NPR 7120.5

that have Class A or Class B payload risk

classification per NPR 8705.4, Risk

Classification for NASA Payloads.

c. Projects selected explicitly by the Mission

Directorate Associate Administrator to have

software IV&V.

1. Confirm that IV&V requirements (section 4.4) are

complete on projects required to have IV&V.

3.6.3

131

If software IV&V is required for a project, the

project manager, in consultation with NASA

IV&V, shall ensure an IPEP is developed,

approved, maintained, and executed in

accordance with IV&V requirements in NASA-

STD-8739.8.

1. Confirm that the IV&V Project Execution Plan

(IPEP) exists.

3.6.4

178

If software IV&V is performed on a project, the

project manager shall ensure that IV&V is

provided access to development artifacts,

products, source code, and data required to

perform the IV&V analysis efficiently and

effectively.

1. Confirm that IV&V has access to the software

development artifacts, products, source code, and

data required to perform the IV&V analysis

efficiently and effectively.

3.6.5

179

If software IV&V is performed on a project, the

project manager shall provide responses to IV&V

submitted issues and risks, and track these issues

and risks to closure.

1. Confirm that the project manager responds to

IV&V submitted issues, findings, and risks and that

the project manager tracks IV&V issues, findings,

and risks to closure.

3.7

Safety-Critical and Mission-Critical Software

3.7.1

205

The project manager, in conjunction with the

SMA organization, shall determine if each

software component is considered to be safety-

critical per the criteria defined in NASA-STD-

8739.8.

1. Confirm that the hazard reports or safety data

packages contain all known software contributions or

events where software, either by its action, inaction,

or incorrect action, leads to a hazard.

2. Assess that the hazard reports identify the software

components associated with the system hazards per

the criteria defined in NASA-STD-8739.8, Appendix

A.

3. Assess that hazard analyses (including hazard

reports) identify the software components associated

with the system hazards per the criteria defined in

NASA-STD-8739.8, Appendix A.

4. Confirm that the traceability between software

requirements and hazards with software contributions

exists.

5. Develop and maintain a software safety analysis

throughout the software development life cycle.

3.7.2

023

If a project has safety-critical software, the

project manager shall implement the safety-

critical software requirements contained in

NASA-STD-8739.8.

1. Confirm that the identified safety-critical software

components and data have implemented the safety-

critical software assurance requirements listed in this

standard.

3.7.3

134

If a project has safety-critical software or

mission-critical software, the project manager

shall implement the following items in the

software:

a. The software is initialized, at first start and

restarts, to a known safe state.

b. The software safely transitions between all

predefined known states.

c. Termination performed by software functions

is performed to a known safe state.

d. Operator overrides of software functions

require at least two independent actions by an

operator.

e. Software rejects commands received out of

sequence when execution of those commands out

of sequence can cause a hazard.

f. The software detects inadvertent memory

modification and recovers to a known safe state.

g. The software performs integrity checks on

inputs and outputs to/from the software system.

h. The software performs prerequisite checks

prior to the execution of safety-critical software

commands.

i. No single software event or action is allowed to

initiate an identified hazard.

j. The software responds to an off-nominal

condition within the time needed to prevent a

hazardous event.

k. The software provides error handling.

l. The software can place the system into a safe

state.

1. Analyze the software requirements and the

software design and work with the project to

implement NPR 7150.2 requirement items "a"

through "l."

2. Assess that the source code satisfies the conditions

in the NPR 7150.2 requirement "a" through "l" for

safety-critical and mission-critical software at each

code inspection, test review, safety review, and

project review milestone.

3. Confirm that the values of the safety-critical

loaded data, uplinked data, rules, and scripts that

affect hazardous system behavior have been tested.

4. Analyze the software design to ensure the

following:

a. Use of partitioning or isolation methods in the

design and code,

b. That the design logically isolates the safety-critical

design elements and data from those that are non-

safety-critical.

5. Participate in software reviews affecting safety-

critical software products.

6. Ensure the SWE-134 implementation supports and

is consistent with the system hazard analysis.
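
A few of the SWE-134 items can be illustrated with a toy controller. This is a minimal sketch, not an implementation pattern prescribed by NPR 7150.2 or this standard: the `Controller` class, the three states, and the command names are all hypothetical. It shows initialization and restart to a known safe state (item "a"), rejection of out-of-sequence commands (item "e"), two independent operator actions for an override (item "d"), and a command that always returns the system to a safe state (item "l").

```python
from enum import Enum


class State(Enum):
    SAFE = "safe"
    ARMED = "armed"
    ACTIVE = "active"


# Hypothetical command table: command -> (state required beforehand, resulting state).
# A required state of None means the command is accepted from any state.
TRANSITIONS = {
    "arm": (State.SAFE, State.ARMED),
    "activate": (State.ARMED, State.ACTIVE),
    "safe": (None, State.SAFE),
}


class Controller:
    def __init__(self):
        # Item a: first start places the software in a known safe state.
        self.state = State.SAFE
        self.override_actions = set()

    def restart(self):
        # Item a: restarts also return to the known safe state.
        self.state = State.SAFE
        self.override_actions.clear()

    def command(self, name):
        """Apply a command; return False if it is unknown or out of sequence."""
        if name not in TRANSITIONS:
            return False  # unknown commands are rejected rather than raising
        required, next_state = TRANSITIONS[name]
        if required is not None and self.state is not required:
            return False  # item e: out-of-sequence command rejected
        self.state = next_state
        return True

    def confirm_override(self, action_id):
        """Item d: report True only after two distinct operator actions are recorded."""
        self.override_actions.add(action_id)
        return len(self.override_actions) >= 2
```

In this sketch, sending `activate` before `arm` leaves the controller in the safe state, and repeating the same override action twice does not count as two independent actions.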

3.7.4

219

If a project has safety-critical software, the

project manager shall ensure that there is 100

percent code test coverage using the Modified

Condition/Decision Coverage (MC/DC) criterion

for all identified safety-critical software

components.

1. Confirm that 100% code test coverage is addressed

for all identified safety-critical software components

or that software developers provide a technically

acceptable rationale or a risk assessment explaining

why the test coverage is not possible or why the risk

does not justify the cost of increasing coverage for

the safety-critical code component.
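
To make the MC/DC criterion concrete, the following sketch checks whether a set of test vectors demonstrates, for each condition in a single decision, a pair of tests that differ only in that condition and produce different decision outcomes (the "unique-cause" form of MC/DC). The function name, the example decision, and the test vectors are illustrative assumptions; real coverage measurement is normally done with a qualified coverage tool rather than a script like this.

```python
from itertools import combinations


def achieves_mcdc(decision, n_conditions, tests):
    """Return True if `tests` shows every condition independently affecting the outcome."""
    shown = set()
    for t1, t2 in combinations(tests, 2):
        # Indices of the conditions whose values differ between the two tests.
        diff = [i for i in range(n_conditions) if t1[i] != t2[i]]
        if len(diff) == 1 and decision(*t1) != decision(*t2):
            shown.add(diff[0])
    return len(shown) == n_conditions


def decision(a, b, c):
    # Example decision with three conditions (hypothetical).
    return a and (b or c)


# Four vectors (n + 1 for n = 3 conditions) chosen so each condition has an independence pair.
TESTS = [
    (True, True, False),
    (False, True, False),
    (True, False, False),
    (True, False, True),
]
```

Here the first and second vectors isolate `a`, the first and third isolate `b`, and the third and fourth isolate `c`. A test set such as all-true plus all-false exercises both decision outcomes but does not satisfy the check, because no pair differs in exactly one condition.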

3.7.5

220

If a project has safety-critical software, the

project manager shall ensure all identified safety-

critical software components have a cyclomatic

complexity value of 15 or lower. Any exceedance

shall be reviewed and waived with rationale by

the project manager or technical approval

authority.

1. Perform or analyze Cyclomatic Complexity

metrics on all identified safety-critical software

components.

2. Confirm that all identified safety-critical software

components have a cyclomatic complexity value of

15 or lower. If not, assure that software developers

provide a technically acceptable risk assessment,

accepted by the proper technical authority, explaining

why the cyclomatic complexity value needs to be

higher than 15 and why the software component

cannot be structured to be lower than 15 or why the

cost and risk of reducing the complexity to below 15

are not justified by the risk inherent in modifying the

software component.
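
For orientation, cyclomatic complexity can be approximated as one plus the number of decision points in a component. The sketch below counts decision points in Python source using the standard `ast` module. It is a simplified count for illustration, and the set of node types treated as decision points is an assumption of this sketch; projects would normally rely on an established metrics tool for the language in use.

```python
import ast

THRESHOLD = 15  # value stated in SWE-220


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity as 1 + number of decision points in `source`."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # Each additional operand of `and`/`or` adds one branch.
            complexity += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            # One for the loop plus one per filter clause.
            complexity += 1 + len(node.ifs)
    return complexity


SAMPLE = '''
def check(x, y):
    if x > 0 and y > 0:
        return 1
    for i in range(x):
        if i == y:
            return 2
    return 0
'''

within_limit = cyclomatic_complexity(SAMPLE) <= THRESHOLD
```

The sample function has four decision points (two `if` statements, one `and`, one `for`), so this sketch reports a value of 5, which is within the limit of 15.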

3.8

Automatic Generation of Software Source Code

3.8.1

146

The project manager shall define the approach to

the automatic generation of software source code,

including:

a. Validation and verification of auto-generation

tools.

b. Configuration management of the auto-

generation tools and associated data.

c. Description of the limits and the allowable

scope for the use of the auto-generated software.

d. Verification and validation of auto-generated

source code using the same software standards

and processes as hand-generated code.

e. Monitoring the actual use of auto-generated

source code compared to the planned use.

f. Policies and procedures for making manual

changes to auto-generated source code.

g. Configuration management of the input to the

auto-generation tool, the output of the auto-

generation tool, and modifications made to the

output of the auto-generation tools.

1. Assess that the approach for the auto-generation of

software source code is defined, and that the approach

satisfies at least conditions “a” through “g.”
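One way to support items “f” and “g” is to record digests of the generator input and output at generation time, so that later manual changes to the generated source can be detected and routed through the defined procedure. The sketch below assumes a simple in-memory manifest; the file names, tool version string, and function names are hypothetical, and a real project would typically keep this record in its configuration management system.

```python
import hashlib


def sha256_hex(data):
    """Return the SHA-256 digest of a bytes object as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(tool_version, model_bytes, generated_files):
    """Record the tool version, the input digest, and one digest per output file."""
    return {
        "tool_version": tool_version,
        "model_sha256": sha256_hex(model_bytes),
        "outputs": {name: sha256_hex(content)
                    for name, content in generated_files.items()},
    }


def changed_or_missing(manifest, current_files):
    """List generated files whose content differs from the manifest or is absent."""
    return sorted(
        name
        for name, digest in manifest["outputs"].items()
        if name not in current_files or sha256_hex(current_files[name]) != digest
    )


# Hypothetical generator output captured at generation time.
generated = {"ctrl.c": b"int gain = 2;\n", "ctrl.h": b"extern int gain;\n"}
manifest = build_manifest("gen-1.0", b"model contents", generated)

# The same files after a hand edit to one of them.
edited = {**generated, "ctrl.c": b"int gain = 3;\n"}

print(changed_or_missing(manifest, generated))  # []
print(changed_or_missing(manifest, edited))     # ['ctrl.c']
```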

3.8.2

206

The project manager shall require the software

developers and custom software suppliers to

provide NASA with electronic access to the

models, simulations, and associated data used as

inputs for auto-generation of software.

1. Confirm that NASA, engineering, project, software

assurance, and IV&V have electronic access to the

models, simulations, and associated data used as

inputs for auto-generation of software.

3.9

Software Development Processes and Practices

3.9.2

032

The project manager shall acquire, develop, and

maintain software from an organization with a

non-expired CMMI®-DEV rating as measured by

a CMMI® Institute Certified Lead Appraiser as

follows:

a. For Class A software: CMMI®-DEV Maturity

Level 3 Rating or higher for software.

b. For Class B software (except Class B software

on NASA Class D payloads, as defined in NPR

8705.4): CMMI®-DEV Maturity Level 2 Rating

or higher for software.

1. Confirm that Class A and B software acquired,

developed, and maintained by NASA is produced by

an organization with a non-expired CMMI-DEV

rating, as per the NPR 7150.2 requirement.

2. Assess potential process-related issues, findings, or

risks identified from the CMMI assessment findings.

3. Perform audits on the software development and

software assurance processes.
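The class-dependent rating thresholds in items “a” and “b” can be expressed as a small lookup, as in the sketch below. The function name, parameters, and dates are hypothetical, and the treatment of the expiration date (valid through that date) is an assumption rather than something stated in the requirement.

```python
from datetime import date

# Minimum maturity levels taken from items "a" and "b" of the requirement.
MIN_MATURITY_LEVEL = {"A": 3, "B": 2}


def rating_satisfies_requirement(software_class, maturity_level, expiration,
                                 as_of, on_class_d_payload=False):
    """Return True if a supplier rating meets the minimum for the software class."""
    if software_class == "B" and on_class_d_payload:
        return True  # excepted by item "b"
    required = MIN_MATURITY_LEVEL.get(software_class)
    if required is None:
        return True  # items "a" and "b" set no level for other classes
    # Assumption: a rating counts as non-expired through its expiration date.
    return maturity_level >= required and expiration >= as_of


check_date = date(2024, 1, 15)  # hypothetical audit date
print(rating_satisfies_requirement("A", 3, date(2025, 6, 30), check_date))   # True
print(rating_satisfies_requirement("A", 2, date(2025, 6, 30), check_date))   # False
print(rating_satisfies_requirement("B", 2, date(2023, 12, 31), check_date))  # False
```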

3.10

Software Reuse

3.10.1

147

The project manager shall specify reusability

requirements that apply to its software

development activities to enable future reuse of

the software, including the models, simulations,

and associated data used as inputs for auto-

generation of software, for U.S. Government

purposes.

1. Confirm that the project has considered reusability

for its software development activities.

3.10.2

148

The project manager shall evaluate software for

potential reuse by other projects across NASA

and contribute reuse candidates to the appropriate

NASA internal sharing and reuse software

system. However, if the project manager is not a

civil servant, then a civil servant will pre-approve

all such software contributions; all software

contributions should include, at a minimum, the

following information:

a. Software Title.

b. Software Description.

c. The Civil Servant Software Technical POC for

the software product.

d. The language or languages used to develop the

software.

e. Any third-party code contained therein, and the

record of the requisite license or permission

received from the third party permitting the

Government’s use and any required markings

(e.g., required copyright, author, applicable

license notices within the software code, and the

source of each third-party software component

(e.g., software URL & license URL)), if

applicable.

f. Release notes.

1. Confirm that any project software contributed as a

reuse candidate has the identified information in

items “a” through “f.”
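The confirmation of items “a” through “f” can be sketched as a completeness check on a contribution record. The field names and the record layout below are hypothetical, since the requirement lists the information to provide but not a data format. Because item “e” applies only where third-party code exists, the sketch accepts an empty list for that field as long as it is explicitly stated.

```python
# Hypothetical field names standing in for items "a" through "f".
REQUIRED_FIELDS = (
    "title",              # a. Software Title
    "description",        # b. Software Description
    "civil_servant_poc",  # c. Civil Servant Software Technical POC
    "languages",          # d. Language or languages used
    "third_party_code",   # e. Third-party code and license records
    "release_notes",      # f. Release notes
)


def missing_reuse_fields(record):
    """Return the required fields that are absent or empty in a contribution record."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if field == "third_party_code":
            # An empty list is acceptable, but the field must be stated.
            if value is None:
                missing.append(field)
        elif not value:
            missing.append(field)
    return missing


complete = {
    "title": "Orbit Propagator",
    "description": "Numerical orbit propagation library.",
    "civil_servant_poc": "J. Doe",
    "languages": ["C++"],
    "third_party_code": [],
    "release_notes": "Initial contribution.",
}
incomplete = {**complete, "title": "", "release_notes": None}

print(missing_reuse_fields(complete))    # []
print(missing_reuse_fields(incomplete))  # ['title', 'release_notes']
```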

3.11

Software Cybersecurity

3.11.2

156

The project manager shall perform a software

cybersecurity assessment on the software

components per the Agency security policies and

the project requirements, including risks posed by

the use of COTS, GOTS, MOTS, OSS, or reused

software components.

1. Confirm the project has performed a software

cybersecurity assessment on the software components

per the Agency security policies and the project

requirements, including risks posed by the use of

COTS, GOTS, MOTS, OSS, or reused software

components.

3.11.3

154

The project manager shall identify cybersecurity

risks, along with their mitigations, in flight and

ground software systems, and plan the

mitigations for these systems.

1. Confirm that cybersecurity risks, along with their

mitigations, are identified and managed.

3.11.4

157

The project manager shall implement protections

for software systems with communications

capabilities against unauthorized access per the

requirements contained in the NASA-STD-1006,

Space System Protection Standard.

1. For software products with communications

capabilities, confirm that the software requirements,

software design documentation, and software

implementation address unauthorized access per the

requirements contained in the Space System

Protection Standard, NASA-STD-1006.

3.11.5

159

The project manager shall test the software and

record test results for the required software

cybersecurity mitigation implementations

identified from the security vulnerabilities and

security weaknesses analysis.

1. Confirm that testing is complete for the

cybersecurity mitigation.

2. Assess the quality of the cybersecurity mitigation

implementation testing and the test results.

3.11.6

207

The project manager shall identify, record, and

implement secure coding practices.

1. Assess that the software coding guidelines (e.g.,

coding standards) include secure coding practices.

3.11.7

185

The project manager shall verify that the software

code meets the project’s secure coding standard

by using the results from static analysis tool(s).

1. Analyze the engineering data or perform

independent static code analysis to verify that the

code meets the project’s secure coding standard

requirements.

3.11.8

210

The project manager shall identify software

requirements for the collection, reporting, and

storage of data relating to the detection of

adversarial actions.

1. Confirm that the software requirements exist for

collecting, reporting, and storing data relating to the

detection of adversarial actions.

3.12

Software Bi-Directional Traceability

3.12.1

052

The project manager shall perform, record, and

maintain bi-directional traceability between the

following software elements:

Bi-directional Traceability

Class

A, B,

and C

Class

D

Class

F

Higher-level requirements

to the software requirements

X

X

Software requirements to

the system hazards

X

X

Software requirements to

the software design

components

X

Software design

components to the software

code

X

Software requirements to

the software verification(s)

X

X

X

Software requirements to

the software non-

conformances

X

X

X

1. Confirm that bi-directional traceability has been

completed, recorded, and maintained.

2. Confirm that the software traceability includes

traceability to any hazard that includes software.
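A bi-directional trace between two sets of elements is complete only when every element on each side appears in at least one link. The sketch below shows one way to find gaps in both directions for a single pair, here software requirements and verifications. The identifiers and the link representation are hypothetical, and the same check would be repeated for each pair of elements that applies to the software class.

```python
def trace_gaps(links, sources, targets):
    """Return (sources with no target, targets with no source) for one trace pair.

    links is a collection of (source_id, target_id) tuples.
    """
    linked_sources = {source for source, _ in links}
    linked_targets = {target for _, target in links}
    untraced_sources = sorted(set(sources) - linked_sources)
    orphan_targets = sorted(set(targets) - linked_targets)
    return untraced_sources, orphan_targets


# Hypothetical identifiers for software requirements and verifications.
requirements = ["SRS-1", "SRS-2", "SRS-3"]
verifications = ["TC-1", "TC-2"]
links = [("SRS-1", "TC-1"), ("SRS-2", "TC-1")]

untraced, orphans = trace_gaps(links, requirements, verifications)
print(untraced)  # ['SRS-3']  requirement with no verification
print(orphans)   # ['TC-2']   verification that traces to no requirement
```

An empty result in both directions for every applicable pair is the condition the first task confirms; hazards that include software would be added as a further pair for the second task.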

4

Software Engineering (Life Cycle) Requirements

4.1

Software Requirements