A SYSTEMATIC APPROACH TO PRIORITIZE VULNERABILITIES IN IOT DEPLOYMENTS

A Dissertation

Presented to

The Academic Faculty

By

Omar Alrawi

In Partial Fulfillment

of the Requirements for the Degree

Doctor of Philosophy in the

School of Engineering

Department of Electrical and Computer Engineering

Georgia Institute of Technology

May 2023

© Omar Alrawi 2023

Thesis committee:

Dr. Emmanouil K. Antonakakis

Electrical and Computer Engineering

Georgia Institute of Technology

Dr. Fabian Monrose

Electrical and Computer Engineering

Georgia Institute of Technology

Dr. Douglas Blough

Electrical and Computer Engineering

Georgia Institute of Technology

Dr. Mustaque Ahamad

College of Computing

Georgia Institute of Technology

Dr. Roberto Perdisci

Computer Science

University of Georgia

Dr. Michael Bailey

School of Cybersecurity and Privacy

Georgia Institute of Technology

Date approved: April 18, 2023

ACKNOWLEDGMENTS

First and foremost, I would like to express my profound gratitude to the Almighty for bestowing upon me knowledge and wisdom like that of Prophet David and Solomon, as mentioned in the Quran, chapter 27, verse 15: “And We had certainly given to David and Solomon knowledge, and they said, ‘Praise [is due] to Allah, who has favored us over many of His believing servants’.” I want to thank the Ph.D. committee, whose mentorship, support, and constructive feedback have been instrumental in shaping my research and refining my dissertation. I am grateful for their thoroughness during my defense and for setting high expectations to push me to my full potential. I am deeply grateful to my advisor, Professor Manos Antonakakis, who has been my number-one fan throughout this journey. Your unwavering faith in me and your constant encouragement provided the impetus I needed to persevere through the challenges of completing my Ph.D.

I am indebted to Professor David Mohaisen, who introduced me to research, mentored me, and convinced me to pursue a Ph.D. As a close friend, I have admired your unmatched productivity. I aspire to attain the level of brilliance and dedication that you embody. A heartfelt acknowledgment goes out to Professor Brendan Saltaformaggio, a close friend and mentor. I am very grateful for your willingness to guide me through the intricacies of research and academia. Your generosity in sharing resources, time, and friendship has been a true gift. I owe a special gratitude to Professor Fabian Monrose, who opened my eyes to what it truly means to be a scientist. Your rigorous approach, wisdom, and experience have shaped me as a researcher. I have genuinely enjoyed every moment spent working with you and receiving your invaluable feedback.

To my fellow lab mates, Chaz, Gong, Kevin V, Panos, Rosa, Thanos¹, Thanos², Thomas, Yizheng, and Zane, thank you for your support and camaraderie. A special thanks to Chaz, whose mentorship has been invaluable throughout my journey. I am grateful to the numerous outside collaborators from industry and other academic institutions. Your partnership has been an essential part of my growth and success.

I would also like to express my heartfelt gratitude to my loving mom, sisters, and in-laws, who have been my pillar of strength during these years. Your sacrifices and love allowed me to concentrate on my studies. My sincere appreciation extends to your understanding and support. To my incredible wife, Shireen, and our lovely children, Bayan, Bekr, Belal, and Badr, thank you for your unwavering support, love, and understanding. Your love and patience have sustained me during the most challenging times to achieve this milestone. Thank you, my precious Bayan, for smiling at me from the front-row seat during my first paper presentation and melting my stage fright away. Finally, I am thankful to everyone who has contributed to my journey in big and small ways. This dissertation is not only a testament to my efforts but also a reflection of the collective efforts of those who have touched my life throughout this incredible experience.

Thank you all!

TABLE OF CONTENTS

Acknowledgments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . iii

List of Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

List of Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiv

List of Acronyms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvi

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Chapter 1: Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Thesis and Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1.1 Thesis Statement . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1.2 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.1.3 Security Evaluation of Home-based Internet of Things (IoT) Deployments . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.4 A Longitudinal Security Measurement of Home-based IoT Devices 3

1.1.5 A Large-Scale Analysis of The IoT Malware Lifecycle . . . . . . . 4

1.2 Dissertation Organization . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

Chapter 2: Foundational Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2.1 IoT Security Evaluation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2.1.1 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.1.2 Mobile Application . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.1.3 Cloud Endpoint . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.1.4 Communication . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.2 Malware Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.2.1 Infection Stage . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

2.2.2 Payload Stage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.2.3 Persistence Stage . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.2.4 Capability Stage . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.2.5 Command & Control Stage . . . . . . . . . . . . . . . . . . . . . . 30

2.3 Related Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.3.1 IoT Security Evaluation . . . . . . . . . . . . . . . . . . . . . . . . 32

2.3.2 IoT Malware Analysis . . . . . . . . . . . . . . . . . . . . . . . . 32

Chapter 3: Security Evaluation of Home-based IoT Deployments . . . . . . . . . 34

3.0.1 Security Properties . . . . . . . . . . . . . . . . . . . . . . . . . . 35

3.0.2 Evaluation Scope and Attack Model . . . . . . . . . . . . . . . . . 36

3.1 Security Evaluation Methodology . . . . . . . . . . . . . . . . . . . . . . 36

3.1.1 Testbed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

3.1.2 Evaluation Procedures . . . . . . . . . . . . . . . . . . . . . . . . 45

3.2 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.2.1 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.2.2 Mobile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.2.3 Cloud . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.2.4 Network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.3 Evaluation Cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.3.1 Good: Withings Home . . . . . . . . . . . . . . . . . . . . . . . . 51

3.3.2 Satisfactory: Nest Cam . . . . . . . . . . . . . . . . . . . . . . . . 52

3.3.3 Needs Improvement: MiCasa Verde VeraLite . . . . . . . . . . . . 52

3.4 An Integrated Security Evaluation . . . . . . . . . . . . . . . . . . . . . . 53

3.4.1 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

3.4.2 Mobile App . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

3.4.3 Cloud Endpoints . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.4.4 Network Communication . . . . . . . . . . . . . . . . . . . . . . . 55

3.4.5 Attack Paths . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

3.5 Proposals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

3.5.1 Mitigations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

3.5.2 Stakeholders . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

3.5.3 Recommendations . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Chapter 4: Longitudinal Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 66

4.1 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

4.1.1 Longitudinal Studies of IoT Deployments . . . . . . . . . . . . . . 66

4.1.2 Goals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.1.3 Evaluation Scope . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.2 Methodology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

4.2.1 Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

4.2.2 Data Collection and Analysis . . . . . . . . . . . . . . . . . . . . . 69

4.2.3 Challenges and Limitations . . . . . . . . . . . . . . . . . . . . . . 72

4.3 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.3.1 Study One: The Impact of Updates on Security Posture . . . . . . . 73

4.3.2 Study Two: A Longitudinal Analysis of Devices’ Security Lifecycle 78

4.4 Iterative Security Evaluation . . . . . . . . . . . . . . . . . . . . . . . . . 83

Chapter 5: Large-Scale Analysis of the IoT Malware Lifecycle . . . . . . . . . . 85

5.1 Challenges and Limitations . . . . . . . . . . . . . . . . . . . . . . . . . . 85

5.2 Methodology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

5.2.1 Data Sources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

5.2.2 Analysis Methods . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

5.3 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

5.3.1 Detection and Labeling . . . . . . . . . . . . . . . . . . . . . . . . 95

5.3.2 Infection Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

5.3.3 Payload Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

5.3.4 Persistence Analysis . . . . . . . . . . . . . . . . . . . . . . . . . 99

5.3.5 Capability Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 101

5.3.6 C&C Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

5.4 Case Studies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

5.4.1 Code Reuse and Evolution . . . . . . . . . . . . . . . . . . . . . . 104

5.4.2 Payload Hosting . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

5.5 Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

5.5.1 Similarities and Differences . . . . . . . . . . . . . . . . . . . . . 108

5.5.2 Stakeholders and Defenses . . . . . . . . . . . . . . . . . . . . . . 110

5.6 Using Threat Analysis to Inform Risk Assessment . . . . . . . . . . . . . . 111

5.6.1 Targeted Devices In The Testbed . . . . . . . . . . . . . . . . . . . 112

Chapter 6: Risk Assessment Framework for IoT Deployments . . . . . . . . . . 113

6.1 An Informed Risk Assessment Model . . . . . . . . . . . . . . . . . . . . 113

6.2 Risk Assessment Framework . . . . . . . . . . . . . . . . . . . . . . . . . 114

6.2.1 Overview and Terminology . . . . . . . . . . . . . . . . . . . . . . 114

6.2.2 Threat Model and Attacker Types . . . . . . . . . . . . . . . . . . 116

6.3 Risk Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

6.3.1 Base Risk Score . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

6.3.2 Risk Score Adjustment: Exposure . . . . . . . . . . . . . . . . . . 119

6.3.3 Risk Score Adjustment: Threats . . . . . . . . . . . . . . . . . . . 120

6.3.4 Risk Assessment Example . . . . . . . . . . . . . . . . . . . . . . 121

6.4 Case Study . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

6.4.1 Multiple Devices, One Vendor . . . . . . . . . . . . . . . . . . . . 123

6.4.2 Incorporating Exposure and Attack Weights . . . . . . . . . . . . . 124

Chapter 7: Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

7.1 Risk Assessment and Empirical Data . . . . . . . . . . . . . . . . . . . . . 127

7.1.1 Understanding Attacks and Abuse on IoT Deployments . . . . . . . 128

7.1.2 A Longitudinal Security Evaluation of IoT Cloud Backends . . . . . 129

7.1.3 Understanding Attacks on The IoT Software Supply Chain . . . . . 129

7.1.4 An Automated and Iterative Security Evaluation Framework for LE IoT Protocols . . . . . . . . . . . . . . . . . . . . . . . . 130

7.2 Broader Impact of Empirical Studies . . . . . . . . . . . . . . . . . . . . . 131

7.2.1 Security Evaluation of Home-based IoT Deployments . . . . . . . . 131

7.2.2 Large-Scale Analysis of the IoT Malware Lifecycle . . . . . . . . . 134

7.3 Closing Remarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

LIST OF TABLES

2.1 Systematization of the IoT literature. Each section corresponds to a component of IoT deployment across attack vectors, mitigations, and stakeholders. The ✓ implies the category of attack, mitigation, or stakeholder applies to the discussed literature. . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

2.2 A summary of the proposed comparative framework and definitions for

each component. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.3 A comparison between desktop, mobile, and IoT malware using the proposed framework. . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.1 A summary of the efforts required for the testbed components and evaluation stages. . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

3.2 An overview of the devices used in the evaluation. . . . . . . . . . . . . . . 38

3.3 This table is a summary of each evaluated device per graph component in Figure 3.2. The device section summarizes the number of running services and issues found. The mobile application section summarizes excessive permissions, sensitive data, or incorrect use of cryptographic protocols. The communication category summarizes the susceptibility to MITM attack and the communication channel state as fully encrypted (●), partially encrypted (◐), or not encrypted (○). . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

3.4 Device Evaluation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

3.5 List of devices and their CVEs with CVSS score of Critical and High. . . . 63

3.6 Mobile Application Evaluation. . . . . . . . . . . . . . . . . . . . . . . . . 63

3.7 Cloud Endpoint Evaluation. . . . . . . . . . . . . . . . . . . . . . . . . . . 64

3.8 Communication Evaluation. ✓+ (TLS/SSL) — ✓- (3rd-party recursive DNS) 65

4.1 Device evaluation based on an initial evaluation, baseline evaluation, and

update evaluation. Red cells show an increase for services and issues and

green cells show a decrease in services and issues. . . . . . . . . . . . . . . 71

4.2 Summary of device use of encryption (○ none, ◐ partial, ● full), issues found in SSL/TLS protocol, and vulnerabilities affecting services. Green shows improvement, red shows decline, and yellow shows improvement but poor encryption. . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.3 A summary of issues for baseline evaluation. Green rows show fixed issues

by updates. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

5.1 A summary of documented or publicly available IoT malware analysis platforms. ✗ indicates resource no longer available. . . . . . . . . . . . . . . . 87

5.2 The data sources used for the empirical study. . . . . . . . . . . . . . . . . 89

5.3 A statistical summary of the dataset, metadata, static, and dynamic analysis

grouped by IoT malware’s target architecture. . . . . . . . . . . . . . . . . 94

5.4 Top anti-virus (AV) labels based on reports from VirusTotal. . . . . . . . . 94

5.5 Device categories and their top vulnerabilities that are targeted by IoT malware based on data from Bad Packets. . . . . . . . . . . . . . . . . . . . . 97

5.6 Top exploits found in IoT malware binaries based on static analysis. . . . . 97

5.7 Scanning methods found in IoT malware binaries based on dynamic analysis. 101

5.8 DDoS capabilities found in IoT malware binaries based on static analysis

and leaked source code. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

5.9 Top IoT malware clusters grouped by AV Labels. . . . . . . . . . . . . . . 103

6.1 Example of point assignment for the VeraLite device . . . . . . . . . . . . 122

6.2 A summary of assigned weights to exposure and attack variables . . . . . . 123

6.3 The risk scores for the Belkin WeMo Devices. . . . . . . . . . . . . . . . . 124

6.4 The base risk score and the temporal and threat-weighted risk score for four devices. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

LIST OF FIGURES

2.1 Typical home-based IoT setup. . . . . . . . . . . . . . . . . . . . . . . . . 13

3.1 Single IoT deployment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

3.2 IoT graph model. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

3.3 An overview of the lab architecture. . . . . . . . . . . . . . . . . . . . . . 39

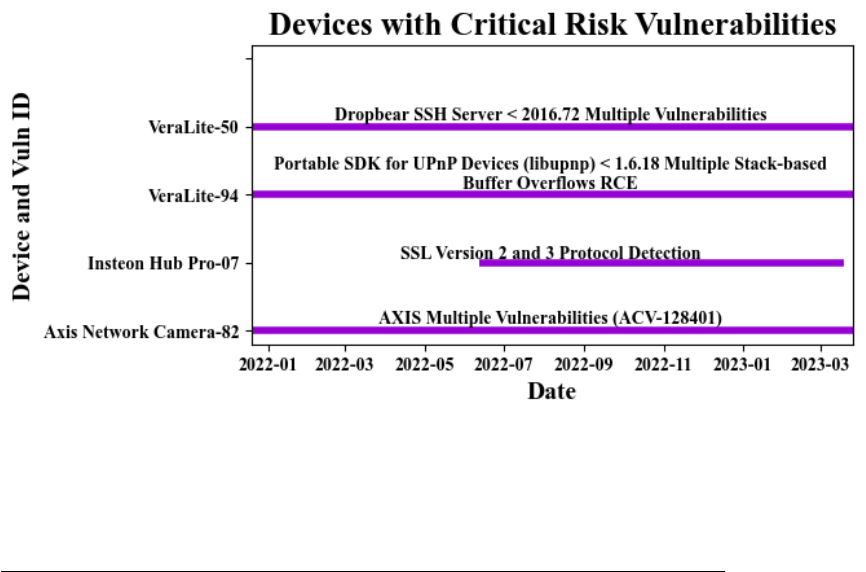

4.1 Summary of critical Common Vulnerability Scoring System (CVSS) vulnerabilities found in testbed. . . . . . . . . . . . . . . . . . . . . . . . . . 78

4.2 Summary of high CVSS vulnerabilities found in testbed. . . . . . . . . . . 79

4.3 Summary of medium CVSS vulnerabilities found in testbed. . . . . . . . . 81

4.4 Summary of low CVSS vulnerabilities found in testbed. . . . . . . . . . . . 83

5.1 The daily volume of files and detected files submitted to VirusTotal in 2019

per platform. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

5.2 An overview of the static and dynamic analysis pipeline. . . . . . . . . . . 90

5.3 The number of AV engines that detect IoT malware per architecture. The

dotted vertical line marks five AVs. . . . . . . . . . . . . . . . . . . . . . . 95

5.4 A timeline of exploits for Mirai variants based on reports from security

researchers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

5.5 DNS measurement of domains for the top IoT malware family clusters

based on the pDNS dataset. . . . . . . . . . . . . . . . . . . . . . . . . . . 104

5.6 Mirai’s faulty evasion code (top) and the fixed code found in newer variants

(bottom). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

LIST OF ACRONYMS

C&C Command and Control

CDN Content Delivery Network

COTS commercial off-the-shelf

CVE Common Vulnerabilities and Exposures

CVSS Common Vulnerability Scoring System

DHCP Dynamic Host Configuration Protocol

DNS Domain Name System

EOL End-of-Life

ICS Industrial Control System

IoT Internet of Things

ISPs Internet Service Providers

LAN Local Area Network

MITM Man-in-the-Middle

NAT Network Address Translation

NIST National Institute of Standards and Technology

OS Operating System

UPnP Universal Plug and Play

VLAN Virtual Local Area Network

SUMMARY

Practitioners must use an informed approach that prioritizes security measures based on risk and cost to improve the security of deployed Internet of Things (IoT) systems. Improving the overall security of IoT is essential for several reasons. First, IoT devices are widely used in various sectors, such as healthcare, transportation, and finance, and often handle sensitive data, making them a prime target for cybercriminals. Second, many IoT devices have limited computing resources, making it challenging to implement robust security measures. This limitation can expose IoT devices to several vulnerabilities, making them easier targets for malicious attacks. Third, many IoT devices are interconnected and communicate with each other, increasing the attack surface for cybercriminals. An attack on one IoT device could compromise the entire network, causing significant harm to the system and its users. Therefore, improving the overall security of IoT deployments is crucial to ensure data privacy, protect against cyberattacks, and maintain the integrity and availability of critical services.

To do so, practitioners require an approach to assess the security of IoT deployments comprehensively, standardize the security assessment across diverse devices, and prioritize security measures for high-risk devices. A comprehensive security evaluation can identify the most significant security threats and vulnerabilities in IoT deployments. This will help practitioners focus on the most critical areas that require the highest priority. Standardizing security assessment will help reduce costs associated with customizing security evaluation for each device and make it easier to compare security across diverse devices. Finally, prioritizing security measures provides a more strategic approach to protecting high-risk devices vulnerable to cyberattacks. Toward improving overall security, this dissertation contributes a systematic and replicable approach that better prioritizes vulnerabilities in IoT deployments.

CHAPTER 1

INTRODUCTION

Insecure IoT deployments can cause severe operational issues for critical internet infrastructure, including downtime, data breaches, network congestion, malware propagation, and control system manipulation [1]. Understanding the risk of deploying IoT systems is complex. For example, operators must enumerate their assets, identify vulnerabilities, correlate cyber threats that target these vulnerabilities, and prioritize their resources to protect the most critical IoT deployments. More problematic, IoT deployments often consist of a mix of legacy and newer devices, which can be challenging to secure. Legacy devices may not have the latest security features and may be more vulnerable to modern threats. Furthermore, patching legacy devices may be difficult or more costly than replacing them. Many IoT deployments are dynamic and evolve through updates, end-of-life events, and changes to their network infrastructure and topology. These changes can leave them misconfigured and vulnerable to cyber threats. These compounding complexities overwhelm operators, and it becomes difficult to decide which security issues in IoT deployments to address first. Therefore, assessing the risk of IoT deployments is essential to prioritizing security measures and involves comprehensive, iterative, and large-scale efforts.

1.1 Thesis and Contributions

1.1.1 Thesis Statement

A comprehensive and iterative vulnerability and threat analysis of IoT deployments improves risk assessment models for prioritizing security measures.

1.1.2 Contributions

Prior to my work, risk assessments for IoT deployments focused on custom security evaluations, lacked iteration, and did not consider internet threats. Prior approaches paint a partial picture of the potentially exploitable security issues that exist in IoT deployments, leaving gaps in the overall risk assessment. This is far from sufficient: modern internet threats evolve and new IoT applications appear at a rapid pace, while custom security assessment techniques cannot keep up. For example, an IoT vacuum cleaner and a home assistant device may both use an embedded Linux operating system, but the software services on the devices will be different. A smart vacuum may expose a web server that accepts commands locally from a mobile application to initiate cleaning tasks, while a voice assistant may accept commands from several remote cloud endpoints to service requests from users. Each requires a customized security evaluation approach for its type of software services and deployment. In addition, as the devices age and their threats evolve, the device risk levels change over time. Several large-scale studies have applied theory-based approaches to simulate these complexities, but they do not accurately represent real-world IoT deployments.

My research aims to break from traditional approaches and instead develop a systematic and replicable approach based on end-host binary program analysis and network vulnerability analysis for real-world IoT deployments. In this dissertation, I present three studies that combine binary program analysis and network vulnerability analysis to investigate the fundamental security problems that contribute to high-risk IoT deployments. Specifically, this body of work is built upon systematic methodologies that allow others to reproduce, verify, and extend it. The overall goal of this dissertation is to combine real-world security evaluation observations and malware threat analysis to quantify the risk and prioritize security measures for IoT deployments. Below, I briefly introduce these studies and their contributions.

1.1.3 Security Evaluation of Home-based IoT Deployments

This work proposes a component-based security evaluation framework that provides a comprehensive and standardized assessment method for IoT deployments. The framework decomposes complex IoT deployments into manageable core components and uncovers the breadth of the attack surface. Leveraging this framework, we build a testbed of 45 diverse home-based IoT devices and assess their security properties for device services, cloud services, companion mobile applications, and network protocols. We limit the assessment scope to home-based IoT devices because they are readily available and the experimental setup can be reproduced. The results show that an IoT deployment can have multiple components, each with its own vulnerabilities. By systematically combining end-host binary program analysis and vulnerability analysis, we discover that certain device types or vendors can have disproportionate vulnerabilities affecting specific components. Holistically studying these vulnerabilities is essential to understand the potential risks associated with an IoT deployment.
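As a rough illustration of the component-based decomposition described above, the following minimal sketch records findings per component of a single deployment. The class names, component labels, and example findings are hypothetical and chosen only for illustration; they are not the dissertation's tooling or data.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Component(Enum):
    # The four components assessed in the evaluation (labels are illustrative).
    DEVICE = "device"
    MOBILE_APP = "mobile application"
    CLOUD = "cloud endpoint"
    NETWORK = "network communication"


@dataclass
class Deployment:
    name: str
    # Findings recorded per component; descriptions are free-form placeholders.
    findings: Dict[Component, List[str]] = field(
        default_factory=lambda: {c: [] for c in Component}
    )

    def add_finding(self, component: Component, description: str) -> None:
        self.findings[component].append(description)

    def attack_surface(self) -> Dict[str, int]:
        """Count the recorded findings for each component."""
        return {c.value: len(items) for c, items in self.findings.items()}


# Hypothetical example deployment with two made-up findings.
camera = Deployment("example-camera")
camera.add_finding(Component.DEVICE, "unauthenticated local web service")
camera.add_finding(Component.NETWORK, "unencrypted channel to cloud")
print(camera.attack_surface())
```

Keeping findings keyed by component makes it straightforward to compare devices or vendors component by component, which is the kind of comparison the evaluation relies on.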

1.1.4 A Longitudinal Security Measurement of Home-based IoT Devices

A systematic security evaluation must consider the temporal component of an IoT deployment by incorporating an iterative approach that can account for vulnerability changes over time. In this study, we conduct longitudinal assessments over 13 months to understand how security flaws evolve throughout the device’s lifecycle. The longitudinal component enables a more comprehensive understanding of vulnerabilities as they arise and uncovers trends that are otherwise impossible to observe. This study reinforces the replicable approach proposed in the first work and contributes new findings. The results reveal additional vulnerabilities missed in the initial one-time security evaluation. Moreover, the findings evaluate the effectiveness of security patching for different device types and vendors. Lastly, the results highlight groups of vulnerability types by severity and their persistence throughout the lifecycle of an IoT device, which can inform the risk analysis and prioritization of security measures.

1.1.5 A Large-Scale Analysis of The IoT Malware Lifecycle

Toward providing a more accurate risk assessment of IoT deployments, we study the malware threats that target vulnerable IoT deployments. In this work, we investigate attacks on IoT systems at scale by empirically studying the lifecycle of IoT malware and comparing it with traditional malware that targets desktop and mobile platforms. We build an extensible binary program analysis platform for six Linux-based system architectures and characterize IoT malware infection tactics, their infection payloads, and their capabilities. We leverage the binary analysis platform to carry out a large-scale measurement of more than 166K Linux-based IoT malware samples collected over a year. The results contribute new observations about the IoT malware lifecycle, including device targeting, persistence techniques, abuse tactics, and Command and Control (C&C) communication operation. Specifically, the results identify areas of an IoT deployment that may be particularly vulnerable to attacks. Furthermore, the results reveal trends similar to the development of traditional malware for desktop and mobile platforms, which inform the potential evolution of future threats. These findings help more accurately characterize the threats targeting IoT deployments and provide a more informed risk assessment. Lastly, we make our binary analysis platform and the malware dataset publicly available for the research community to encourage reproducibility and validation.

1.2 Dissertation Organization

The dissertation is organized as follows:

• Chapter 1 presents the challenges in risk assessment and security measure prioritization for IoT deployments. Next, the chapter briefly highlights the gaps in prior works, which do not provide a holistic understanding of risks in IoT deployments. Finally, the chapter overviews the dissertation’s contribution and describes three works that build upon one another to provide a better risk assessment and security measure prioritization of IoT deployments. The chapter closes by providing an outline of the dissertation.

• Chapter 2 provides the foundational work required to design and evaluate systematic and replicable methods for security evaluation and malware analysis of IoT deployments. First, the chapter systematizes prior work in the security evaluation of home-based IoT deployments. The findings guide the design and inception of a novel security evaluation framework, which later chapters use. Next, the chapter systematizes malware lifecycle studies for traditional platforms like desktop and mobile. The findings inform the design of a systematic and comparative lifecycle framework that is tailored to modern IoT malware and encompasses traditional malware threats.

• Chapter 3 uses the derived framework from the foundational chapter to evaluate a large-scale and diverse testbed of home-based IoT devices. First, the chapter describes the security evaluation framework and its components. We formalize the framework into a model that allows others to replicate it and generalize it to different IoT deployments. Next, the chapter presents the testbed design and engineering efforts, including their challenges. This includes the lab setup, evaluation tools, automation, data collection, and analysis. Finally, we present the results and notable findings that highlight the utility of our framework. Specifically, we provide three case studies based on our large-scale evaluation showcasing good, satisfactory, and poor IoT deployments.

• Chapter 4 presents a temporal security evaluation using the framework from the

previous chapter to reinforce reproducibility and uncover new vulnerabilities as they

arise. Specifically, we evaluate the security of the device component from the home-

based IoT testbed. We describe our experimental setup and data collection using

the automated tools built by our first study. Finally, we present the results showing

changes in the vulnerabilities that impact IoT deployments and uncover insightful

observations about vendors, device types, and severity of vulnerabilities. We then

show how these findings impact the results from the initial security evaluation using

two case studies, which inform the risk modeling.

• Chapter 5 presents a large-scale study of the IoT malware lifecycle. Using the

framework derived in the foundational chapter, we empirically evaluate how IoT

malware infects, persists, abuses, and communicates on IoT devices. Next, we use the

findings to inform the risk of attackers targeting vulnerabilities found in IoT deploy-

ments. Moreover, we compare our findings with traditional malware and discuss the

future evolution of IoT malware, which helps prioritize security measures and more

accurately characterizes risk.

• Chapter 6 combines the empirical results and proposes a modular risk-scoring model.

We derive a simplified risk assessment approach based on the Common Vulnerability

Scoring System (CVSS), which the National Institute of Standards and Technology

(NIST) uses in its National Vulnerability Database. However, unlike CVSS, the scoring methodology provides a

modular risk score that incorporates the components, time, and threats targeting IoT

deployments. We demonstrate the risk assessment by presenting two case studies.

Both case studies empirically show a more accurate risk characterization than less

informed approaches. Together, the modular scoring and holistic approach provide a

better prioritization of security measures.

• Chapter 7 summarizes the key findings from each study and lays out future work

for improving the overall risk analysis process. Specifically, we highlight additional

studies that would provide a more in-depth analysis of the security and threats in IoT

deployments. These studies include a longitudinal study into how attackers co-opt

and abuse infected IoT deployments, a large-scale analysis of IoT cloud backends

availability and security, a comprehensive study of the third-party software supply-

CHAPTER 2

FOUNDATIONAL WORK

This chapter establishes the foundation of prior knowledge to which the dissertation will

contribute. Specifically, we present two systematizations. The first systematization ex-

amines the security evaluation literature for attacks and defenses against smart-home IoT

systems. The second systematization looks at malware threats for traditional platforms like

desktop and mobile to compare to IoT malware. Finally, we present a related work section

differentiating the contribution of this dissertation from prior efforts. The systematized

literature is chosen based on the following criteria:

• Merit: The work is unique and among the first to explore a given security predica-

ment.

• Scope: The work focuses on the security (offensive and defensive) of home-based

IoT systems or malware analysis.

• Impact: The work is regarded as significant based on the number of citations.

• Disruption: The work uncovers a new area the community is investigating.

2.1 IoT Security Evaluation

We noticed four broad categories of approaches as we surveyed the literature for security

evaluation of IoT deployments. These approaches include security assessment of IoT de-

vices through device services, cloud backends, companion mobile apps, and network com-

munication. Using the same differentiae, we organize the literature based on those four IoT

components: the device, the cloud backend, the companion mobile application, and the net-

work communication. This organization allows us to understand each component’s attack

techniques, proposed mitigations, and stakeholder responsibilities. Table 2.1 presents an

overview of the systematized work and their corresponding subsections where we discuss

the literature in detail. The component classification highlights the focus of the work while

the attack vectors, mitigations, and stakeholders identify the approach. The systematization

highlights representative work; hence it does not provide an all-encompassing reference to

every related work.

2.1.1 Device

Most of the home-based IoT research focuses on the device because the device component

is the cornerstone of an IoT deployment.

Attack Vector

Several works ([2, 3, 4, 5]) explored IoT device configuration insecurities. Barnes [2],

building on the findings of Clinton et al. [3], demonstrated how exposed hardware pins on

a device allowed him to gain privileged access and spy on the end-users. Insecure configurations

combined with weak or missing authentication can exacerbate the problem, as shown

by Chapman [6] and Rodrigues [7]. Weak or missing authentication in running services is

a key contributor to several documented attacks [8, 9, 10, 11]. These attacks demonstrate

that device setup and configuration is an important process that the vendor must consider

and evaluate for security flaws. Vendors should enforce strict authentication policies and

require end-users to configure the device before allowing it to operate.

Table 2.1: Systematization of the IoT literature. Each section corresponds to a component

of an IoT deployment across attack vectors, mitigations, and stakeholders. A ✓ indicates that the

category of attack, mitigation, or stakeholder applies to the discussed literature.

Attack Vector Mitigations Stakeholders

Component Ref

Vuln. Services Weak Auth Default Config Patching Framework Vendor End User

Ur13 [4] ✓ ✓ ✓

Costi14 [12] ✓ ✓ ✓

Chapm14 [6] ✓ ✓ ✓ ✓

Kaval14 [11] ✓ ✓ ✓ ✓ ✓ ✓

Wuess15 [5] ✓ ✓ ✓

Rodri15 [7] ✓ ✓ ✓ ✓

Lodge16 [13] ✓ ✓ ✓

Ike16 [3] ✓ ✓ ✓

Franc16 [14] ✓ ✓ ✓

O’Fly16 [15] - - - - - - - - - - - - - -

Ferna16 [16] ✓ ✓ ✓

Max16 [8] ✓ ✓ ✓ ✓ ✓

FlowF16 [17] ✓ ✓ ✓ ✓ ✓

Oberm16 [10] ✓ ✓ ✓ ✓ ✓

Barne17 [2] ✓ ✓ ✓

Herna17 [18] ✓ ✓ ✓

Morge17 [19] ✓ ✓ ✓

Ferna17 [20] ✓ ✓ ✓ ✓

Ronen17 [21] ✓ ✓ ✓

Dolph17 [22] ✓ ✓ ✓

Tian17 [9] ✓ ✓ ✓ ✓ ✓ ✓

Device

subsection 2.1.1

Wang18 [23] - - - - - - ✓ ✓

Permissions Programming Data Protection

Barre10 [24] ✓ ✓ ✓

Au12 [25] ✓ - - - - ✓ ✓

Egele13 [26] ✓ ✓ ✓ ✓

Vienn14 [27] ✓ ✓ - - - - - - - -

Max16 [8] ✓ ✓ ✓ ✓

Sivar16 [28] ✓ ✓ ✓ ✓ ✓

Demet17 [29] ✓ ✓ ✓ ✓

Mobile

Application

subsection 2.1.2

IoTFu18 [30] ✓ - - - - ✓

Vuln. Services Weak Auth Encryption

Max16 [8] ✓ ✓ ✓ ✓

Oberm16 [10] ✓ ✓ ✓ ✓

Nandi16 [31] ✓ ✓ ✓

Blaic16 [32] ✓ ✓ ✓ ✓ ✓

Wilso17 [33] ✓ ✓ ✓ ✓

Surba17 [34] ✓ - - - - ✓ ✓

Cloud

Endpoint

subsection 2.1.3

DTAP18 [35] ✓ ✓ ✓ ✓ ✓ ✓

Encryption MITM

BEAST11 [36] ✓ ✓ ✓

Garci11 [37] ✓ ✓ ✓ ✓ ✓

LUCKY13 [38] ✓ ✓ ✓

Ryan13 [39] ✓ ✓ - - - - - - - -

Foula13 [40] ✓ ✓ - - - - - - - -

Alfar13 [41] ✓ ✓ ✓

Selvi14 [42] ✓ ✓ ✓

POODL14 [43] ✓ ✓ ✓

FREAK15 [44] ✓ ✓ ✓

CRIME15 [45] ✓ ✓ ✓

SMACK15 [46] ✓ ✓ ✓ ✓

Adria15 [47] ✓ ✓ ✓ ✓

Zilln15 [48] ✓ ✓ - - - - - - - -

DROWN16 [49] ✓ ✓ ✓ ✓

Jasek16 [50] ✓ ✓ ✓

Kinti16 [51] - - - - ✓ ✓

Aptho17 [52] ✓ ✓ ✓

Communication

subsection 2.1.4

Wood17 [53] ✓ ✓ ✓

Max [8] assessed the security of the August Smart Lock and found that weak authentica-

tion and insecure default configuration broke the security of the lock. He found hard-coded

credentials and debug configurations that allow modification and introspection of the lock.

The work of Obermaier et al. [10] on cloud-based cameras found that although the device

had what appeared to be a strong password (36 alphanumeric characters and symbols),

the password was the MAC address of the camera reversed and Base64 encoded. Kavalaris

et al. [11] showed that the Sonos device runs undocumented and unauthenticated services

on high ports allowing LAN clients to fully control the device. The Sonos device was sus-

ceptible to unauthorized device pairing due to the lack of authentication. SmartAuth [9]

found that the authentication problem also manifests itself in the IoT application platforms

through over-privileged applications. Device pairing establishes a trusted channel between

a client and their device. Further, IoT hubs bridge low-energy (LE) devices to IP networks, which have

a pre-established trust relationship. An attacker could exploit this pairing process to circumvent

the device or use it as a pivot point.
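
To illustrate why the camera password described above is weak despite its length, the following Python sketch derives a password from a MAC address in the way the text describes. The input format (colon-separated address, character-wise reversal), the function name, and the example address are assumptions made for illustration and are not taken from [10], so the output length need not match the 36 characters reported there.

```python
import base64


def derive_password(mac: str) -> str:
    """Hypothetical derivation: reverse the MAC string, then Base64-encode it."""
    reversed_mac = mac[::-1]  # character-wise reversal (an assumption)
    return base64.b64encode(reversed_mac.encode("ascii")).decode("ascii")


# A MAC address is visible to anyone on the local network, so an attacker
# who knows the scheme can recompute the password without guessing.
example_mac = "00:1A:2B:3C:4D:5E"  # made-up address
print(derive_password(example_mac))
```

Under these assumptions, the password carries no more secrecy than the MAC address itself, regardless of how random the encoded string looks.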

IoT application platforms expose a permission-based model to allow third-party applications

to run. Fernandes et al. [16, 17, 20] showed how implicit trust in third-party

applications can have major implications for the security of the device. There are many

subcomponents within the device’s platform, which can make securing the device difficult.

Many vendors have good practices in place to ensure secure authentication and secure de-

fault configurations (as demonstrated by O’Flynn [15]), but core device services can suffer

from side-channel information leakage. Ronen et al. [21] showed that although the Philips

Hue device was reasonably secure, they were able to extract the master encryption key

through a side-channel attack and combine it with a vulnerability found in the communica-

tion protocol, which resulted in a wormable exploit.

Flaws in firmware allow attackers to steal WiFi credentials [13], turn smart thermostats

into spy gadgets [18], ransom them [14], run arbitrary commands on smart TVs [19], and

control home assistant devices covertly [22]. Costin et al. [12] conducted a large-scale study

on firmware analysis and found an array of flaws. The literature showed that device security

requires defensive approaches to secure side channels, firmware, and hardware. The

toolchain for software and hardware development has a well-defined secure development

process that vendors must utilize.

Mitigations

To address vulnerable services, misconfiguration, and weak authentication, vendors patch

through device updates, while inherent design flaws in IoT platforms are mitigated through

new frameworks. Wang et al. [23] proposed a provenance-based framework that aggregates

device activities across a deployment to detect errors and malicious activities. SmartAuth

[9] is a framework that identifies required permissions for IoT applications running

on platforms like SmartThings and Apple Home. FlowFence [17] is a framework that splits

application code into sensitive and non-sensitive modules and orchestrates the execution

through opaque handlers. This approach burdens developers because they must be mindful

of what code operates on sensitive and non-sensitive data. Furthermore, researchers can

adapt techniques found in mobile application frameworks to address IoT platform insecu-

rities.

Stakeholders

Table 2.1 shows that the main stakeholder is the vendor. Vendors are responsible for patch-

ing and updating vulnerable devices but can delegate some of the responsibilities to users

through configurations. For example, users can mitigate insecurities by disabling prob-

lematic services on the device. SmartAuth [9] provides a derived authentication approach

for applications on the device, but the implementation must be done by the vendor. Users

gain control by having a choice about what permissions to authorize for third-party appli-

cations. Kavalaris et al. [11] showed how services that the Sonos device exposes create

a security risk. Users can mitigate this risk through network segmentation, but it requires

some technical expertise.

[Figure 2.1: Typical home-based IoT setup. Labeled components: IoT hub, mobile apps, smart TV, LE devices, and cloud endpoints. Labeled demarcations: vendor and vendor/user.]

Not many devices allow users to fully configure running services or even disable them

unless they have privileged access. Based on all the proposed mitigations, end-users can

manage configuration or network segmentation residing on the home demarcation side as

shown in Figure 2.1. End-users do not have much control and often are given a minimalistic

interface, which limits the mitigation of vulnerable services. Vendors, on the other hand,

bear the responsibility for keeping the device up to date.

Take Away

The literature addresses some aspects of device security. Devices have many components

that contribute to their overall security, like the platform permissions, unauthenticated services,

insecure configurations, and software and hardware bugs. Further, these weaknesses are amplified

when combined. Device security is not purely a software concern; vulnerabilities also manifest

in hardware and side channels. Embedded Linux is found in many of the

devices, but there is no secure open IoT platform, which can incorporate newly proposed

frameworks [23, 17, 9] by the community.

System patching addresses most of the vulnerabilities. The patching process is not

perfect [18] and can be improved by good practices implemented in other areas of com-

puting [54]. The end-users have almost no control or visibility into the operation of the

device. Securely providing health telemetry and fine-grained configuration parameters can

empower users to mitigate immediate risks. Users can deploy the device in many ways that

go beyond the vendor’s permissive assumptions, hence vendors should assume the device

is Internet-facing when building security measures.

General-purpose computing systems that are publicly accessible face similar problems

when they run vulnerable services or use weak authentication (e.g., SSH with a guessable

password). Adapting techniques from secure platforms and operating systems will

improve the security posture of many IoT devices.

2.1.2 Mobile Application

Many of the home-based IoT devices have a companion mobile application to control,

configure, and interface with the device. Mobile applications can be leveraged as an attack

surface against IoT deployments.

Attack Vector

Acar et al. [55] identified five different areas of Android mobile application issues, namely

permission evolution, permission revolution, webification, programming-induced leakage,

and software distribution. We adapted Acar’s approach and identified three major classes

of insecurities that affect IoT devices: over-privilege (permissions [24, 25]), programming

errors (programming [26]), and hard-coded sensitive information (data protection [27]).

Max [8] showed how programming errors leak sensitive information about the device and

the cloud endpoint. Max used the sensitive information to dump credentials, escalate priv-

ileges, and circumvent the security of the August Smart Lock. Apart from Max’s work,

there are no direct attacks leveraging the mobile application to circumvent an IoT device.

Chen et al. [30] presented IoTFuzzer, which instruments the mobile application within an

IoT deployment to find bugs on the IoT device. Chen’s approach is unique and leverages

the semantics that the vendor programmed into the application. Although there are no reports

of this technique used in the wild, theoretically an attacker can use the same approach

to escalate privilege on an IoT device. Sivaraman et al. [28] showed how a mobile appli-

cation can be used on a local network to collect information about available home devices

and then reconfigure the router/modem firewall rules to make the devices Internet facing.

Hanguard [29] showed how permissive security assumptions by vendors about the LAN

can expose an IoT device. Companion mobile applications are an entry point to the device

and vendors often assume that the deployment network is trusted and secure. These as-

sumptions can have grave effects on the security of the device especially when devices rely

on unauthenticated services or unencrypted communications.

Mitigation

Hanguard [29] proposed a user-space mobile application that interfaces with the router to

control access through role-based access control (RBAC). Hanguard’s approach will prevent

the attack discussed by Sivaraman et al. [28] but cannot stop attacks from a compromised

companion application. Securing the mobile application by adhering to best practices

discussed in PScout [25], Barrera et al. [24], Egele et al. [26], and Viennot et al. [27]

reduces the attack surface. Unfortunately, as Viennot et al. [27] showed, a large portion

of the applications in the Google Play Store contain issues relating to permissions, pro-

gramming errors, and information leakage. Mobile application platforms are mature and

have built-in security facilities to promote good practices. Developers and vendors should

adhere to best practices and audit their mobile applications periodically.
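
As one way to picture a periodic audit for hard-coded sensitive information, the following Python sketch scans source text for a few suspicious patterns. The patterns, function name, and sample snippet are illustrative assumptions and do not reproduce the methodology of any cited work; a real audit would rely on broader, tuned rule sets and on the platform's own analysis tools.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive rule set).
SECRET_PATTERNS = {
    "password assignment": re.compile(r"""(?i)\bpass(?:word|wd)?\s*[=:]\s*["'][^"']{4,}["']"""),
    "API key assignment": re.compile(r"""(?i)\bapi[_-]?key\s*[=:]\s*["'][^"']{8,}["']"""),
    "private key block": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for each suspicious line."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


# Made-up snippet standing in for decompiled application code.
sample = '''
String host = "api.example.com";
String password = "hunter2-debug";
String apiKey = "0123456789abcdef";
'''
for lineno, name in find_hardcoded_secrets(sample):
    print(f"line {lineno}: possible {name}")
```

A scan like this only flags candidates for manual review; it cannot tell a test fixture from a production credential.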

Stakeholders

The mobile application component relies on both the user and the vendor. This is partly due

to the permission model that most mobile platforms provide to end-users. Hanguard [29]

provides the user with a system to deploy inside the local network through routing rules

(user demarcation in Figure 2.1), which does not involve the vendor. Sivaraman et al. [28]

propose that users should be vigilant when running mobile applications on their networks

and only use authorized stores (Google Play, Apple App Store, etc.). The vendors must

address programming errors and secure information storage through updates. Vendors must

familiarize themselves with the mobile platforms to deploy secure applications or use a

reputable third-party developer to provide secure development expertise.

Take Away

The work of Acar et al. [55] showed the maturity of the mobile application security field.

An inherent trust is given to mobile applications, which in many cases control core com-

ponents of an IoT device or a cloud service. Max [8] and IoTFuzzer [30] demonstrated

how to abuse the implicit trust between mobile applications and IoT devices or cloud ser-

vices. IoT vendors and developers should adhere to platform development guidelines and

leverage security features to ensure proper deployments. Limiting mobile application ac-

cess to the device through fine-grained controls is a promising direction that can reduce the

attack impact. Lastly, Hanguard’s [29] approach should be further investigated to provide

end-users with control to mitigate risks.

2.1.3 Cloud Endpoint

Cloud endpoints are the Internet components of the IoT deployment, and in a sense, they

define what IoT is. They provide core services like remote administration, alerts, and digital

content. The IoT devices and their mobile applications trust these cloud endpoints, which

gives adversaries an additional attack point.

Attack Vector

The attack by Max [8] is a great example that touches on all components of the IoT ecosystem.

Max discovered an insecure application programming interface (API) on the cloud

endpoint for the August Smart Lock, which allowed a guest account to be escalated to an administrator

account. Blaich [32] audited the Wi-Fi Barbie doll for various vulnerabilities and found

that the cloud endpoints did not authenticate firmware downloads, had multiple cross-site

scripting vulnerabilities, allowed username enumeration, had no brute-force limiting, and

issued never-expiring cookies. Obermaier et al. [10] audited the cloud endpoints of surveillance

cameras and showed that an attacker can inject footage, trigger false alarms, and carry

out a denial-of-service attack against the camera system. These attacks were possible due

to vulnerabilities introduced in the configuration of the infrastructure, vulnerable services,

and insecure APIs. Zuo et al. [56] leveraged client-to-cloud trust to implement AutoForge,

which forges requests from the mobile applications to the cloud endpoints enabling pass-

word brute-forcing, password probing, and security access token hijacking. Implicit trust

between IoT components is sensitive and vendors must verify endpoints before allowing

them unfettered access.

IoT integration platforms, like IFTTT [57], automate.io [58], and CloudWork [59], are

third-party cloud endpoints. They use OAuth tokens to connect multiple IoT devices to

perform user programmed tasks. Surbatovich et al. [34] studied the security implications

on privacy and integrity when using recipes¹ and showed that some recipes can allow

attackers to distribute malware and carry out denial-of-service attacks. Nandi et al. [31]

reported a similar type of user-induced programming error through trigger-action program-

ming (TAP), which led to an incorrect event triggering or a lack thereof. Fernandes et

al. [35] pointed out that the cloud integration platforms can be compromised, which might

expose the user’s OAuth tokens publicly. These scenarios are likely to happen based on

recent platform compromises like Equifax [60] and Orbitz [61]. The work of Wilson et

al. [33] did not identify an attack vector on the IoT ecosystem, but it studied the privacy

and trust that users place with IoT vendors. These attacks show that cloud integration ser-

vices lack fine-grained control and they leak private and sensitive information that can lead

to a breach.

¹ Recipes are high-level programmable instructions that are used to trigger IoT device actions based on the

occurrence of an event.

Mitigation

To mitigate these attacks, Max [8], Obermaier et al. [10], and Blaich [32] recommend

proper configuration and secure authentication mechanisms. Surbatovich et al. [34] offered

a framework to analyze the cloud platform recipes, which motivated later work. Nandi et

al. [31] proposed an automatic trigger generation system that analyzes user-defined triggers

for errors and rectifies them by rewriting the triggers. Fernandes et al. [35] proposed the

use of a decentralized framework for trigger-action programmable platforms called DTAP.

The DTAP platform is a shim between the IoT cloud platform and the user’s local network

and brokers access to the IoT devices based on transfer tokens (XTokens). The mitigation

techniques include securing cloud endpoints, offering tools to analyze third-party integra-

tion services, assisting developers in generating correct triggers for their applications, and

providing short-lived tokens with constrained access to a device’s functions.
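
The idea of a short-lived token with constrained access can be sketched in Python as follows. This is a generic HMAC-based illustration under our own assumptions (the key, claim names, and both function names are hypothetical); it is not the XToken protocol of DTAP [35], whose details are not given here.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET_KEY = b"demo-key-not-for-production"  # hypothetical shared secret


def issue_token(device: str, action: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Issue a token bound to one device action that expires after ttl_seconds."""
    issued = time.time() if now is None else now
    claims = json.dumps(
        {"device": device, "action": action, "exp": issued + ttl_seconds},
        sort_keys=True,
    )
    tag = hmac.new(SECRET_KEY, claims.encode(), hashlib.sha256).hexdigest()
    return f"{claims}|{tag}"


def is_authorized(token: str, device: str, action: str,
                  now: Optional[float] = None) -> bool:
    """Accept the token only if it is authentic, unexpired, and scoped to this action."""
    claims, _, tag = token.rpartition("|")
    expected = hmac.new(SECRET_KEY, claims.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        return False  # forged or corrupted token
    data = json.loads(claims)
    current = time.time() if now is None else now
    return (data["device"] == device
            and data["action"] == action
            and current < data["exp"])


token = issue_token("front-door-lock", "unlock", ttl_seconds=60)
print(is_authorized(token, "front-door-lock", "unlock"))    # same scope
print(is_authorized(token, "front-door-lock", "add_user"))  # different scope
```

Binding the token to a single action and a short lifetime limits what a leaked token can do, which is the property the mitigation relies on.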

Somewhat related, Wilson et al. [33] looked at empowering IoT users that trust the

vendors with their private data. The technique is known as TLS-Rotate and Release (TLS-

RaR), which requires an auditor entity collecting TLS packets to request the session key

from the vendor to decrypt the communication. The vendor then rotates the TLS session

key and discloses to the auditor the prior key to decrypt the collected TLS packets. The

audit system must be deployed on the end-user demarcation side to collect traffic for the

devices that users wish to audit.

Stakeholders

The vendor controls the cloud endpoints and the users do not have a way to inspect or con-

trol what their device sends to the cloud endpoints [53, 62]. Additionally, third-party cloud

providers offer infrastructure-as-a-service (IaaS) and platform-as-a-service (PaaS) to IoT

deployments. Many of the IoT devices rely on cloud-based infrastructure to run their services.

Unplanned outages [63], infrastructure compromises [64], and intentional attacks [65]

impact the deployment of the cloud endpoints. When it comes to cloud infrastructure configuration

and API implementation ([8, 32, 10]), the vendor is responsible for the mitigation

of the vulnerabilities.

Newer IoT devices are taking advantage of managed IoT platforms, which offload much

of the security responsibilities to the public cloud providers. On the other hand, the majority

of the proposed frameworks ([31, 35, 33]) are user-centric and give end-users visibility and

control in a limited way. The work by Fernandes et al. [35] and Wilson et al. [33] is a

hybrid approach and can be deployed jointly by vendors and users or by a trusted third-

party. As for cloud providers, the vendor can mitigate their exposure by diversifying and

oversubscribing to different cloud providers.

Take Away

IoT cloud endpoints exhibit insecure cloud deployment through configuration and API im-

plementation, but these vulnerabilities can be addressed with readily available tools for

cloud security. Additional measurements are needed to further understand the extent of

these misconfigurations in cloud deployments. The Censys Project [66] is a valuable source

of data that can allow researchers to historically analyze IoT infrastructure. Further, the

IoT cloud integration platforms introduce new challenges that mimic classical work like

Decentralized Trust Management [67]. Integration cloud platforms offer users a way to

chain multiple IoT devices to execute tasks based on an event, and they suffer from over-privileged

recipes and privacy implications, as demonstrated in the work of Surbatovich

et al. [34].

Fernandes et al. [35] utilized prior techniques for the IoT cloud platforms by applying

trust management systems and token authentication protocols to the IoT platforms. Vendors

are adopting managed IoT cloud platforms, which shifts the security responsibility to cloud

providers like AWS IoT Core [68], Azure IoT Hub [69], and Google Cloud IoT [70].

IoT cloud endpoints are relying more on third-party infrastructure to deploy and run their

services, which means vendors should consider a contingency plan for unplanned outages

and infrastructure compromises. Additional studies are needed to understand the managed

IoT cloud platforms and what possible weaknesses exist.

2.1.4 Communication

Network communication in IoT deployments falls into two classes of protocols: Internet

Protocol (IP) and low-energy (LE) protocols. Both types of communication can exist on the user

demarcation (see Figure 2.1) of the network, but only IP communication can go over the

Internet. Researchers from both industry and academia are heavily invested in the security

of network communication because of its applicability in other areas.

Most home-based IoT systems implement four types of communication protocols: IP,

Zigbee, Z-Wave, and Bluetooth-LE (BLE). IoT devices choose to use the IP suite for com-

munication due to its reliability and proven capability of transferring incredible volumes

of global network traffic. The IP protocol is stateless and offers no security, but it can be

supplemented by the use of TCP and TLS/SSL protocols to provide the security features

needed. Based on the literature, we identified five popular application layer protocols that

home-based IoT devices use, namely: DNS, HTTP, UPnP, NTP, and custom implementa-

tions.

Attack Vectors

The DNS protocol is a lightweight protocol that Internet services rely on, but it can inadvertently

leak private information depending on the recursive resolver and client configurations. Kintis et al. [51]

found that open recursive DNS resolvers that enable the EDNS Client Subnet (ECS) feature [71] (which

embeds a truncated portion of the client’s IP address) have privacy implications. Selvi [42]

demonstrated how a MITM attack on NTP was used to bypass HTTP Strict Transport Security

(HSTS). The HTTP protocol gives a more reliable mode of transport, but like

DNS and NTP, it does not provide any confidentiality or integrity. Bellissimo et al. [72]

and Samuel et al. [54] demonstrated how an insecure protocol like HTTP allows attackers

to MITM and backdoor the system software update process.
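
To make the ECS privacy concern concrete, the following Python sketch shows what a truncated portion of a client address looks like. The function name and the default prefix lengths are assumptions for illustration; the actual prefix length is a resolver policy choice, and the example addresses come from documentation ranges.

```python
import ipaddress


def ecs_prefix(client_ip: str, prefix_len: int = 24) -> str:
    """Return the truncated client subnet that an ECS-enabled resolver might forward."""
    # strict=False lets us pass a host address and get its enclosing network.
    network = ipaddress.ip_network(f"{client_ip}/{prefix_len}", strict=False)
    return str(network)


print(ecs_prefix("203.0.113.77"))        # IPv4 client, /24 assumed
print(ecs_prefix("2001:db8::1234", 56))  # IPv6 client, /56 assumed
```

Even the truncated prefix narrows the client down to a small network, which for a home deployment can correspond to a single household or a handful of subscribers.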

IoT devices widely rely on the UPnP protocol to offer easy configuration and control. UPnP

uses the HTTP protocol and hence inherits the same flaws [73]. Garcia [37] showed how

attackers abuse UPnP because it lacks authentication, validation, and logging. GNUcitizen

[74] demonstrated how a UPnP-enabled device is vulnerable to cross-site scripting

(XSS), while HD Moore [75] presented statistics and measurements around

UPnP-enabled devices on the Internet. Their work demonstrates that unauthenticated and

unencrypted use of application layer protocols enables attackers to mass exploit devices,

which leads to additional attacks. TLS/SSL sessions provide confidentiality and integrity,

which help address the inherent flaws in these communication protocols.

Researchers have thoroughly examined the TLS/SSL protocols and uncovered severe

vulnerabilities. Starting off in 2011, BEAST [36] exposed the initialization vector (IV) flaw

in TLS 1.0, which allowed attackers to predict the IV of the next message in the stream.

In 2012, CRIME [45] showed how TLS sessions that allow compression, like Google’s

SPDY protocol, were susceptible to session hijacking. In 2013, AlFardan et al. [38] used

malformed packets to infer time delays, a side-channel attack, in the MAC verification to

statistically infer the plaintext from the ciphertext. AlFardan et al. [41] also showed how

the RC4 stream cipher weakens the security of TLS sessions. POODLE [43] exposed a

downgrade flaw in SSL 3.0 that allowed for insecure communication between two parties.

Beurdouche et al. [46] found flaws in several client and server implementations of TLS/SSL

libraries that allow MITM attacks, including the FREAK [44] vulnerability.
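
The compression side channel behind CRIME can be illustrated with a short Python sketch. It is a simplified toy under our own assumptions: the cookie value, request layout, and function name are made up, and the real attack refines its guess one byte at a time over TLS record lengths rather than comparing whole values.

```python
import zlib

SECRET_COOKIE = b"sessionid=4f9c2a7be1d3580694abf7c2d1e08b35"  # made-up secret


def compressed_length(attacker_guess: bytes) -> int:
    """Length of a compressed request containing attacker input and the secret."""
    request = (b"GET /?q=" + attacker_guess
               + b" HTTP/1.1\r\nCookie: " + SECRET_COOKIE)
    return len(zlib.compress(request))


right = compressed_length(b"sessionid=4f9c2a7be1d3580694abf7c2d1e08b35")
wrong = compressed_length(b"sessionid=b71e6d04c8a25f93d0e4617a9c3f5b28")
print(right, wrong)  # the correct guess compresses to fewer bytes
```

Because encryption preserves length, an observer who controls part of the plaintext can use the compressed size as an oracle for the secret part, which is why disabling TLS-level compression removes this attack.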

Additional attacks disclosed by Adrian et al. [47] and DROWN [49] illustrated the dif-

ficulty of implementing secure communication protocols. Many IoT communications are

susceptible to MITM attacks because they support older versions of TLS/SSL protocols.

TLS/SSL is also widely used in managed IoT platforms to secure the communication chan-

nels. Emerging managed IoT platforms, like AWS IoT Core [68], Azure IoT Hub [69], and

Google Cloud IoT [70], implement custom protocols that utilize certificates and TLS/SSL.

These protocols and platforms are sparsely documented but rely on time-tested technolo-

gies to implement secure end-to-end communication.

The BLE [76], Zigbee [77], and Z-Wave [78] protocols have many security problems.

Ryan [39] showed a severe flaw in the key-exchange protocol for Bluetooth, which allows

an attacker to passively recover the session key. Jasek [50] demonstrated how attackers

can passively and actively abuse the Generic Attribute Profile (GATT) layer of the

Bluetooth network stack. Zillner et al. [48] showed how the Default Trust Center Link

Key defined by the Zigbee Alliance [77] is the same across all devices. Fouladi et al. [40]

showed how a hard-coded constant in the Z-Wave firmware is used to derive session keys,

which eventually became publicly known. Legacy versions of LE protocols have critical

security flaws, and many home-based IoT devices implement them in hardware, which limits

their mitigation options.

Aside from the inherent flaws, LE protocols offer a proximity feature that authentica-

tion systems rely on to identify geographical presence. Ho et al. [79] showed how relay

attacks were possible against LE protocols by serializing the LE packets and relaying them

over IP. Researchers have shown that MITM relay attacks against LE protocols are practical

and break the geographical proximity assumption that authentication systems rely on. These communication

channels can also raise privacy concerns, as demonstrated by Apthorpe et al. [52]

and Wood et al. [53].

Mitigations

For HTTP, UPnP, DNS, and NTP protocols, the suggested mitigations include disabling the

ECS feature in DNS, using updated versions of the NTP protocol (NTPv4), and wrapping

insecure protocols in TLS/SSL (e.g., HTTPS). For TLS/SSL implementation flaws, upgrading

the server-side and client-side libraries to the latest version should address the vulnerabil-

ities. Further, disabling weak or vulnerable TLS/SSL versions reduces exposure but loses

backward compatibility. For LE-based communication, the first generation of Zigbee and

Z-Wave protocols has critical flaws and limited mitigation options. Vendors can

disable insecure portions of these protocols [80] at the expense of compatibility.
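
As an example of disabling weak TLS/SSL versions on the client side, the following Python sketch builds a TLS context with the standard `ssl` module and sets a minimum protocol version. The choice of TLS 1.2 as the floor and the function name are assumptions for illustration; a deployment would pick the floor based on which peers it must remain compatible with.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses SSL 3.0, TLS 1.0, and TLS 1.1."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = make_client_context()
print(ctx.minimum_version.name)
```

A client configured this way fails the handshake with servers that only offer older protocol versions, which is the backward-compatibility cost noted above.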

A recent direction by researchers is the work found in Apthorpe et al. [52] and Wood

et al. [53]. Wood et al. [53] proposed a system that monitors the home network and informs

users of sensitive data sent by IoT devices. Apthorpe et al. [52] demonstrated how

traffic shaping on the home network can prevent side-channel snooping. This direction of

research requires additional attention to empower consumers in protecting their networks

and privacy.

Devices electing to use Z-Wave must now opt for Z-Wave Plus, which has improved security [81] and over-the-air (OTA) update capabilities. Also, Zigbee added a new security model, known as Trust Center (TC) [82], to allow for secure key distribution. TC is a trusted entity within the Zigbee network that is authorized to distribute keys to Zigbee client devices. TC gives each Zigbee-connected device a unique encryption key, unlike the legacy key distribution scheme. To mitigate relay attacks in LE protocols, Ho et al. [79] introduced a touch-based-intent communication approach using a body-area network (BAN) for signal propagation.
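The difference between a single shared default key and per-device keys issued by a trusted entity can be sketched as follows. This is a toy model under stated assumptions: the `TrustCenter` class, the device names, and the all-zero `LEGACY_SHARED_KEY` placeholder are hypothetical, and the sketch does not reproduce the actual Zigbee key transport or any real key value.

```python
import secrets
from typing import Dict, List

# Placeholder standing in for a well-known default key (NOT a real key value).
LEGACY_SHARED_KEY = bytes(16)


class TrustCenter:
    """Hypothetical model of a trusted entity that issues per-device keys."""

    def __init__(self) -> None:
        self._link_keys: Dict[str, bytes] = {}

    def issue_key(self, device_id: str) -> bytes:
        # Generate a fresh random 128-bit key the first time a device joins.
        if device_id not in self._link_keys:
            self._link_keys[device_id] = secrets.token_bytes(16)
        return self._link_keys[device_id]


def devices_exposed_by_leak(keys: Dict[str, bytes], leaked_device: str) -> List[str]:
    """Return every device whose key equals the leaked device's key."""
    leaked = keys[leaked_device]
    return sorted(name for name, key in keys.items() if key == leaked)


devices = ["lock", "bulb", "sensor"]
legacy_keys = {name: LEGACY_SHARED_KEY for name in devices}
trust_center = TrustCenter()
unique_keys = {name: trust_center.issue_key(name) for name in devices}
```

In this model, leaking the key of one device under the legacy scheme exposes all three devices, while under per-device keys only the leaked device is affected.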

Stakeholders

End-users cannot address the communication flaws since the implementation is on the device, the cloud endpoint, or in the mobile application. Further, vendors have limited options in addressing the communication vulnerabilities since some flaws require a hardware upgrade, although in some cases vendors can disable the vulnerable protocol features [80]. Vendors can also patch vulnerable libraries on the device, the mobile application, and the cloud endpoints.

Internet service providers (ISPs) have visibility into the utilization of IP-based protocols, but they are not directly responsible for any mitigation. For ISPs to be involved, they must provide network and legal policies that define their role. As for the LE protocols, vendors can mitigate legacy devices by disabling vulnerable pairing. Users can use alternate methods for pairing LE devices with IoT hubs if such options exist. Users can also buy newer devices that offer next-generation secure LE protocols, like Z-Wave Plus and Zigbee.

Take Away

Communication channels provide essential functions for home-based IoT. Home-based IoT devices have adopted industry standards for IP and LE protocols, but they suffer from legacy libraries that in some cases cannot be fixed.

Vendors bear the responsibility for addressing the vulnerabilities in the communication channels. Further, cloud endpoints and mobile applications can be updated by the

vendor directly, but vendors must be proactive and informed about vulnerabilities affecting

their software. IoT devices continue to rely on insecure protocols like UPnP and, as we will

show next, rarely encrypt their communication on the LAN. End-users do not know if their

device or mobile application is vulnerable to weak encryption or MITM attacks unless they

analyze and test the communication traffic. An informed power-user might segment their

local network into trusted and untrusted zones to limit the exposure.
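The idea of splitting a home network into trusted and untrusted zones can be modeled with a small policy check. This is a minimal sketch, not a firewall configuration: the subnet ranges, the zone names, and the rule that only the trusted zone may initiate cross-zone traffic are all assumptions chosen for illustration.

```python
import ipaddress

# Hypothetical zone layout; the address ranges are illustrative only.
ZONES = {
    "trusted": ipaddress.ip_network("192.168.10.0/24"),
    "untrusted_iot": ipaddress.ip_network("192.168.20.0/24"),
}


def zone_of(address: str) -> str:
    """Return the name of the zone containing the address, or 'unknown'."""
    ip = ipaddress.ip_address(address)
    for name, network in ZONES.items():
        if ip in network:
            return name
    return "unknown"


def is_allowed(src: str, dst: str) -> bool:
    """Allow traffic inside a zone and from the trusted zone outward.

    Traffic initiated from the untrusted IoT zone toward the trusted zone,
    or involving an address outside both zones, is denied.
    """
    src_zone, dst_zone = zone_of(src), zone_of(dst)
    if "unknown" in (src_zone, dst_zone):
        return False
    return src_zone == dst_zone or src_zone == "trusted"
```

For example, `is_allowed("192.168.10.5", "192.168.20.7")` permits a laptop in the trusted zone to reach an IoT device, while the reverse direction is denied, which limits what a compromised device can reach.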

TLS/SSL addresses insecure protocols that are susceptible to MITM attacks, but it also exhibits flaws in its implementation and deployment. The work of Clark et al. [83] provided additional analysis regarding SSL and HTTPS. ISPs can provide reports outlining best network practices and statistics about device and protocol utilization. Managed cloud IoT platforms use custom communication protocols that rely on public-key infrastructure (PKI) and TLS/SSL protocols. These new platforms are not well studied and warrant further investigation to identify any weaknesses in the protocols they use.

2.2 Malware Analysis

In order to perform a systematic comparison between traditional and IoT malware, we

require a principled framework that describes the malware threat cycle. We systematized

the literature on traditional malware and noticed five main components of the malware

lifecycle:

• Infection Vector is how the malware attacks a system.

• Payload is the dropped malware code after exploitation.

• Persistence is how the malware installs on a system.

• Capabilities are the functions in the malware code.

• C&C Infrastructure is how the malware communicates with the operator.

For each component, we identify techniques discussed in the literature for traditional malware (desktop/mobile) and empirically measure them for IoT malware (chapter 5). We further refine the categories by examining 25 papers from the systematized works to qualitatively derive subcategories under each component. We then use the MITRE ATT&CK taxonomy to derive additional subcategories not found in prior academic works but documented by security companies. Table 2.2 summarizes the comparative analysis framework components and their definitions.
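The framework can be encoded as a simple data structure, which makes it straightforward to label a sample with the subcategories it exhibits. The sketch below is one possible encoding using the component and subcategory names from Table 2.2; the `Component` enum, the `FRAMEWORK` mapping, the `profile` function, and the example sample are assumptions introduced for illustration and are not part of the measurement pipeline.

```python
from enum import Enum
from typing import Dict, List, Set


class Component(Enum):
    INFECTION = "Infection"
    PAYLOAD = "Payload"
    PERSISTENCE = "Persistence"
    CAPABILITY = "Capability"
    CNC = "C&C"


# Subcategory names follow Table 2.2, with abbreviations spelled out.
FRAMEWORK: Dict[Component, List[str]] = {
    Component.INFECTION: ["Remote Exploit", "Repackaging", "Drive-by",
                          "Phishing", "Default Credentials", "Removable Media"],
    Component.PAYLOAD: ["Packing", "Environment Keying", "Scripting",
                        "Cross-Architecture/Platform"],
    Component.PERSISTENCE: ["Firmware", "OS - Kernel", "OS - User"],
    Component.CAPABILITY: ["Privilege Escalation", "Defense Evasion",
                           "Information Theft", "Scanning", "DDoS",
                           "Destruction", "Resource Abuse"],
    Component.CNC: ["Peer-2-Peer", "Centralized", "Email/SMS"],
}


def profile(observed: Set[str]) -> Dict[Component, List[str]]:
    """Group observed subcategories by framework component.

    Raises ValueError if a label is not part of the framework.
    """
    known = {name for names in FRAMEWORK.values() for name in names}
    unknown = set(observed) - known
    if unknown:
        raise ValueError(f"unknown subcategories: {sorted(unknown)}")
    return {
        component: [name for name in names if name in observed]
        for component, names in FRAMEWORK.items()
        if any(name in observed for name in names)
    }


# Hypothetical sample, not taken from the dataset.
example = profile({"Default Credentials", "Scanning", "DDoS"})
```

Here `example` groups the three labels under the Infection and Capability components, in the order the subcategories appear in the framework.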

2.2.1 Infection Stage

Desktop Infection Vectors. In Table 2.3, we see that desktop malware pioneered many of the infection techniques. Moore et al. [84] document the SQL Slammer worm that exploited vulnerable SQL services on the internet. Although no large academic study explored desktop malware’s use of repackaging, default credentials, and removable media, there are ample instances from security companies documenting these techniques [85, 86, 87]. Desktop malware relies more on infection vectors like drive-by download and phishing. Provos et al. [88] present an extensive study on drive-by downloads, and several prior works measure [88, 89, 90, 91] and propose defenses [92, 93, 94] for them.

Table 2.2: A summary of the proposed comparative framework and definitions for each component.

| Component | Subcategory | Definition |
|---|---|---|
| Infection | Remote Exploit | Remote Exploit refers to exploiting a service or an application running on a device. |
| Infection | Repackaging | Repackaging refers to a benign application repackaged with malware (e.g., pirated software). |
| Infection | Drive-by | Drive-by refers to infection by redirecting the system to a malicious resource. |
| Infection | Phishing | Phishing refers to social engineering attacks that trick a user into getting infected. |
| Infection | Default Cred. | Default Credentials refers to the use of vendor default credentials for device access. |
| Infection | Rem. Media | Removable Media refers to the use of USB for infection between devices. |
| Payload | Packing | Packing refers to the use of packers or polymorphic techniques for obfuscation. |
| Payload | Env. Keying | Env. Keying refers to the dependence on the target’s environment artifact (e.g., HW id). |
| Payload | Scripting | Scripting refers to the use of a scripting interpreter (e.g., PowerShell, sh, etc.). |
| Payload | Cross-Arch/Plat. | Cross-Arch/Plat. refers to using payloads for different architectures (x86, ARM, etc.) or platforms (Windows, Android, etc.). |
| Persist. | Firmware | Firmware refers to persisting by modifying the device’s firmware. |
| Persist. | OS - Kernel | OS - Kernel refers to persisting as a kernel module. |
| Persist. | OS - User | OS - User refers to persisting in user-space through configuration or process/service. |
| Capability | Priv. Escalation | Priv. Escalation refers to exploiting an OS vulnerability to elevate privilege on a device. |
| Capability | Defense Evasion | Defense Evasion refers to actively avoiding or disabling security features on the device. |
| Capability | Info. Theft | Info. Theft refers to profiling and exfiltrating sensitive information from the device. |
| Capability | Scanning | Scanning refers to using the device to scan for other devices. |
| Capability | DDoS | DDoS refers to using the infected device to orchestrate a DDoS attack. |
| Capability | Destruction | Destruction refers to actively destroying or ransoming the device. |
| Capability | Resource Abuse | Resource Abuse refers to using the device to run unauthorized services or applications. |
| C&C | Peer-2-Peer | Peer-2-Peer refers to using a peer-2-peer network protocol for managing the botnet. |
| C&C | Centralized | Centralized refers to using a central C&C server for managing the botnet. |
| C&C | Email/SMS | Email/SMS refers to using email or short message service for call-back to the bot master. |

For phishing, Abu Rajab et al. [95] present a multi-dimensional measurement of botnets. Their work documents how botnets leverage phishing emails for spreading. Holz et al. [89] and Kotzias et al. [96] empirically show that phishing is a common infection vector affecting desktop users. Desktop malware continues to evolve and makes up a large portion of the threats on the internet. The key insight is that desktop malware initially used remote exploitation and default credentials to spread automatically but has evolved to depend on user interaction. Currently, desktop malware’s most common infection techniques require user interaction, such as phishing (email), drive-by download (browsing), removable media (physical interaction), and repackaging (e.g., pirated software).
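The distinction between automated and user-driven infection vectors can be made explicit with a small lookup. The grouping below follows the paragraph above (remote exploitation and default credentials spread automatically; phishing, drive-by download, removable media, and repackaging need a user action); the names `REQUIRES_USER_INTERACTION` and `can_self_propagate` are assumptions for this sketch.

```python
from typing import Dict, Iterable

# True means the vector depends on a user action, per the discussion above.
REQUIRES_USER_INTERACTION: Dict[str, bool] = {
    "Remote Exploit": False,
    "Default Credentials": False,
    "Phishing": True,
    "Drive-by": True,
    "Removable Media": True,
    "Repackaging": True,
}


def can_self_propagate(vectors: Iterable[str]) -> bool:
    """Return True if at least one vector spreads without user interaction."""
    return any(not REQUIRES_USER_INTERACTION[vector] for vector in vectors)


# Hypothetical vector sets used only to exercise the helper.
worm_like = can_self_propagate({"Remote Exploit", "Phishing"})
user_driven = can_self_propagate({"Phishing", "Drive-by", "Repackaging"})
```

A malware family whose vectors all map to `True` cannot spread on its own under this model, which is the property the comparison across platforms relies on.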

Mobile Infection Vectors. Similar to our study, Zhou et al. [108] look at Android mobile malware and characterize the infection techniques. Their work shows that much Android malware uses repackaging, drive-by download, and phishing to propagate, as shown in Table 2.3. Lindorfer et al. [110] identify removable media propagation techniques in their large-scale study. The key insight is that, unlike desktop malware, mobile malware is dependent on user interaction. Automated spreading has not been documented for the mobile platform. While worm-based malware for the Android platform do exist, they require

Table 2.3: A comparison between desktop, mobile, and IoT malware using the proposed

framework.

Column groups: Components (Categories); Summary (Desktop, Mobile, IoT); Desktop; Mobile; IoT.

Desktop: Moore03 [84], Krueg05 [97], AbuRa06 [95], Barfo07 [98], AbuRa07 [99], Dagon07 [100], Holz08 [101], Polyc08 [102], Kanic08 [103], Holz08 [89], Provo08 [88], Stone09 [92], Lu10 [93], Cho10 [104], Lindo11 [105], Shin11 [90], Rosso12 [106], Inver14 [94], Kwon15 [91], Ganan15 [107], Kotzi19 [96]

Mobile: Zhou12 [108], Lever13 [109], Lindo14 [110], Tam17 [111]

Infection

Remote Exploit ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

Sec. 5.3.2

Repackaging ✓* ✓ ✓

Drive-by ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

Phishing ✓ ✓ ✓ ✓ ✓ ✓

Default Cred. ✓* ✓ ✓

Rem. Media ✓* ✓ ✓

Payload

Packing ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

Sec. 5.3.3

Env. Keying ✓ ✓ ✓ ✓ ✓ ✓

Scripting ✓* ✓ ✓

Cross-Arch/Plat. ✓* ✓ ✓ ✓ ✓

Persist.

Firmware ✓ ✓ ✓ ✓

Sec. 5.3.4

OS - Kernel ✓ ✓ + ✓ ✓ ✓ ✓

OS - User ✓ ✓ + ✓ ✓ ✓

Capability

Priv. Escalation ✓ ✓ ✓ ✓ ✓ ✓

Sec. 5.3.5

Defense Evasion ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

Info. Theft ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

Scanning ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

DDoS ✓ ✓ ✓ ✓ ✓ ✓

Destruction ✓ ✓ ✓ ✓ ✓ ✓

Resource Abuse ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓

C&C

Peer-2-Peer ✓ ✓ ✓ ✓ ✓ ✓ ✓

Sec. 5.3.6

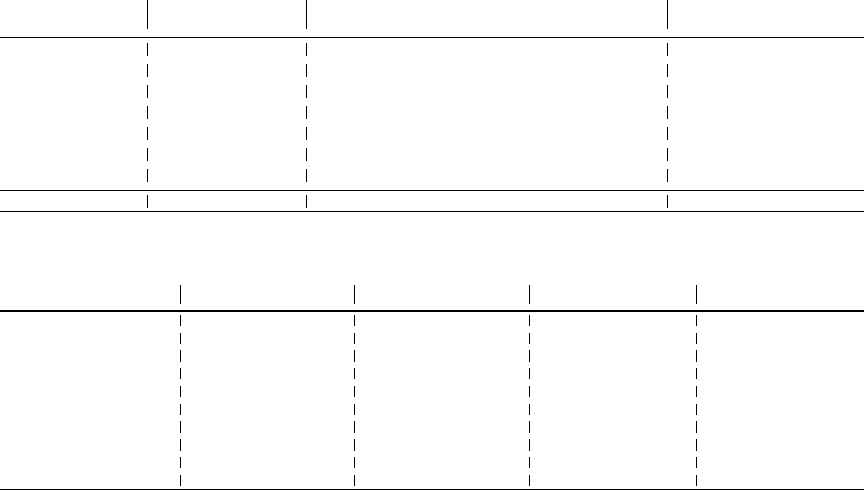

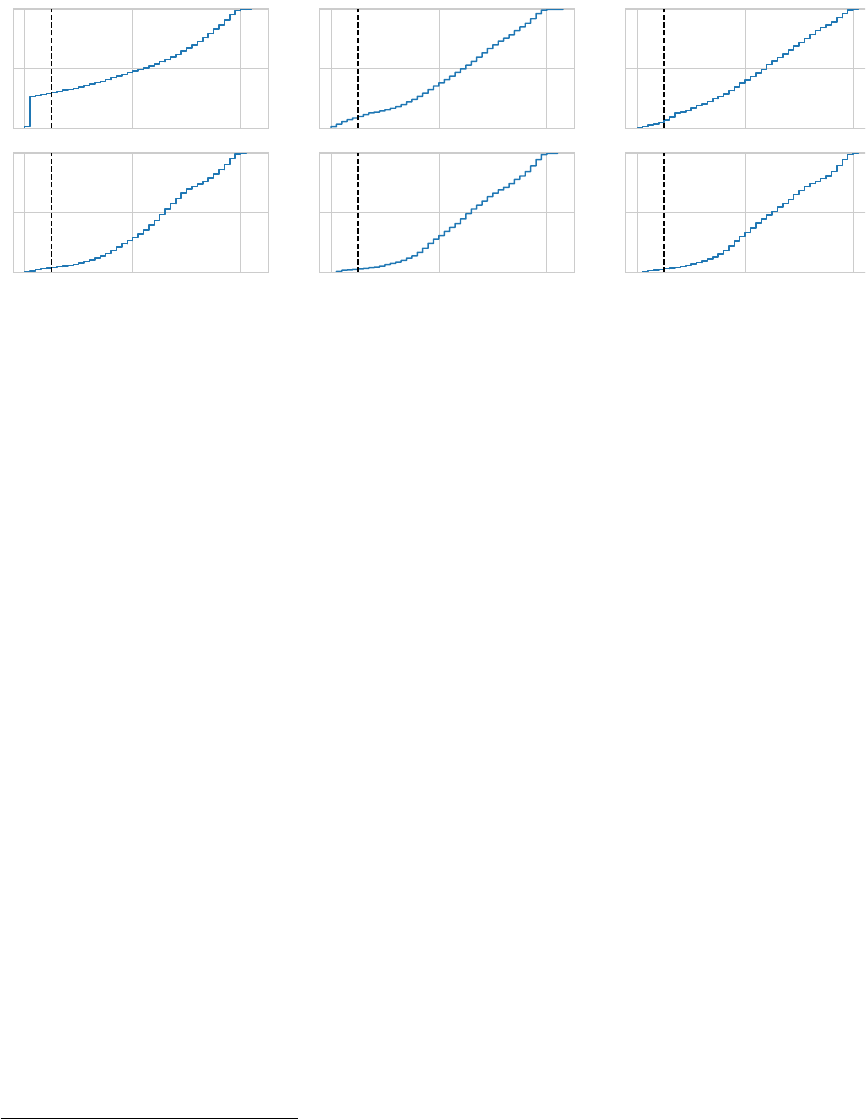

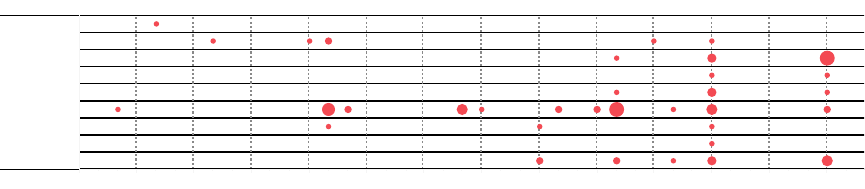

Centralized ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓