Knowledge Extraction from Fictional Texts

Cuong Xuan Chu

Dissertation zur Erlangung des Grades des

DOKTORS DER INGENIEURWISSENSCHAFTEN (DR.-ING.)

der Fakultät für Mathematik und Informatik

der Universität des Saarlandes

Saarbrücken, 2022

Day of Colloquium 25/04/2022

Dean of the Faculty Univ.-Prof. Dr. Jürgen Steimle

Chair of the Committee Prof. Dr. Dietrich Klakow

Reporters

First Reviewer Prof. Dr. Gerhard Weikum

Second Reviewer Dr. Simon Razniewski

Third Reviewer Prof. Dr. Martin Theobald

Academic Assistant Dr. Frances Yung

ii

Abstract

Knowledge extraction from text is a key task in natural language processing, which

involves many sub-tasks, such as taxonomy induction, named entity recognition and

typing, relation extraction, knowledge canonicalization and so on. By constructing struc-

tured knowledge from natural language text, knowledge extraction becomes a key asset

for search engines, question answering and other downstream applications. However,

current knowledge extraction methods mostly focus on prominent real world entities

with Wikipedia and mainstream news articles as sources. The constructed knowledge

bases, therefore, lack information about long-tail domains, with fiction and fantasy as

archetypes. Fiction and fantasy are core parts of our human culture, spanning from

literature to movies, TV series, comics and video games. With thousands of fictional

universes which have been created, knowledge from fictional domains are subject of

search-engine queries – by fans as well as cultural analysts. Unlike the real-world do-

main, knowledge extraction on such specific domains like fiction and fantasy has to tackle

several key challenges:

• Training data. Sources for fictional domains mostly come from books and fan-built

content, which is sparse and noisy, and contains difficult structures of texts, such

as dialogues and quotes. Training data for key tasks such as taxonomy induction,

named entity typing or relation extraction are also not available.

• Domain characteristics and diversity. Fictional universes can be highly sophis-

ticated, containing entities, social structures and sometimes languages that are

completely different from the real world. State-of-the-art methods for knowledge

extraction make assumptions on entity-class, subclass and entity-entity relations

that are often invalid for fictional domains. With different genres of fictional do-

mains, another requirement is to transfer models across domains.

• Long fictional texts. While state-of-the-art models have limitations on the input

sequence length, it is essential to develop methods that are able to deal with very

long texts (e.g. entire books), to capture multiple contexts and leverage widely

spread cues.

iii

This dissertation addresses the above challenges, by developing new methodologies

that advance the state of the art on knowledge extraction in fictional domains.

• The first contribution is a method, called TiFi, for constructing type systems

(taxonomy induction) for fictional domains. By tapping noisy fan-built content

from online communities such as Wikia, TiFi induces taxonomies through three

main steps: category cleaning, edge cleaning and top-level construction. Exploiting

a variety of features from the original input, TiFi is able to construct taxonomies

for a diverse range of fictional domains with high precision.

• The second contribution is a comprehensive approach, called ENTYFI, for named

entity recognition and typing in long fictional texts. Built on 205 automatically

induced high-quality type systems for popular fictional domains, ENTYFI exploits

the overlap and reuse of these fictional domains on unseen texts. By combining

different typing modules with a consolidation stage, ENTYFI is able to do fine-

grained entity typing in long fictional texts with high precision and recall.

• The third contribution is an end-to-end system, called KnowFi, for extracting

relations between entities in very long texts such as entire books. KnowFi leverages

background knowledge from 142 popular fictional domains to identify interesting

relations and to collect distant training samples. KnowFi devises a similarity-

based ranking technique to reduce false positives in training samples and to select

potential text passages that contain seed pairs of entities. By training a hierarchical

neural network for all relations, KnowFi is able to infer relations between entity

pairs across long fictional texts, and achieves gains over the best prior methods for

relation extraction.

iv

Kurzfassung

Wissensextraktion ist ein Schlüsselaufgabe bei der Verarbeitung natürlicher Sprache,

und umfasst viele Unteraufgaben, wie Taxonomiekonstruktion, Entitätserkennung und

Typisierung, Relationsextraktion, Wissenskanonikalisierung, etc. Durch den Aufbau

von strukturiertem Wissen (z.B. Wissensdatenbanken) aus Texten wird die Wissen-

sextraktion zu einem Schlüsselfaktor für Suchmaschinen, Question Answering und an-

dere Anwendungen. Aktuelle Methoden zur Wissensextraktion konzentrieren sich je-

doch hauptsächlich auf den Bereich der realen Welt, wobei Wikipedia und Mainstream-

Nachrichtenartikel die Hauptquellen sind. Fiktion und Fantasy sind Kernbestandteile

unserer menschlichen Kultur, die sich von Literatur bis zu Filmen, Fernsehserien, Comics

und Videospielen erstreckt. Für Tausende von fiktiven Universen wird Wissen aus Such-

maschinen abgefragt – von Fans ebenso wie von Kulturwissenschaftler. Im Gegensatz zur

realen Welt muss die Wissensextraktion in solchen spezifischen Domänen wie Belletristik

und Fantasy mehrere zentrale Herausforderungen bewältigen:

• Trainingsdaten. Quellen für fiktive Domänen stammen hauptsächlich aus Büch-

ern und von Fans erstellten Inhalten, die spärlich und fehlerbehaftet sind und

schwierige Textstrukturen wie Dialoge und Zitate enthalten. Trainingsdaten für

Schlüsselaufgaben wie Taxonomie-Induktion, Named Entity Typing oder Relation

Extraction sind ebenfalls nicht verfügbar.

• Domain-Eigenschaften und Diversität. Fiktive Universen können sehr anspruchsvoll

sein und Entitäten, soziale Strukturen und manchmal auch Sprachen enthalten,

die sich von der realen Welt völlig unterscheiden. Moderne Methoden zur Wissen-

sextraktion machen Annahmen über Entity-Class-, Entity-Subclass- und Entity-

Entity-Relationen, die für fiktive Domänen oft ungültig sind. Bei verschiedenen

Genres fiktiver Domänen müssen Modelle auch über fiktive Domänen hinweg trans-

ferierbar sein.

• Lange fiktive Texte. Während moderne Modelle Einschränkungen hinsichtlich der

Länge der Eingabesequenz haben, ist es wichtig, Methoden zu entwickeln, die in

v

der Lage sind, mit sehr langen Texten (z.B. ganzen Büchern) umzugehen, und

mehrere Kontexte und verteilte Hinweise zu erfassen.

Diese Dissertation befasst sich mit den oben genannten Herausforderungen, und ent-

wickelt Methoden, die den Stand der Kunst zur Wissensextraktion in fiktionalen Domä-

nen voranbringen.

• Der erste Beitrag ist eine Methode, genannt TiFi, zur Konstruktion von Typ-

systemen (Taxonomie induktion) für fiktive Domänen. Aus von Fans erstell-

ten Inhalten in Online-Communities wie Wikia induziert TiFi Taxonomien in

drei wesentlichen Schritten: Kategoriereinigung, Kantenreinigung und Top-Level-

Konstruktion. TiFi nutzt eine Vielzahl von Informationen aus den ursprünglichen

Quellen und ist in der Lage, Taxonomien für eine Vielzahl von fiktiven Domänen

mit hoher Präzision zu erstellen.

• Der zweite Beitrag ist ein umfassender Ansatz, genannt ENTYFI, zur Erkennung

von Entitäten, und deren Typen, in langen fiktiven Texten. Aufbauend auf 205

automatisch induzierten hochwertigen Typsystemen für populäre fiktive Domänen

nutzt ENTYFI die Überlappung und Wiederverwendung dieser fiktiven Domä-

nen zur Bearbeitung neuer Texte. Durch die Zusammenstellung verschiedener

Typisierungsmodule mit einer Konsolidierungsphase ist ENTYFI in der Lage, in

langen fiktionalen Texten eine feinkörnige Entitätstypisierung mit hoher Präzision

und Abdeckung durchzuführen.

• Der dritte Beitrag ist ein End-to-End-System, genannt KnowFi, um Relationen

zwischen Entitäten aus sehr langen Texten wie ganzen Büchern zu extrahieren.

KnowFi nutzt Hintergrundwissen aus 142 beliebten fiktiven Domänen, um inter-

essante Beziehungen zu identifizieren und Trainingsdaten zu sammeln. KnowFi

umfasst eine ähnlichkeitsbasierte Ranking-Technik, um falsch positive Einträge in

Trainingsdaten zu reduzieren und potenzielle Textpassagen auszuwählen, die Paare

von Kandidats-Entitäten enthalten. Durch das Trainieren eines hierarchischen

neuronalen Netzwerkes für alle Relationen ist KnowFi in der Lage, Relationen

zwischen Entitätspaaren aus langen fiktiven Texten abzuleiten, und übertrifft die

besten früheren Methoden zur Relationsextraktion.

vi

Acknowledgments

First and foremost, I would like to thank my supervisor, Prof. Dr. Gerhard Weikum,

for being my mentor since I started my Master program, giving me the opportunity

to carry out this research and providing invaluable guidance throughout my doctoral

studies. From him, I have learned about simplicity, vision and enthusiasm that broaden

my attitude towards research. This seven years is definitely an invaluable period of time

in my life.

I would like to thank my co-supervisor, Dr. Simon Razniewski, for being a great friend

and an excellent collaborator. Without his insightful comments and guidance, I would

have not done this work. Working with Simon, I have gained a lot of valuable experience

on the research and advices for my future career.

I would like to thank the additional reviewer and examiner of my dissertation, Prof. Dr.

Martin Theobald, and thanks to Prof. Dr. Dietrich Klakow and Dr. Frances Yung for

being the chair and the academic assistant of my Ph.D. committee.

I also would like to thank my colleagues and staff at D5 group for making the work-

place an exciting and friendly atmosphere. A special note of thanks to Petra, Alena,

Daniela, Jenny and Steffi, for their great support. Many thanks to my officemates, Dat

Ba Nguyen, Dhruv Gupta, Mohamed H. Gad-Elrab, to my lunchmates, Vinh-Thinh Ho,

Tuan-Phong Nguyen, Thong Nguyen and Hai-Dang Tran, for the relax and helpful dis-

cussions with them.

I am also very grateful to Uncle Duy Ta, Aunt Hong Le, and my friends in Saarbruecken

for their great help to my life.

Last but not least, I would like to thank my family for their constant support throughout

the years.

vii

Contents

1 Introduction 1

1.1 Motivation and Scope . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Challenges . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.4 Publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.5 Organization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

2 Background 9

2.1 Knowledge Bases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.1.1 Encyclopedic Knowledge Bases . . . . . . . . . . . . . . . . . . . 9

2.1.2 Other Knowledge Bases . . . . . . . . . . . . . . . . . . . . . . . 9

2.1.3 Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

2.2 Knowledge Base Construction . . . . . . . . . . . . . . . . . . . . . . . . 12

2.2.1 Manual Construction . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.2.2 Automated KB Construction . . . . . . . . . . . . . . . . . . . . 13

2.3 Input Sources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.4 NLP for Fictional Texts . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3 TiFi: Taxonomy Induction for Fictional Domains 23

3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.1.1 Motivation and Problem . . . . . . . . . . . . . . . . . . . . . . . 23

3.1.2 Approach and Contribution . . . . . . . . . . . . . . . . . . . . . 25

3.2 Related Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.3 Design Rationale and Overview . . . . . . . . . . . . . . . . . . . . . . . 28

3.3.1 Design Space and Choices . . . . . . . . . . . . . . . . . . . . . . 28

3.3.2 TiFi Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

3.4 Category Cleaning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

3.5 Edge Cleaning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

3.6 Top-level Construction . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

ix

Contents

3.7 Evaluation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

3.7.1 Step 1: Category Cleaning . . . . . . . . . . . . . . . . . . . . . . 37

3.7.2 Step 2: Edge Cleaning . . . . . . . . . . . . . . . . . . . . . . . . 40

3.7.3 Step 3: Top-level Construction . . . . . . . . . . . . . . . . . . . . 41

3.7.4 Final Taxonomies . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.7.5 Wikipedia as Input . . . . . . . . . . . . . . . . . . . . . . . . . . 43

3.7.6 WebIsALOD as Input . . . . . . . . . . . . . . . . . . . . . . . . 43

3.8 Use Case: Entity Search . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.9 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

4 ENTYFI: Entity Typing in Fictional Texts 47

4.1 Introductions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

4.2 Related Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

4.3 Design Space and Approach . . . . . . . . . . . . . . . . . . . . . . . . . 51

4.4 Type System Construction . . . . . . . . . . . . . . . . . . . . . . . . . . 52

4.5 Reference Universe Ranking . . . . . . . . . . . . . . . . . . . . . . . . . 54

4.6 Mention Detection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

4.7 Mention Typing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.7.1 Supervised Fiction Types . . . . . . . . . . . . . . . . . . . . . . 56

4.7.2 Supervised Real-world Types . . . . . . . . . . . . . . . . . . . . 58

4.7.3 Unsupervised Typing . . . . . . . . . . . . . . . . . . . . . . . . . 58

4.7.4 KB Lookup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

4.8 Type Consolidation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

4.9 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

4.9.1 Test Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

4.9.2 Automated End-to-End Evaluation . . . . . . . . . . . . . . . . . 62

4.9.3 Crowdsourced End-to-End Evaluation . . . . . . . . . . . . . . . 65

4.9.4 Component Evaluation . . . . . . . . . . . . . . . . . . . . . . . . 66

4.9.5 Unconventional Real-world Domains . . . . . . . . . . . . . . . . 68

4.10 ENTYFI Demonstration . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

4.10.1 Web Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

4.10.2 Demonstration Experience . . . . . . . . . . . . . . . . . . . . . . 71

4.11 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

5 KnowFi: Knowledge Extraction from Long Fictional Texts 75

5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

5.2 Related Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

x

Contents

5.3 System Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

5.4 Distant Supervision with Passage Ranking . . . . . . . . . . . . . . . . . 80

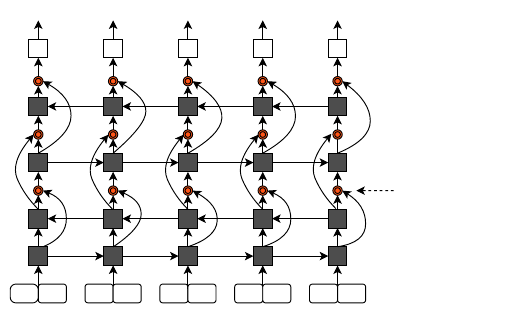

5.5 Multi-Context Neural Extraction . . . . . . . . . . . . . . . . . . . . . . 81

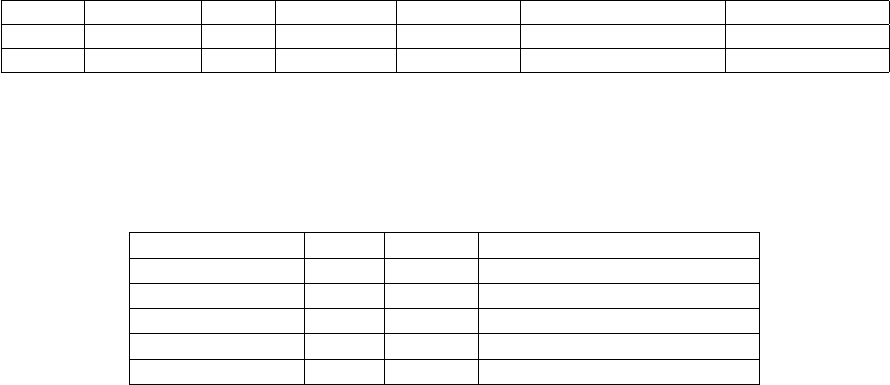

5.6 LoFiDo Benchmark . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

5.7 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

5.7.1 Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

5.7.2 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

5.7.3 Anecdotal Examples . . . . . . . . . . . . . . . . . . . . . . . . . 87

5.7.4 Background KB Statistics . . . . . . . . . . . . . . . . . . . . . . 87

5.8 Extrinsic Use Case: Entity Summarization . . . . . . . . . . . . . . . . . 88

5.9 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

6 Conclusions 89

6.1 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

6.2 Discussion and Future Work . . . . . . . . . . . . . . . . . . . . . . . . . 90

A KnowFi – Training Data Extraction 95

B KnowFi – Additional Experiments 99

List of Figures 103

List of Tables 106

Bibliography 107

xi

Chapter 1

Introduction

1.1 Motivation and Scope

Motivation With the tremendous expansion of the internet, there is a huge amount

of data that is put online every day. This information is stored and shared in different

forms such as text, audio or visual. Among them, text is the most popular form that is

presented in variety sources such as books, news articles, web pages and more. With the

rapid development of artificial intelligence, the need to develop intelligent applications

requires computers or machines to be able to learn “knowledge”. That is the time when

the term knowledge harvesting (or knowledge extraction) appeared. Knowledge harvest-

ing is the task of extracting structured knowledge (or machine-readable knowledge) from

noisy Internet content and storing them into knowledge bases. A knowledge base (KB) is

a collection of facts, usually presented in a form of triples SPO: subject-predicate-object,

about the real world. Consider the following example:

“In 1895, Marie Curie married the French physicist Pierre Curie, and she

shared the 1903 Nobel Prize in Physics with him and with the physicist Henri

Becquerel for their pioneering work developing the theory of “radioactivity”

– a term she coined.”

1

From this text, the goal of knowledge harvesting is extracting a list of facts, such as:

• <Marie_Curie, married_to, Pierre_Curie, 1895>

• <Marie_Curie, win, Nobel_Prize_in_Physics, 1903>

• <Pierre_Curie, win, Nobel_Prize_in_Physics, 1903>

• <Henri_Becquerel, win, Nobel_Prize_in_Physics, 1903>

• <Marie_Curie, work_on, Radioactivity>

1

https://en.wikipedia.org/wiki/Marie_Curie

1

CHAPTER 1. INTRODUCTION

• <Pierre_Curie, is_a, Physicist>

• ...

In the last decade, computer scientists have put a lot of effort into automatically

extracting and organizing these structured knowledge. Large KBs have been built like

YAGO [Hoffart et al., 2013, Suchanek et al., 2007], DBpedia [Auer et al., 2007], Wikidata

[Vrandečić and Krötzsch, 2014], etc., and become a key asset on search engine and

question answering systems. For example, when a user searches for “Nobel prizes of

Marie Curie” on search engine systems like Google or Bing, a direct answer, which

includes a list of two Nobel prizes, Physics, 1903 and Chemistry, 1911, is returned.

Apparently, these systems have knowledge about Marie Curie and knowledge about the

concept Nobel prizes, hence, are able to provide answers for the user. In fact, Google

has Google Knowledge Graph and Bing has Microsoft Satori in their backend data.

However, current KBs are mostly constructed for our real world domain, where Wikipedia

and main stream news are primary sources. These KBs, hence, lack knowledge about

long-tail domains, where fiction and fantasy are the most prominent. Fiction and fantasy

are core parts of our human culture, spanning from traditional literature into modern

stories, movies, TV series and games. People have created a huge collection of fic-

tional universes such as Greek and Roman Mythology (myths), Marvel and DC comics

(comics), Harry Potter and Lord of the Rings (high fantasy novels), World of Warcraft

and League of Legends (games), and so on. These universes are well-structured, with

thousands of entities and types that are usually completely different from our real world.

Served as entertainment, people spend a lot of time on fiction and fantasy. As a statistic

in 2020, a U.S consumer spent 213 minutes (3h33min) daily watching TV on average

2

.

With such high attention, the information from fiction and fantasy are usually subjects

for search-engine queries by fans and topics for culture analysis.

Consider more examples. As a fan of the popular TV series Game of Thrones, a user

wants to retrieve a list of “enemies of Jon Snow” – a main character in the series and

looks for the answer from search engine systems. Instead of providing a list of enemies,

these systems, however, only return a list of web pages where the user can access and

find the answer by themselves. This scenario also happens when a user is looking for

a list of “muggles in Harry Potter” – another popular TV series (and novels as well).

Apparently, KBs in the backend data of search engine systems lack information about

these fictional domains. Research shows that in popular recommendation systems, the

dataset in DBpedia only contains less than 85% number of movies, 63% number of

music artists and 31% for books [Hertling and Paulheim, 2018, Noia et al., 2016] and

2

https://www.statista.com/statistics/186833/average-television-use-per-person-in-the-us-since-2002/

2

1.1. MOTIVATION AND SCOPE

the numbers for entities and facts about them in these domains are much more lower.

Therefore, knowledge extraction from fictional domains becomes an essential task. Not

only using the output to enhance existing KBs, techniques used in these domains can

be also adapted for other specific domains such as professional domains, companies or

even in new languages.

Scope Working on knowledge extraction involves three main sub-tasks: building type

systems for entities (e.g. taxonomy induction), named entity recognition and typing,

and relation extraction.

Taxonomy induction is the task of constructing type systems or class subsumption

hierarchies. For example, electric guitar players are rock musicians, and muggle-born

wizards are magic creatures. Taxonomies are an essential part of KBs, and important

resources for a variety of tasks such as entity search, question answering and relation

extraction. As statistics, YAGO includes over 350,000 entity types [Suchanek et al.,

2007], and DBPedia includes over one million type labels and concepts that are retrieved

from Wikipedia and also linked to other KBs such as Yago, UMBEL and schema.org.

Named entity recognition and typing is the task of identifying entity mentions in

text and classifying them into semantic classes such as person, location, etc. as in coarse-

grained level, or musicians, muggle-born wizards, etc. as in finer-grained level. For

the example about Marie Curie, state-of-the-art NER systems annotate Marie Curie,

Pierre Curie and Henri Becquerel as person and physicist, and 1903 Nobel Prize

in Physics as award.

Relation extraction is the task of identifying and classifying semantic relations

between entities, and thus can extract facts from natural language texts. For example,

the relation spouse between Marie Curie and Pierre Curie can be inferred based on

the context around these two entities.

Along with the above sub-tasks, a variety of other sub-tasks are also tackled to im-

prove the quality of extracted knowledge, such as co-reference resolution, name entity

disambiguation and discourse parsing. Although those problems have been investigated

for a long time, knowledge about fiction and fantasy has been not explored yet. The

issues come from sparse sources that are used to extract the knowledge and suitable

methodologies for natural language processing and knowledge extraction for these spe-

cific domains.

3

CHAPTER 1. INTRODUCTION

1.2 Challenges

Challenge C1: Input Sources and Training Data Knowledge extraction mainly takes

the Internet content as resources. While Wikipedia, a premium source with rich and

high-quality content, is the main input for knowledge extraction in the real-world do-

main, sources for fictional domains come from books or fan-built content, which is noisy

and contains difficult structures of text such as dialogues and quotes. In addition, with

recent advances in deep learning, it is essential to prepare training data for each specific

NLP task, which are mostly not available when working on new domains, like fiction and

fantasy. For example, taxonomy induction in the real-world domain can leverage the

existing Wikipedia category system as the starting point [Gupta et al., 2016c, Hoffart

et al., 2013, Ponzetto and Strube, 2011], but this category network is not suitable for

fiction and fantasy due to poor coverage. The taxonomies (or type systems) also needs to

be pre-defined and constructed before working on named entity recognition and typing

task, especially when the target types are fine-grained. In the case of relation extraction,

output relations and their training data are also not available for fictional domains.

Challenge C2: Domain-specific Taxonomy Entity classes and subclass relations are

different from the real-world domain. State-of-the-art methods for taxonomy induction

make assumptions about the surface forms of entity names and entity classes which do

not apply in fictional domains. For example, they assume typical phrases for classes

(e.g. noun phrases in plural form) and named entities (e.g. proper names) which do not

always hold in fictional domains. Also the assumption that certain classes are disjoint

is also invalid (e.g., living beings and abstract entities, the oracle of Delphi being a

counterexample).

Challenge C3: Contextual Typing in Long Fictional Texts State-of-the-art methods

for entity typing on news and other real-world texts leverage types from Wikipedia

categories or WordNet concepts and focus on typing a single entity mention, based on

its surrounding context (e.g. usually in a single sentence) [Choi et al., 2018, Dong et al.,

2015, Shimaoka et al., 2017]. Entity typing in fictional domains, on the other hand,

requires the model to predict types for entity mentions in long texts (e.g. Potter in the

whole book Harry Potter). Since one entity could be mentioned in multiple sentences, it

is essential to design a model that is able to leverage different contexts and consolidate

the outputs.

4

1.3. CONTRIBUTIONS

Challenge C4: Relation Extraction in Long Fictional Texts Similar to the entity typ-

ing task, relation extraction in fictional domains also has to tackle the same challenge

when working on long texts. State-of-the-art methods for relation extraction mostly work

on single sentences or short documents. They focus on general encyclopedic knowledge

about prominent people, places, etc., and basic relations of wide interest such as birth-

place, birthdate, spouses, etc. [Carlson et al., 2010b, Shi and Lin, 2019, Soares et al.,

2019, Zhou et al., 2021]. For knowledge on fictional domains, people are more interested

in relations that capture traits of characters and key elements of the narrations where

training data for them is not available, such as allies, enemies, skills, etc. To extract

these relations, it requires the model to handle multiple contexts between each entity

pair, across the whole input text (e.g. books). For example, what is the relation between

Harry Potter and Severus Snape in Harry Potter? enemy or ally?

1.3 Contributions

This work addresses the above challenges by developing methods to advance the state

of the art:

TiFi We present TiFi [Chu et al., 2019], the first method to construct taxonomies for

fictional domains (Challenge C2). TiFi uses noisy category systems from fan wikis

or text extraction as input and building the taxonomies through three main steps: (i)

category cleaning, by identifying candidate categories that truly represent classes in

the domain of interest, (ii) edge cleaning, by selecting subcategory relationships that

correspond to class subsumption, and (iii) top-level construction, by mapping classes

onto a subset of high-level WordNet categories. A comprehensive evaluation shows that

TiFi is able to construct taxonomies for a diverse range of fictional domains such as Lord

of the Rings, The Simpsons, or Greek Mythology with very high precision and that it

outperforms state-of-the-art baselines for taxonomy induction by a substantial margin.

ENTYFI We present ENTYFI [Chu et al., 2020a,b], the first method for typing entities

in fictional texts coming from books, fan communities or amateur writers (Challenge

C3). ENTYFI builds on 205 automatically induced high-quality type systems for pop-

ular fictional domains, and exploits the overlap and reuse of these fictional domains for

fine-grained typing in previously unseen texts. ENTYFI comprises five steps: type sys-

tem induction, domain relatedness ranking, mention detection, mention typing, and type

consolidation. The recall-oriented typing module combines a supervised neural model,

5

CHAPTER 1. INTRODUCTION

unsupervised Hearst-style and dependency patterns, and knowledge base lookups. The

precision-oriented consolidation stage utilizes co-occurrence statistics in order to remove

noise and to identify the most relevant types. Extensive experiments on newly seen

fictional texts demonstrate the quality of ENTYFI.

KnowFi We present KnowFi [Chu et al., 2021], for extracting relations between entities

coming from very long texts such as books, novels or fan-built wikis (Challenge C4).

KnowFi leverages semi-structured content in wikis of fan communities on fandom.com

(aka wikia.com) to extract initial KBs of background knowledge for 142 popular domains

(TV series, movies, games). This serves to identify interesting relations and to collect

distant supervision samples. Yet for many relations, this results in very few samples. To

overcome this sparseness challenge and to generalize the training across a wide variety of

relations, a similarity-based ranking technique is devised for matching seeds in text pas-

sages. Given a long input text, KnowFi judiciously selects a number of context passages

containing seed pairs of entities. To infer if a certain relation holds between two entities,

KnowFi’s neural network is trained jointly for all relations as a multi-label classifier.

Experiments with several fictional domains demonstrate the gains that KnowFi achieves

over the best prior methods for neural relation extraction.

The challenge C1 is addressed along with other challenges when working on above

tasks.

1.4 Publications

Specific results of this work have been published:

• KnowFi: Knowledge Extraction in Long Fictional Texts.

Cuong Xuan Chu, Simon Razniewski, Gerhard Weikum. Proceedings of the 3rd

Conference on Automated Knowledge Base Construction, AKBC 2021.

• ENTYFI: Entity Typing in Fictional Texts.

Cuong Xuan Chu, Simon Razniewski, Gerhard Weikum. Proceedings of the 13th

ACM International Conference on Web Search and Data Mining, WSDM 2020.

• ENTYFI: A System for Fine-grained Entity Typing in Fictional Texts.

Cuong Xuan Chu, Simon Razniewski, Gerhard Weikum. Proceedings of the 2020

Conference on Empirical Methods in Natural Language Processing, EMNLP 2020.

6

1.5. ORGANIZATION

• TiFi: Taxonomy Induction for Fictional Domains.

Cuong Xuan Chu, Simon Razniewski, Gerhard Weikum. Proceedings of the Web

Conference (the 28th International Conference on World Wide Web), WWW 2019.

The author of this dissertation is the main author of all these publications. Demon-

stration, code and data are also published and available at https://www.mpi-inf.

mpg.de/yago-naga/fiction-fantasy to accelerate further research in fictional

domains.

1.5 Organization

The remainder of this dissertation is organized as follows. Chapter 2 introduces back-

ground about knowledge bases and methodologies for sub-tasks in KB construction,

which include taxonomy induction, named entity recognition and typing and relation

extraction. Three following chapters describe our methods for solving these tasks in

fictional domains. Chapter 3 presents a method for taxonomy induction. Chapter 4

presents an end-to-end system for named entity recognition and typing from long fic-

tional texts. Chapter 5 presents a model for relation extraction that overcomes sparsity

in training data when working on long texts in fictional domains and Chapter 6 con-

cludes the dissertation with some discussions on open problems for knowledge extraction

in fictional domains.

7

Chapter 2

Background

2.1 Knowledge Bases

2.1.1 Encyclopedic Knowledge Bases

Encyclopedic knowledge represents facts about notable real-world entities such as person,

location, organization, etc. A knowledge base that contains this kind of knowledge is

called encyclopedic KB or entity-centric KB.

In general, an encyclopedic KB contains three primary pieces of information:

• entities, like people, events, products, organizations such as Albert Einstein,

Joe Biden, Iphone XS Max, WHO, etc.

• entity types or entity classes to which entities belong, for example, person, location

at coarse-grained level, or musicians, left-wing politician at finer-grained level.

• statements about entities (e.g. relations between entities), for example (Max Planck

isFatherOf Erwin Planck), or (Max Planck bornIn Kiel).

Additional information like temporal or spatial information is also presented in several

KBs such as YAGO 2 [Hoffart et al., 2013].

Large-scale encyclopedic KBs are YAGO, DBpedia and Wikidata. These KBs have

become major assets for enriching search engine and question answering systems. The

above KBs are mostly extracted from Wikipedia and enhanced by adding more extracted

knowledge from news articles.

2.1.2 Other Knowledge Bases

Along with encyclopedic knowledge, other kinds of knowledge have been also inves-

tigated, such as commonsense knowledge, product knowledge, and long-tail domain

9

CHAPTER 2. BACKGROUND

knowledge.

Commonsense knowledge embodies facts about classes and concepts, such as prop-

erties of concepts (gold hasProperty conductivity), relations between concepts (keyboard

partOf computer), and interaction between concepts (musician create song). Popular

commonsense KBs are Cyc [Lenat, 1995], ConceptNet [Liu and Singh, 2004], BabelNet

[Navigli and Ponzetto, 2010], Webchild [Tandon et al., 2014], Quasimodo [Romero et al.,

2019], and ASCENT [Nguyen et al., 2021]. Most of them are extracted from Web con-

tent, either manually or automatically, with hundred thousands of concepts and millions

of statements.

Product knowledge contains knowledge about products, product types, services,

etc. from commercial enterprises. This knowledge has been constructed to help com-

panies manage their internal data, improve customer service and marketing. Some

examples are Amazon product graph [Dong et al., 2020], Alibaba E-commerce graph

[Luo et al., 2020], or Bloomberg Knowledge Graph [Meij, 2019].

Long-tail domain knowledge contains knowledge about entities from long-tail do-

mains. For example, medical knowledge contains knowledge about medicine, disease,

symptoms, etc. Food knowledge presents knowledge about food, dishes, ingredients or

receipts. Or cultural knowledge describes information about customs and practices in

different countries. Not like other knowledge mentioned above, the sources for extracting

long-tail domain knowledge are very sparse and it usually requires domain experts to be

involved.

Fiction and fantasy are also archetypes of long-tail domains. Although there is a huge

potential, knowledge in fiction and fantasy has not yet received sufficient attention from

computer scientists. Section 2.4 describes related work on these domains in detail.

2.1.3 Applications

With structured knowledge extracted from noisy Internet content, knowledge bases have

been used in a wide variety of applications and downstream tasks.

Semantic search and question answering: Many commercial search engines incorpo-

rate data from KBs to improve their search results. For example, Google uses Google

Knowledge Graph, while Bing uses Microsoft Satosi and Facebook uses Graph Search.

By taking advantage of these knowledge bases, search-engine systems are able to provide

direct answers for queries from users. For instance, an answer “France national football

team” is directly given for the query “which team won world cup 2018?” by Google.

Since the KBs are entity-centric, a major use case is entity-oriented search, which utilizes

10

2.1. KNOWLEDGE BASES

large-scale KBs to improve representations of queries, documents (i.e. web pages), as

well as ranking results. In particular, entities from queries are disambiguated by rec-

ognizing and linking to existing KBs. In the example, “world cup 2018” is more likely

to be linked to 2018 FIFA World Cup, instead of other events such as 2018 ITTF Team

World Cup or 2018 Athletics World Cup, hence, a football team is returned. On the

other hand, document representation can be enriched by annotating entities (i.e. seman-

tic web) and adding the information into the vector space model [Ensan and Bagheri,

2017, Liu and Fang, 2015, Raviv et al., 2016].

Question answering also leverages data from KBs. IBM Waston used knowledge bases

like YAGO and DBpedia in the Jeopardy game show [Ferrucci et al., 2010]. In recent

years, many methods of question answering over knowledge bases have been developed.

The goals of these tasks are to understand question semantics, reduce the search space

and retrieve accurate answers efficiently [Christmann et al., 2019, Wu et al., 2019].

Recommender systems and chatbots: With the advance of artificial intelligence,

digital assistants, such as recommender systems and chatbots, have become more and

more popular. For example, a user can interact with a recommender system to find a

good movie, or communicate with a chatbot to find out what services a store is providing.

Using only users’ data, such as user-item interactions, is not enough for these systems

to be able to work properly. To overcome the issue, recent systems and studies start to

consider KBs as a source for background information. Large-scale KBs such as Wikidata

have become good choices [Gao et al., 2021, Jannach et al., 2020]. Social chatbots and

digital assistants such as Cortana, Siri or Alexa use KBs as key assets. Many e-commerce

companies also construct their own KBs to improve customer services, such as Amazon

and Alibaba.

Text and visual understanding: With a lot of ambiguities on texts, downstream tasks

and applications need to understand the meaning of the input text. For example, a user

asks a digital assistant to “play some songs of Monkees member David Jones”. In this

case, the assistant knows that the mention “David Jones” should be linked to David

Jones (aka Davy Jones), a member of Monkees. However, if the user only asks to “play

some songs of David Jones”, how does the assistant know which entity the mention

“David Jones” should be linked to, Davy Jones (member of Monkees) or singer David

Jones (aka David Bowie)? KBs are the key assets to distinguish the meanings of the

input words. For instance, WordNet, a lexical database for English, contains synsets

of hundred thousands of English concepts with their descriptions. Commonsense KBs

such as ConceptNet, Webchild, contain millions of concepts, along with their properties.

11

CHAPTER 2. BACKGROUND

Entity-centric KBs such as YAGO, DBpedia, contain millions of unique entities. Word

and entity disambiguation is not only useful for digital assistants, but also for other

downstream tasks such as search, question answering or machine translation [Shen et al.,

2014].

Although recent works on visual understanding, such as object detection, have achieved

impressive results with the advances of deep learning, leveraging external knowledge can

further improve the performance of deep learning models. For example, with common-

sense knowledge, the model should be able to learn that a tennis racket usually appears

along with a tennis ball, and not along with other similar objects like a lemon or an

orange [Chowdhury et al., 2019, Nag Chowdhury et al., 2021].

2.2 Knowledge Base Construction

2.2.1 Manual Construction

The idea of constructing a knowledge base was first pursued in the 1980s, with Cyc

[Lenat, 1995] being a seminal project. By manually construction, Cyc contained hundred

thousands of concepts and millions of facts.

WordNet [Fellbaum and Miller, 1998] is a lexical database for English. WordNet de-

scribes the relations between concept synsets, which include synonymy, hypo-hypernymy,

and mero-holonymy. The most recent WordNet database contains more than 155k words

which belong to more than 117k synsets and the number of word-sense pairs is over 200k.

WordNet is carefully handcrafted and has high accuracy, but low coverage of concepts

and statements. VerbNet [Schuler, 2005] is also a lexical database for English, which

focuses on English verbs and is compatible with WordNet .

With the advances of the internet, people are able to collaborate with others on such

projects. Wikidata [Vrandečić and Krötzsch, 2014] is a project that was established

based on this idea. By providing a free open API, Wikidata can be read and edited by

both humans and machines. Wikidata contains more than 95M data items with almost

10k predicates and millions of facts. Wikidata can be considered as the largest project

on constructing KBs with over 25k active users and over 1.5B edits that have been made

since the project launched.

By manually constructing, the advantages of these systems are having high quality

and easily maintained. However, due to high cost and much time consuming, they are

not scalable and have low coverage.

12

2.2. KNOWLEDGE BASE CONSTRUCTION

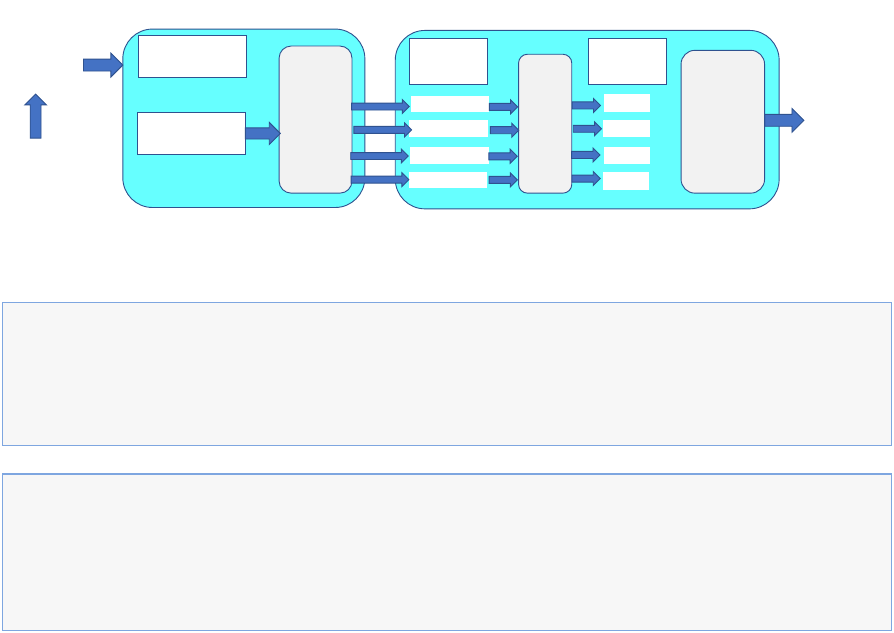

Input Text

(Wikipedia, News,

Books, Social, etc.)

Taxonomy Induction

Named Entity Recognition,

Typing & Disambiguation

Relation Extraction

Knowledge Bases

Taxonomy for Entities Entities Names and Types Relational Statements

Figure 2.1: A general framework for automated knowledge extraction.

2.2.2 Automated KB Construction

Since the late 2000s, there is a variety of knowledge bases that have been built automat-

ically, such as YAGO, DBpedia, Freebase, ConceptNet, BabelNet, NELL, WebIsALOD,

etc. Compared to handcrafted KBs, these KBs are much larger, with millions of entities,

hundred thousands of entity types and hundred millions to billions of assertions.

The output of automated extraction methods is usually represented as in one of the

following two types: schema-free and schema-based. Since concepts and relations in

a schema-free KB do not follow any ontology, it is hard to infer new knowledge from

existing knowledge. Most of KBs, especially encyclopedic KBs, therefore, are schema-

based, where components follow a specific ontology (e.g. relations between entities are

pre-defined, the entity types are pre-defined, etc.). Figure 2.1 shows a basic framework

to construct schema-based knowledge. The following subsections give an overview on

state-of-the-art methods for each task in the framework in detail.

Taxonomy Induction

Taxonomies, also known as type systems or class subsumption hierarchies, are an im-

portant resource for a variety of tasks and a core piece in knowledge graphs. Taxonomy

induction, hence, is a common problem that has been explored in many works [de Melo

and Weikum, 2010, Flati et al., 2014, Gupta et al., 2016b, 2017b, Ponzetto and Strube,

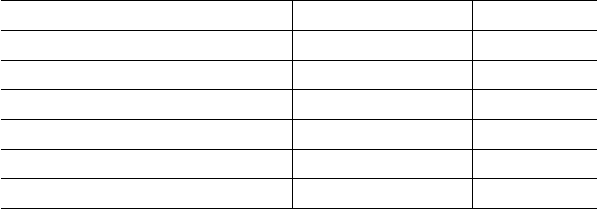

2007], which can be classified based on two dimensions: input source and model. Figure

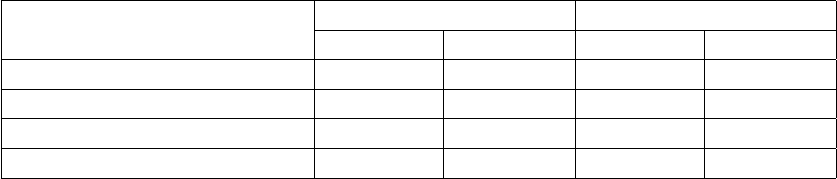

2.2 shows design space for the taxonomy induction task.

In the timeline of taxonomy induction, using Hearst patterns [Hearst, 1992] seems to

be the earliest method. With the simple patterns such as “X is a Y”, “X such as Y

and Z”, the method is able to achieve very high precision when working on unstructured

texts and still part of other advanced approaches.

With the rapid expansion of Wikipedia, there is a variety of methods that use Wikipedia

as the input for taxonomy induction. Along with encyclopedic information about enti-

ties, Wikipedia also provides categories, which groups Wikipedia pages and other related

categories as well. The categories can be organized as a directed graph, and are often

13

CHAPTER 2. BACKGROUND

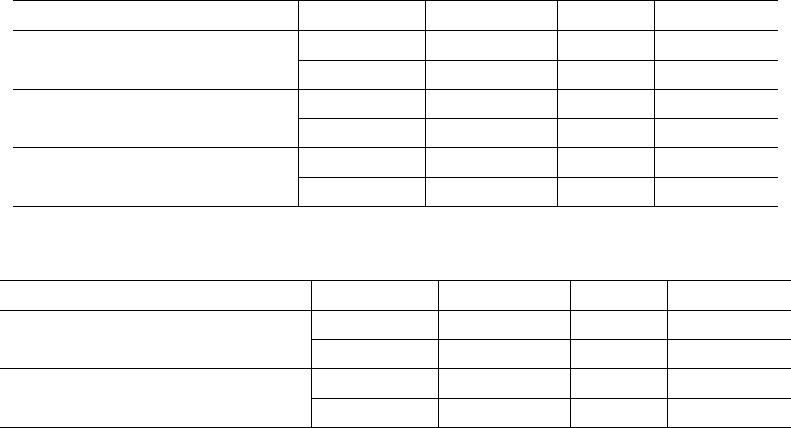

unstructured texts structured contents

unsupervised

supervised

[Ponzetto and Strube, 2007]

[Ponzetto and Navigli, 2009]

[Ponzetto and Strube, 2011]

[Hoffart et al., 2013, Suchanek et al., 2007]

[Auer et al., 2007]

[Flati et al., 2016]

[Gupta et al., 2016c]

[de Melo and Weikum, 2010]

[Bansal et al., 2014]

[Faralli et al., 2017]

[Martel and Zouaq, 2021]

[Hearst, 1992]

[Snow et al., 2005]

[Roller and Erk, 2016]

[Gupta et al., 2017a]

[Faralli et al., 2019]

[Roller et al., 2014]

[Yu et al., 2015]

[Nguyen et al., 2017b]

[Vu and Shwartz, 2018]

Figure 2.2: Design space for taxonomy induction.

referred to as Wikipedia category network (WCN). By leveraging the information from

WCN and other existing ontologies like WordNet, these methods are able to construct

large-scale full-fledged ontologies with high accuracy. Some notable works are WikiTax-

onomy [Ponzetto and Navigli, 2009, Ponzetto and Strube, 2007, 2011], WikiNet [Nastase

et al., 2010], YAGO, DBpedia, MENTA [de Melo and Weikum, 2010], MultiWibi [Flati

et al., 2014] and HEAD [Gupta et al., 2016c]. Among these works, MENTA [de Melo

and Weikum, 2010] was one of the largest multilingual lexical knowledge bases with over

5.4 million entities in more than 270 languages. In the case of English only, ProBase

[Wu et al., 2012a] contains over 20 million isA pairs between over 2.6 million concepts.

With advanced deep neural models, many recent approaches utilize distributional

representations of entity types [Nguyen et al., 2017b, Roller et al., 2014, Vu and Shwartz,

2018, Yu et al., 2015], and classify hypernym relations between the entity type pairs using

supervised techniques. Some of the methods leverage existing knowledge graphs, like

YAGO, DBpedia and learn their embeddings to automatically extract the taxonomies

[Martel and Zouaq, 2021].

Named Entity Recognition and Typing (NER)

Named entity recognition is the task of identifying named entities in natural language

texts and classifying them into coarse-grained semantic types such as person, location,

14

2.2. KNOWLEDGE BASE CONSTRUCTION

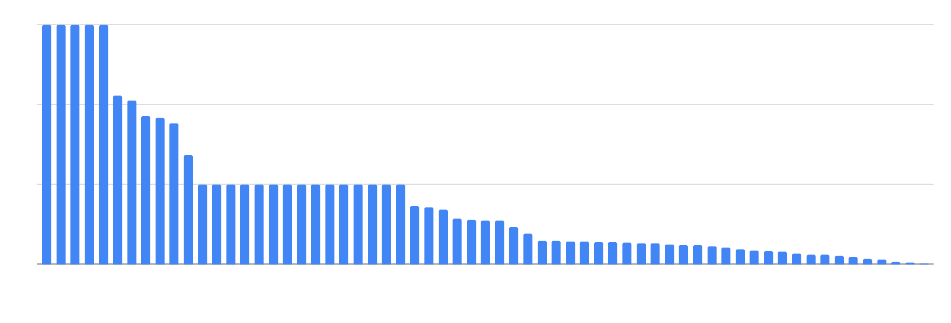

rule-based deep-learning basedunsupervised feature-based

[Black et al., 1998]

[Mikheev et al., 1999]

[Sekine and Nobata, 2004]

[Hanisch et al., 2005]

[Zhang and Elhadad, 2013]

[Quimbaya et al., 2016]

[Collins and Singer, 1999]

[Etzioni et al., 2005]

[Nadeau et al., 2006]

[Zhang and Elhadad, 2013]

[Zhou and Su, 2002]

[Bender et al., 2003]

[McCallum and Li, 2003]

[Krishnan and Manning, 2006]

[Szarvas et al., 2006]

[Torisawa et al., 2007]

[Liao and Veeramachaneni, 2009]

[Hoffart et al., 2011]

[Nguyen et al., 2017a]

[Nguyen et al., 2016]

[Kuru et al., 2016]

[Rei et al., 2016]

[Ma and Hovy, 2016]

[Zheng et al., 2017]

[Li et al., 2017]

[Moon et al., 2018]

[Jie and Lu, 2019]

[Devlin et al., 2019b]

Figure 2.3: Design space for named entity recognition.

organization and misc [Collins and Singer, 1999, Grishman and Sundheim, 1996, Li

et al., 2020a, Zhang and Elhadad, 2013, Zheng et al., 2017]. NER has been investigated

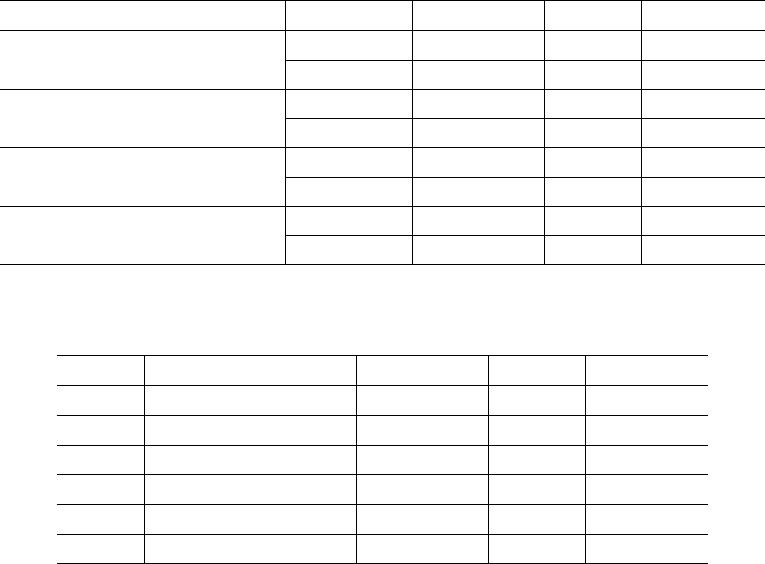

since the 90s and achieved remarkable results, along with the development of machine

learning. Figure 2.3 shows the design space for NER, which can be classified into four

main streams: 1) rule-based approaches, 2) unsupervised learning approaches, 3) feature-

based supervised learning approaches, and 4) deep-learning based approaches.

Rule-based approaches usually design hand-crafted semantic and syntactic rules to

recognize entities [Black et al., 1998, Hanisch et al., 2005, Zhang and Elhadad, 2013].

These rules are based on domain-specific dictionaries and syntactic-lexical patterns.

However, due to insufficiency in dictionaries, these methods often achieve low recall and

are hardly transferred to other domains.

Unsupervised learning approaches, like clustering, recognize named entities by com-

puting their context similarity with other “seed” entities. The similarity score is usually

based on lexical form (the noun phrase and its surrounding context) and statistics (e.g.

frequency, context vectors) from a large corpus [Collins and Singer, 1999, Nadeau et al.,

2006, Zhang and Elhadad, 2013].

Feature-based approaches, on the other hand, exploit different features, such as mor-

phology, part-of-speech tags, dependency relations, and use machine learning algorithms

such as Hidden Markov Models (HMM), Support Vector Machines (SVM) or Conditional

15

CHAPTER 2. BACKGROUND

Random Fields (CRF), to cast NER into a sequence tagging task or a multi-class clas-

sification problem [Hoffart et al., 2011, McCallum and Li, 2003, Torisawa et al., 2007,

Zhou and Su, 2002]. Among these models, CRF-based NER has been widely applied,

not only in mainstream texts, but also in domain-specific texts, such as medical texts

[Funk et al., 2014], chemical texts [Rocktäschel et al., 2012] or product-related texts

[Shang et al., 2018].

Similar to other NLP tasks, deep-learning based approaches have gained much at-

tention recently, and also achieve state-of-the-art results on NER [Devlin et al., 2019b,

Nguyen et al., 2016, Zheng et al., 2017]. With sufficient training data, deep-learning

models are able to exploit hidden features without engineering. Input of deep-learning

models are usually distributional representations of texts, such as word-level representa-

tion [Nguyen et al., 2016, Zheng et al., 2017], character-level representation [Kuru et al.,

2016] or contextualized language-model embeddings [Devlin et al., 2019b]. Models for

NER vary from convolutional neural networks (CNN) [Strubell et al., 2017, Yao et al.,

2015], to recurrent neural networks (e.g. gated recurrent unit – GRU, long-short term

memory – LSTM) [Ju et al., 2018, Katiyar and Cardie, 2018, Ma and Hovy, 2016] and

deep transformers (e.g. transformer, BERT) [Devlin et al., 2019b, Vaswani et al., 2017].

Named entity typing is the task of identifying semantic classes for named entities in

textual contexts. While NER focuses on recognition of the entities and distinguishes

them into a few coarse-grained types such as person, organization, location, named

entity typing usually works on a fine-grained level, where entity mentions are classified

into hundreds to thousands of types [Choi et al., 2018, Lee et al., 2006, Ling and Weld,

2012]. Figure 2.4 shows the design space for named entity typing.

As other extraction tasks, pattern-based approaches design specific patterns that de-

scribe relations between entity mentions and classes in texts. For example, a text snippet

like “hobbits such as Frodo and Sam” suggests that Frodo and Sam belong to the class

hobbit. This pattern and other similar patterns are well known as Hearst patterns and

are widely used, especially when the type system is not available [Hearst, 1992, Seitner

et al., 2016]. On the other hand, supervised typing has gained more attention when the

taxonomies (e.g. type systems) are pre-defined. These methods leverage information

about surrounding contexts of entity mentions as features to classify entity mentions.

The features consist of lexical, syntactic and semantic features [Corro et al., 2015, Ling

and Weld, 2012, Yogatama et al., 2015, Yosef et al., 2012]. Recently, more neural meth-

ods are being investigated on entity typing and able to classify entity mentions into

hundreds to thousands of types [Choi et al., 2018, Shimaoka et al., 2017, Xiong et al.,

16

2.2. KNOWLEDGE BASE CONSTRUCTION

non-available type system available type system

pattern-based

supervised learning

[Hearst, 1992]

[Corro et al., 2015]

NER

[Ling and Weld, 2012]

[Yogatama et al., 2015]

[Shimaoka et al., 2017]

[Choi et al., 2018]

[Xiong et al., 2019]

[Lin and Ji, 2019]

Figure 2.4: Design space for named entity typing.

2019]. Some notable neural models are LSTM with attention mechanism [Choi et al.,

2018, Lin and Ji, 2019, Shimaoka et al., 2017] and deep transformers [Eberts et al., 2020,

Onoe and Durrett, 2019, 2020].

Relation Extraction

Relation extraction (RE) is the task of identifying semantic relations between two given

entities. The input of the task is either semi-structured texts like infoboxes from

Wikipedia pages, or unstructured texts like Wikipedia pages and news articles. Based

on the input, a wide range of methods have been proposed, which can be classified into

two main classes: pattern-based approaches [Carlson et al., 2010a, Kim and Moldovan,

1995, Nakashole et al., 2012, Soderland et al., 1995] and supervised approaches, where

deep-learning models currently achieve state-of-the-art results [Han et al., 2020a, Soares

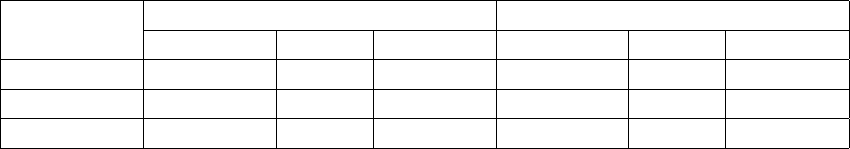

et al., 2019, Wang et al., 2020, Zhou et al., 2021]. Figure 2.5 shows design space for

relation extraction.

Early methods on RE utilize lexical and syntactic structure from text to manually

design patterns. In the case of semi-structure texts, these patterns are induced by

using web scraping [Auer et al., 2007, Hoffart et al., 2013]. For example, Figure 2.6

shows a snapshot from the Wikia infobox of the entity Zeus in Greek mythology and

the Wiki markup table extracted from the dump file of the Wiki page

1

. In the case of

unstructured texts, the lexical and dependency features are usually used to construct the

1

https://greekmythology.wikia.org/wiki/Zeus

17

CHAPTER 2. BACKGROUND

semi-structured texts document-level

pattern-based

deep-learning based

sentence-level

[Soderland et al., 1995]

[Kim and Moldovan, 1995]

[Mooney, 1999]

[Suchanek et al., 2007]

[Auer et al., 2007]

[Hertling and Paulheim, 2020]

[Hearst, 1992]

[Huffman, 1995]

[Carlson et al., 2010a]

[Nakashole et al., 2012]

[Riedel et al., 2013]

[Lin et al., 2015]

[Kambhatla, 2004]

[Zhou et al., 2005]

[Wang, 2008]

[Gormley et al., 2015]

[Zhang et al., 2017b]

[Cui et al., 2018]

[Trisedya et al., 2019]

[Shi and Lin, 2019]

[Soares et al., 2019]

[Nguyen et al., 2007]

[Weston et al., 2013]

[Srivastava et al., 2016a]

[Labatut and Bost, 2019]

[Wang et al., 2019]

[Wang et al., 2020]

[Zhou et al., 2021]

Figure 2.5: Design space for relation extraction.

patterns. For example, a regular expression <A .* born in .* B> indicates that entity

A hasBirthplace B, or a simple entity-type-based pattern <PERSON (write(s?)|wrote)

BOOK> indicates the relation hasAuthor between a book and a person. The drawback of

pattern-based methods is requiring intervention from human experts, hence costly and

not scalable.

Supervised approaches, on the other hand, are more scalable and require less human ef-

fort. In terms of models, supervised approaches can be classified into two types: feature-

based approaches and deep-learning based approaches. Feature-based approaches design

lexical, syntactic and semantic features for the entity pairs, based on their surrounding

context, and use these features in the classification models, such as logistic regression,

support vector machine or graphical models [Kambhatla, 2004, Nguyen et al., 2007, Zhou

et al., 2005]. In contrast, deep-learning based approaches do not require feature engi-

neering and are able to automatically extract hidden semantic features from the text.

With the advance of neural network models, a variety of models have been proposed and

are able to work on texts with different granularities that include sentence-level RE and

document-level RE. For example, convolutional neural networks (CNNs) [Wang et al.,

2016, Zeng et al., 2014] work on short sequence text, with a fix window size of length,

recurrent neural networks (RNNs) [Lee et al., 2019, Xu et al., 2016, Zhang et al., 2015]

work on longer sequence text, attention-based neural networks [Guo et al., 2019, Lin

18

2.2. KNOWLEDGE BASE CONSTRUCTION

Figure 2.6: Zeus infobox from Greek Mythology.

et al., 2016] emphasize weight on specific positions in text (e.g. attention mechanism),

and graph-based neural networks (GNNs) that build entity graphs from text, work on

long texts and are able to infer global relations between entity pairs [Wang et al., 2020,

Zhou et al., 2021]. Inputs for deep-learning models are usually semantic representations

of words (e.g. word embeddings that are learned from pre-trained language models) and

position embeddings of words in the context [Mikolov et al., 2013, Zhang et al., 2017a].

With respect to the performance, Transformers [Vaswani et al., 2017] and BERT [Devlin

et al., 2019b] have recently achieve new start-of-the-art results on relation extraction.

Different from pattern-based models, supervised approaches require training data,

especially for the tasks with pre-specified relations. Besides manually creating training

data [Zhang et al., 2017b], a large number of methods use distant supervision techniques

to collect more training data [Mintz et al., 2009, Suchanek et al., 2009, Yao et al., 2019].

Distant supervision leverages existing knowledge from KGs to collect positive training

samples. The idea is that, for any entity pair with relation r in KGs, if a text (e.g.

sentence or paragraph) mentions both of them, the text can be considered as one positive

training sample for the relation r. However, producing many false positives is the main

drawback of distant supervision. To be able to overcome this, some methods have

been proposed to denoise distant supervision, such as selecting informative instances in

each training batch or from a batch of instances with the same entity pairs [Li et al.,

2020b, Riedel et al., 2010], or incorporating with information from other resources (e.g.

19

CHAPTER 2. BACKGROUND

knowledge bases, multilingual datasets) [Ji et al., 2017, Wang et al., 2018].

2.3 Input Sources

Having been investigated for a long time, automated methods leverage a wide range of

sources as input for knowledge extraction. In general, these sources can be classified

into four main categories as follows.

1. Handcrafted Data. This kind of data is manually created by human experts

with high quality and clean structure, for example, WordNet and Wikidata. It

can be used as seed knowledge to collect more knowledge from other sources (e.g.

with distant supervision).

2. Semi-structured Data. Not as high quality as handcrafted data, semi-structured

data addresses the problem of scalability with better coverage and sufficient quality.

The most prominent data in this setting comes from Wikipedia, which includes

Wikipedia category networks, infoboxes of entity pages, and other formats like

tables and lists. With semi-structured input, knowledge can be extracted by using

pattern-based approaches.

3. Unstructured Data. Most of the text data on the internet is unstructured,

spanning from new articles, web pages into text documents like books, movie

scripts or technical descriptions, etc. Knowledge extraction from these sources

requires advanced models that are able to infer semantics from text.

4. Social Media. Text from online users on social media platforms like social net-

works, discussion forums, etc., can be classified as unstructured data. However,

the average length of text sequences from these sources is usually short and knowl-

edge that is expressed in these texts is quite noisy and sparse. Dealing with these

texts requires more cleaning processes and a large amount of data.

Wikipedia Wikipedia is the most popular and richest source for knowledge extraction.

It contains encyclopedic knowledge of millions of entities and across over three hundred

languages. Wikipedia organizes its pages following a category network which becomes

rich resources for taxonomy induction. Each entity page in Wikipedia also contains

an infobox that stores basic information about the entity. With semi-structured for-

mat, Wikipedia infoboxes are great resources for knowledge or relation extraction. The

content in Wikipedia pages is written using the Wiki markup language. With the crisp

20

2.4. NLP FOR FICTIONAL TEXTS

content, text from Wikipedia is valuable for entity recognition, disambiguation and link-

ing, and relation extraction. Many large KBs have been built from Wikipedia, such as

YAGO, DBpedia, Freebase, etc. However, Wikipedia favors entities in the real world,

so that it lacks knowledge in long-tail domains where fiction and fantasy are typical

examples.

Wikia (Fandom) Wikia or Fandom

2

is the largest web platform for organized fan

communities for fictional universes. As of July 2018, its Alexa rank is 49 worldwide

(and 19 in the US). It contains over 380,000 fan-built communities. For example, the

The Lord of the Rings universe

3

contains 6,229 content pages, while the Star Wars

universe contains more than 170,000. Wikia is also constructed similarly to Wikipedia,

with each universe is organized as a Wiki, so that it also contains pages of entities in

the universe, infoboxes and category networks. With tremendous contribution from fans

on creating the content, Wikia has become a great source for knowledge extraction in

fictional domains [Hertling and Paulheim, 2020].

2.4 NLP for Fictional Texts

With a huge interest in fiction and fantasy, various aspects related to fictional texts have

been investigated, especially in literature and culture studies [Labatut and Bost, 2019].

Along with narrative extraction (e.g. storyline analysis), character network extraction

is one of the most popular tasks that have been tackled in these domains. This task

involves several sub-tasks such as character detection, character interaction detection,

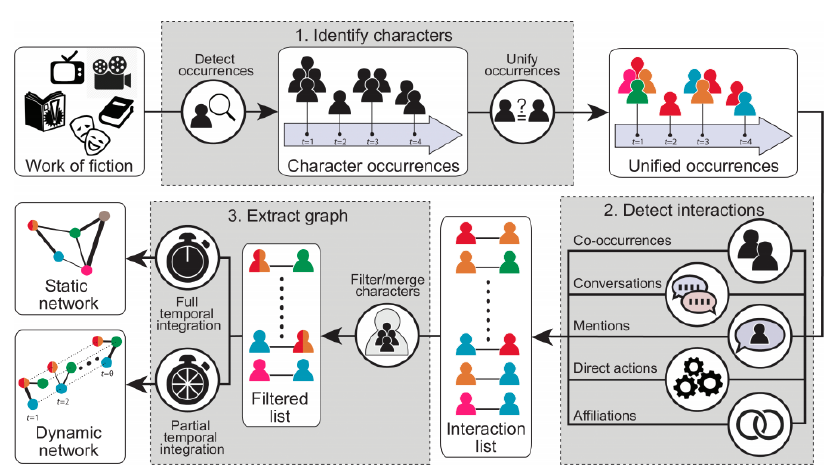

and character graph construction. Figure 2.7 shows an overview of the basic character

network extraction process [Labatut and Bost, 2019].

In particular, Vala et al. [2015] proposed a graph-based model to detect characters and

their occurrences in novels, while a number of authors apply traditional NER systems to

run on novels and only keep PERSON entities as character names [Chaturvedi et al., 2017,

Elson et al., 2010, Srivastava et al., 2016a]. Very few works consider other categories

such as LOCATION or ORGANIZATION [Labatut and Bost, 2019]. In the case of relation ex-

traction, many works focus on character networks whether the characters have the same

occurrences, conversations, or directly interact with each other [Chaturvedi et al., 2016a,

Makazhanov et al., 2014, Srivastava et al., 2016a]. Makazhanov et al. [2014] propose

a heuristic approach to detect family relations between characters. Based on vocative

2

www.fandom.com

3

https://lotr.fandom.com/

21

CHAPTER 2. BACKGROUND

Figure 2.7: Overview of the basic character network extraction process [2019].

utterances, the relation candidates are filtered by manual constraints. Chaturvedi et al.

[2016a] present a Markov model to capture interactions between characters and detect

friendly vs. hostile signals. Srivastava et al. [2016a] leverage both text-based and struc-

tural cues for learning a model to infer interpersonal relations in narrative summaries.

The common between all of above methods is taking books or fan fiction as the input

source.

In the case of leveraging the richness of Wikia, DBkWik [Hertling and Paulheim,

2018, Hofmann et al., 2017] uses the DBpedia framework to extract a knowledge graph

from thousands of Wikis. The framework focuses on extracting information from semi-

structured sources such as infoboxes or wiki category networks of Wikia pages.

22

Chapter 3

TiFi: Taxonomy Induction for Fictional

Domains

3.1 Introduction

3.1.1 Motivation and Problem

Taxonomy Induction: Taxonomies, also known as type systems or class subsumption

hierarchies, are an important resource for a variety of tasks related to text comprehen-

sion, such as information extraction, entity search or question answering. They repre-

sent structured knowledge about the subsumption of classes, for instance, that electric

guitar players are rock musicians and that state governors are politicans. Tax-

onomies are a core piece of large knowledge graphs (KGs) such as DBpedia, Wiki-

data, Yago and industrial KGs at Google, Microsoft Bing, Amazon, etc. When search

engines receive user queries about classes of entities, they can often find answers by

combining instances of taxonomic classes. For example, a query about “left-handed

electric guitar players” can be answered by intersecting the classes left-handed people,

guitar players and rock musicians; a query about “actors who became politicans”

can include instances from the intersection of state governors and movie stars such as

Schwarzenegger. Also, taxonomic class systems are very useful for type-checking answer

candidates for semantic search and question answering [Kalyanpur et al., 2011].

Taxonomies can be hand-crafted, examples being WordNet [Fellbaum and Miller,

1998], SUMO [Niles and Pease, 2001] or MeSH and UMLS [Bodenreider, 2004], or auto-

matically constructed by taxonomy induction from textual or semi-structured cues about

type instances and subtype relations. Methods for the latter include text mining using

Hearst patterns [Hearst, 1992] or bootstrapped with Hearst patterns (e.g., [Wu et al.,

2012b]), harvesting and learning from Wikipedia categories as a noisy seed network (e.g.,

23

CHAPTER 3. TIFI: TAXONOMY INDUCTION FOR FICTIONAL DOMAINS

[de Melo and Weikum, 2010, Flati et al., 2014, Gupta et al., 2016c, Ponzetto and Nav-

igli, 2009, Ponzetto and Strube, 2007, 2011, Suchanek et al., 2007, Wu et al., 2008]),

and inducing type hierarchies from query-and-click logs (e.g., [Gupta et al., 2014, Pasca,

2013, Pasca and Durme, 2007]).

The Case for Fictional Domains: Fiction and fantasy are a core part of human

culture, spanning from traditional literature to movies, TV series and video games.

Well known fictional domains are, for instance, the Greek mythology, the Mahabharata,

Tolkien’s Middle-earth, the world of Harry Potter, or the Simpsons. These universes

contain many hundreds or even thousands of entities and types, and are subject of search-

engine queries – by fans as well as cultural analysts. For example, fans may query about

Muggles who are students of the House of Gryffindor (within the Harry Potter universe).

Analysts may be interested in understanding character relationships [Bamman et al.,

2014, Iyyer et al., 2016, Srivastava et al., 2016b], learning story patterns [Chambers

and Jurafsky, 2009, Chaturvedi et al., 2017] or investigating gender bias in different

cultures [Agarwal et al., 2015]. Thus, organizing entities and classes from fictional

domains into clean taxonomies (see example in Fig. 3.1) is of great value.

Challenges: While taxonomy construction for encyclopedic knowledge about the real

world has received considerable attention already, taxonomy construction for fictional

domains is a new problem that comes with specific challenges:

1. State-of-the-art methods for taxonomy induction make assumptions on entity-class

and subclass relations that are often invalid for fictional domains. For example, they

assume that certain classes are disjoint (e.g., living beings and abstract entities, the

oracle of Delphi being a counterexample). Also, assumptions about the surface forms

of entity names (e.g., on person names: with or without first name, starting with

Orcs

Goblins Swords

Weapons

Objects

Sieges

Wars

abstract_entity physical_entity

entity

living_thingEvents

(a) LoTR

Sith

Sith Lords Steels

Alloys

Substancesliving_thing

Deities

Religions

abstract_entity physical_entity

entity

Culture

(b) Star Wars

Figure 3.1: Excerpts of LoTR and Star Wars taxonomies.

24

3.1. INTRODUCTION

Mr., Mrs., Dr., etc.) and typical phrases for classes (e.g., noun phrases in plural

form) do not apply to fictional domains.

2. Prior methods for taxonomy induction intensively leveraged Wikipedia categories,

either as a content source or for distant supervision. However, the coverage of fiction

and fantasy in Wikipedia is very limited, and their categories are fairly ad-hoc. For

example, Lord Voldemort is in categories like Fictional cult leaders (i.e., people),

J.K. Rowling characters (i.e., a meta-category) and Narcissism in fiction (i.e.,

an abstraction). And whereas Harry Potter is reasonably covered in Wikipedia, fan

websites feature many more characters and domains such as House of Cards (a TV

series) or Hyperion Cantos (a 4-volume science fiction book) that are hardly captured

in Wikipedia.

3. Both Wikipedia and other content sources like fan-community forums cover an ad-

hoc mixture of in-domain and out-of-domain entities and types. For example, they

discuss both the fictional characters (e.g., Lord Voldemort) and the actors of movies

(e.g., Ralph Fiennes) and other aspects of the film-making or book-writing.

The same difficulties arise also when constructing enterprise-specific taxonomies from

highly heterogeneous and noisy contents, or when organizing types for highly specialized

verticals such as medieval history, the Maya culture, neurodegenerative diseases, or nano-

technology material science. Methodology for tackling such domains is badly missing.

We believe that our approach to fictional domains has great potential for being carried

over to such real-life settings. This work focuses on fiction and fantasy, though, where

raw content sources are publicly available.

3.1.2 Approach and Contribution

In this work we develop the first taxonomy construction method specifically geared

for fictional domains. We refer to our method as the TiFi system, for Taxonomy

induction for Fiction. We address Challenge 1 by developing a classifier for categories

and subcategory relationships that combines rule-based lexical and numerical contextual

features. This technique is able to deal with difficult cases arising from non-standard

entity names and class names. Challenge 2 is addressed by tapping into fan community

Wikis (e.g., harrypotter.wikia.com). This allows us to overcome the limitations of

Wikipedia. Finally, Challenge 3 is addressed by constructing a supervised classifier for

distinguishing in-domain vs. out-of-domain types, using a feature model specifically

designed for fictional domains.

Moreover, we integrate our taxonomies with an upper-level taxonomy provided by

25

CHAPTER 3. TIFI: TAXONOMY INDUCTION FOR FICTIONAL DOMAINS

WordNet, for generalizations and abstract classes. This adds value for searching by

entities and classes. Our method outperforms the state-of-the-art taxonomy induction

system for the first two steps, HEAD [Gupta et al., 2016c], by 21-23% and 6-8% per-

centage points in F1-score, respectively. An extrinsic evaluation based on entity search

shows the value that can be derived from our taxonomies, where, for different queries, our

taxonomies return answers with 24% higher precision than the input category systems.

TiFi datasets are available at https://www.mpi-inf.mpg.de/index.php?id=3971.

3.2 Related Work

Text Analysis and Fiction Analysis and interpretation of fictional texts are an im-

portant part of cultural and language research, both for the intrinsic interest in under-

standing themes and creativity [Chambers and Jurafsky, 2009, Chaturvedi et al., 2017],

and for extrinsic reasons such as predicting human behaviour [Fast et al., 2016] or mea-

suring discrimination [Agarwal et al., 2015]. Other recurrent topics are, for instance,

to discover character relationships [Bamman et al., 2014, Iyyer et al., 2016, Srivastava

et al., 2016b], to model social networks [Bamman et al., 2014, Elangovan and Eisenstein,

2015], or to describe personalities and emotions [Elson et al., 2010, Jhavar and Mirza,

2018]. Traditionally requiring extensive manual reading, automated NLP techniques

have recently lead to the emergence of a new interdisciplinary subject called Digital Hu-

manities, which combines methodologies and techniques from sociology, linguistics and

computational sciences towards the large-scale analysis of digital artifacts and heritage.

Taxonomy Induction from Text Taxonomies, that is, structured hierarchies of classes

within a domain of interest, are a basic building block for knowledge organization and

text processing, and crucially needed in tasks such as entity detection and linking, fact

extraction, or question answering. A seminal contribution towards their automated con-

struction was the discovery of Hearst patterns [Hearst, 1992], simple syntactic patterns

like “X is a Y” that achieve remarkable precision, and are conceptually still part of

many advanced approaches. Subsequent works aim to automate the process of discov-

ering useful patterns [Roller and Erk, 2016, Snow et al., 2005]. Recent work by Gupta

et al. [Gupta et al., 2017a] uses seed terms in combination with a probabilistic model to

extract hypernym subsequences, which are then put into a directed graph from which the

final taxonomy is induced by using a minimum cost flow algorithm. Other approaches

utilize distributional representations of types [Nguyen et al., 2017b, Roller et al., 2014,

Vu and Shwartz, 2018, Yu et al., 2015], or aim to learn them pairwise [Yu et al., 2015]

26

3.2. RELATED WORK

or hierarchically [Nguyen et al., 2017b].

Taxonomy Construction using Wikipedia A popular structured source for taxonomy

construction is the Wikipedia category network (WCN) for taxonomy induction. The

WCN is a collaboratively constructed network of categories with many similarities to

taxonomies, expressing for instance that the category Italian 19th century composers

is a subcategory of Italian Composers. One project, WikiTaxonomy [Ponzetto and